Automating web scraping with Python Scripts and Spiders can help in resolving many challenges. There are numerous scrapers, both premium and open-source, that help with this. However, while choosing a scraper, one should always look for one utilizing Python Scripts and Spiders, so the gathered data is easily extractable, readable, and expressive.

Here, this article will discuss different aspects of automated web scraping using Python scripts and spiders.

Why Python Scripts and Spiders are Used to Automate Web Scraping?

Python is one of the easier programming languages to learn, easier to read, and simpler to write in. It has a brilliant collection of libraries, making it perfect for scraping websites. One can continue working further with the extracted data using Python scripts too.

Also, the lack of using semicolons “;” or curly brackets “{ }” makes it easier to learn Python and code in this language. The syntax in Python is clearer and easier to understand. Developers can navigate between different blocks of code simply with this language.

One can write a few lines of code in Python to complete a large scraping task. Therefore, it is quite time-efficient. Also, since Python is one of the popular programming languages, the community is very active. Thus, users can share what they are struggling with, and they will always find someone to help them with it.

On the other hand, spiders are web crawlers operated by search engines to learn what web pages on the internet contain. There are billions of web pages on the internet, and it is impossible for a person to index what each page contains manually. In this manner, the spider helps automate the indexing process and gathers the necessary information as instructed.

What are the Basic Scraping Rules?

Anyone trying to scrape data from different websites must follow basic web scraping rules. There are three basic web scraping rules:

- Check the terms and conditions of the website to avoid legal issues

- Avoid requesting data from websites aggressively as it can harm the website

- Have adaptable code adapt to website changes

So, before using any scraping tool, users need to ensure that the tool can follow these basic rules. Most web scraping tools extract data by utilizing Python codes and spiders.

Common Python Libraries for Automating Web Crawling and Scraping

There are many web scraping libraries available for Python, such as Scrapy and Beautiful Soup. These libraries make writing a script that can easily extract data from a website.

Here are some of the most popular ones include:

Scrapy: A powerful Python scraping framework that can be used to write efficient and fast web scrapers.

BeautifulSoup: A Python library for parsing and extracting data from HTML and XML documents.

urllib2: A Python module that provides an interface for fetching data from URLs.

Selenium: A tool for automating web browsers, typically used for testing purposes.

lxml: A Python library for parsing and processing XML documents.

Read More: Web Crawling with Python

How to Automate Web Scraping Using Python Scripts and Spiders?

Once you have the necessary Python scripts and spiders, you can successfully start to scrape websites for data. Here are the simple 5 steps to follow:

1. Choose the website that you want to scrape data from.

2. Find the data that you want to scrape. This data can be in the form of text, images, or other elements.

3. Write a Python script that will extract this data. To make this process easier, you can use a web scraping library, such as Scrapy or Beautiful Soup

4. Run your Python script from the command line. This will start the spider and begin extracting data from the website.

5. The data will be saved to a file, which you can then open in a spreadsheet or document.

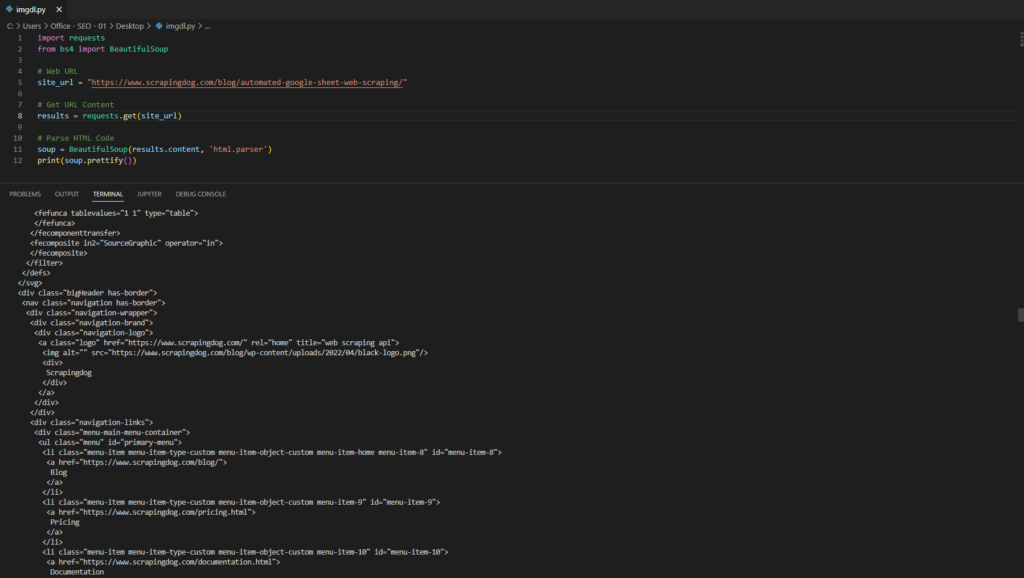

For example, here is a basic Python script:

import requests from bs4 import BeautifulSoup # Web URL site_url = "https://www.scrapingdog.com/blog/automated-google-sheet-web-scraping/" # Get URL Content results = requests.get(site_url) # Parse HTML Code soup = BeautifulSoup(results.content, 'html.parser') print(soup.prettify())

When all the steps are done properly, you will get a result like the following:

In this code, we have chosen the blog page of the Scrapingdog website and scraped it for the content on that page. Here BeautifulSoup was used to make the process easier.

Next, we will run the Python script from the command line, and with the help of the following spider, data from the chosen page will be scrapped. The spider being:

from scrapy.spiders import Spider

from scrapy.selector import Selector

class ResultsSpider(Spider):

name = "results"

start_urls = ["https://www.website.com/page"]

def parse(self, response):

sel = Selector(response)

results = sel.xpath('//div[@class="result"]')

for result in results:

yield {

'text': result.xpath('.//p/text()').extract_first()

}

Result

Run Scrappy console to run the spider properly through the webpage.

How does Automated Web Scraping work?

Most web scraping tools access the World Wide Web by using Hypertext Transfer Protocol directly or utilizing a web browser. A bot or web crawler is implemented to automate the process. This web crawler or bot decides how to crawl websites and gather and copy data from a website to a local central database or spreadsheet.

These data gathered by spiders are later extracted to analyze. These data may be parsed, reformatted, searched, copied into spreadsheets, and so on. So, the process involves taking something from a page and repurposing it for another use.

Once you have written your Python script, you can run it from the command line. This will start the spider and begin extracting data from the website. The data will be saved to a file, which you can then open in a spreadsheet or document.

Take Away

Data scraping has immense potential to help anyone with any endeavor. However, it is closer to impossible for one person to gather all the data they need manually. Therefore, automated web scraping tools come into play.

These automated scrapers utilize different programming languages and spiders to get all the necessary data, index them and store them for further analysis. Therefore, a simpler language and an effective web crawler are crucial for web scraping.

Python script and the spider are excellent in this manner. Python is easier to learn, understand, and code. On the other hand, spiders can utilize the search engine algorithm to gather data from almost 40% -70% of online web pages. Thus, whenever one is thinking about web scraping, one should give Python script and spider-based automated web scrapers a chance.

Additional Resources

- A Complete Guide To Web Scraping with Python

- Build a Python Web Crawler

- How To Use A Proxy with Python Requests

- Web Scraping with Xpath & Python: A Beginner-Friendly Tutorial

- Web Scraping with Selenium & Python

- Python Web Scraping Auth. behind OAuth Wall

- Scrape Dynamic Web Pages using Python

- BeutifulSoup Tutorial: Web Scraping

- Know the Best Libraries for Web Scraping with Python