Web scraping is a process of extracting data from websites. It can be done manually, but it is usually automated. Golang is a programming language that is growing in popularity for web scraping. In this article, we will create a web scraper with Go.

Is Golang good for web scraping?

Go or Golang is a good choice for web scraping for several reasons. It is a compiled language, so it runs quickly, and it is also easy to learn.

Go has good support for concurrency which is important for web scraping because multiple pages can be scraped at the same time. It also has good libraries for web scraping.
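To make the concurrency point concrete, here is a minimal sketch of fetching several pages at the same time with goroutines and a sync.WaitGroup. The names fetchAll and fakeFetch are made up for this illustration, and fakeFetch stands in for a real HTTP request so the example runs offline.

```go
package main

import (
	"fmt"
	"sync"
)

// fetchAll calls fetch for every URL in its own goroutine and returns the
// results in the same order as the input slice.
func fetchAll(urls []string, fetch func(string) string) []string {
	results := make([]string, len(urls))
	var wg sync.WaitGroup
	for i, u := range urls {
		wg.Add(1)
		go func(i int, u string) {
			defer wg.Done()
			results[i] = fetch(u) // each goroutine writes only its own slot
		}(i, u)
	}
	wg.Wait() // block until every goroutine has finished
	return results
}

// fakeFetch is a hypothetical stand-in for a real download.
func fakeFetch(url string) string {
	return "body of " + url
}

func main() {
	urls := []string{"https://example.com/page-1", "https://example.com/page-2"}
	for _, body := range fetchAll(urls, fakeFetch) {
		fmt.Println(body)
	}
}
```

In a real scraper you would replace fakeFetch with a function that performs the request, and you would usually cap the number of goroutines so the target site is not flooded.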

Why Golang Web Scraper?

A Go web scraper is a program that extracts data from websites. It can pull data from HTML pages, XML pages, or JSON responses, and for the reasons above Go is a strong default choice for writing one.

Prerequisites/Packages for web scraping with Go

Initializing Project Directory and Installing Colly

To set up your project directory, create a new folder and initialize it with a go.mod file (using go mod init). Then install Colly with the go get command.

Let’s Start Web Scraping with Go (Step by Step)

So, we start by creating a file by the name ghostscraper.go. We are going to use the colly framework for this. It is a very well-written framework and I highly recommend you read its documentation.

To install it we can copy the single line command and throw it in our terminal or our command prompt. It takes a little while and it gets installed.

go get -u github.com/gocolly/colly/...

Now, switch back to your file. We begin with specifying the package, and then we can write our main function.

package main

func main () {

}

Try to run this code just to verify everything is ok.

Now, the first thing we need inside the function is a filename.

package main

func main () {

fName:= "data.csv"

}

Now that we have a file name, we can create a file.

package main

func main () {

fName:= "data.csv"

file, err := os.Create(fName)

}

This will create a file by the name data.csv. Now that we have created a file we need to check for any errors.

If there were any errors during the process, this is how you can catch them.

package main

func main () {

fName:= "data.csv"

file, err := os.Create(fName)

if err != nil {

log.Fatalf("could not create the file, err :%q",err)

return

}

}

log.Fatalf() prints the formatted message and then exits the program with status 1, so the return after it is never actually reached.

The last thing you do with a file is close it.

package main

func main () {

fName:= "data.csv"

file, err := os.Create(fName)

if err != nil {

log.Fatalf("could not create the file, err :%q",err)

return

}

defer file.Close()

}

Here defer is very helpful. Once you write defer, the call that follows it is executed when the surrounding function returns, not right away. So, once we are done working with the file, Go will close it for us. Isn’t that amazing? We don’t have to remember to close the file manually.
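If you want to see defer in action, the following small sketch records the order in which things really run. The function name deferOrder and the event labels are invented for the illustration. It also shows that when several calls are deferred, they run in reverse order.

```go
package main

import "fmt"

// deferOrder records when each step really runs inside a function.
func deferOrder() []string {
	var events []string
	func() {
		defer func() { events = append(events, "first deferred") }()
		defer func() { events = append(events, "second deferred") }()
		events = append(events, "work") // runs before either deferred call
	}()
	return events
}

func main() {
	fmt.Println(deferOrder()) // prints [work second deferred first deferred]
}
```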

Alright, so we have our file ready. If your editor runs Go tooling such as goimports on save, it will add a few things to your code as you hit save.

package main

import (

"log"

"os"

)

func main () {

fName:= "data.csv"

file, err := os.Create(fName)

if err != nil {

log.Fatalf("could not create the file, err :%q",err)

return

}

defer file.Close()

}

The necessary packages have been imported for us. This is really helpful.

The next thing we need is a CSV writer. Whatever data we are fetching from the website, we will write it into a CSV file. For that, we need to have a writer.

package main

import (

"encoding/csv"

"log"

"os"

)

func main () {

fName:= "data.csv"

file, err := os.Create(fName)

if err != nil {

log.Fatalf("could not create the file, err :%q",err)

return

}

defer file.Close()

writer := csv.NewWriter(file)

}

After adding a writer and saving it, go will import another package and that is encoding/csv.

The writer buffers what we write. Once we are done writing, whatever is still sitting in that buffer has to be pushed out to the underlying file. For that, we will use Flush.

package main

import (

"encoding/csv"

"log"

"os"

)

func main () {

fName:= "data.csv"

file, err := os.Create(fName)

if err != nil {

log.Fatalf("could not create the file, err :%q",err)

return

}

defer file.Close()

writer := csv.NewWriter(file)

defer writer.Flush()

}

But again, this has to happen at the end and not right away, so we add the keyword defer. Deferred calls run in reverse order, which means writer.Flush() runs before file.Close().
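To see why Flush matters, here is a small sketch that writes one row into an in-memory buffer instead of a file and checks what has arrived with and without flushing. The helper writeRow and the column names are made up for the example.

```go
package main

import (
	"bytes"
	"encoding/csv"
	"fmt"
)

// writeRow writes one record through a csv.Writer and returns whatever has
// reached the underlying buffer, optionally calling Flush first.
func writeRow(flush bool) (string, error) {
	var buf bytes.Buffer
	w := csv.NewWriter(&buf)
	if err := w.Write([]string{"title", "stipend"}); err != nil {
		return "", err
	}
	if flush {
		w.Flush()
		// Flush does not return an error; Error reports any write failure.
		if err := w.Error(); err != nil {
			return "", err
		}
	}
	return buf.String(), nil
}

func main() {
	before, _ := writeRow(false)
	after, _ := writeRow(true)
	fmt.Printf("without Flush: %q\n", before) // without Flush: ""
	fmt.Printf("with Flush:    %q\n", after)  // with Flush:    "title,stipend\n"
}
```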

So, now we have our file structures and a writer ready. Now, we can get our hands dirty with web scraping.

So, we will start by instantiating a collector.

package main

import (

"github.com/gocolly/colly"

"encoding/csv"

"log"

"os"

)

func main () {

fName:= "data.csv"

file, err := os.Create(fName)

if err != nil {

log.Fatalf("could not create the file, err :%q",err)

return

}

defer file.Close()

writer := csv.NewWriter(file)

defer writer.Flush()

c := colly.NewCollector(

colly.AllowedDomains("internshala.com"),

)

}

Go has also imported colly for us. We have also specified what domains we are working with. We will scrape Internshala (It provides a platform for companies to post internships).

Next, we need to point to the web page from which we will fetch the data. Here is how we are going to do that. We will fetch internships from this page.

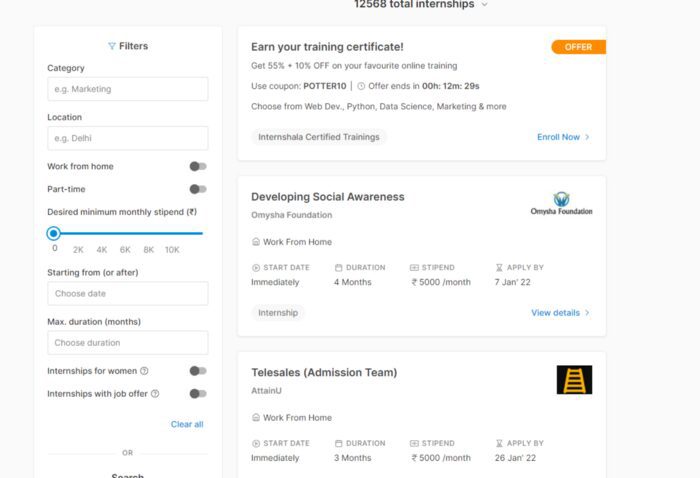

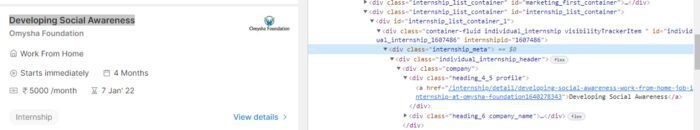

We are interested in the internships listed here, and we will scrape every individual internship on the page. If you inspect the page, you will find that internship_meta is the class of our target elements.

package main

import (

"github.com/gocolly/colly"

"encoding/csv"

"log"

"os"

)

func main () {

fName:= "data.csv"

file, err := os.Create(fName)

if err != nil {

log.Fatalf("could not create the file, err :%q",err)

return

}

defer file.Close()

writer := csv.NewWriter(file)

defer writer.Flush()

c := colly.NewCollector(

colly.AllowedDomains("internshala.com"),

)

c.OnHTML(".internship_meta", func(e *colly.HTMLElement) {

// Write one CSV row per internship: the link text and the stipend text.

writer.Write([]string{

e.ChildText("a"),

e.ChildText("span"),

})

})

}

OnHTML registers a callback that runs for every element matching the .internship_meta selector, and e is a pointer to the matched HTML element. Inside the callback we write the data into our CSV file. writer.Write takes a slice of strings and writes it as one row.

We need to specify precisely what we need. ChildText returns the concatenated, whitespace-trimmed text of the child elements that match a selector. We pass a to get the text of the a tags inside each internship. The entries of the slice are separated by commas, and each entry becomes one column of the CSV row. We also need the ChildText of the span tag to get the stipend amount a company is offering.
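Scraped text often contains commas of its own, so it helps to know what the CSV writer does with them. The sketch below is only an illustration: encodeRow is a made-up helper and the sample values are invented, not taken from the site.

```go
package main

import (
	"bytes"
	"encoding/csv"
	"fmt"
)

// encodeRow returns the CSV line that csv.Writer produces for one record.
func encodeRow(fields []string) (string, error) {
	var buf bytes.Buffer
	w := csv.NewWriter(&buf)
	if err := w.Write(fields); err != nil {
		return "", err
	}
	w.Flush()
	return buf.String(), w.Error()
}

func main() {
	// Invented sample values; both fields contain a comma.
	line, err := encodeRow([]string{"Web Development, Remote", "5,000 /month"})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Print(line) // prints "Web Development, Remote","5,000 /month"
}
```

Fields that contain a comma are wrapped in double quotes, so each element of the slice still ends up in its own column when the file is opened later.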

So, what we have basically done is, earlier we created a collector from colly and after that, we pointed to the web structure and specified what we needed from the web page.

So, the next thing is we need to visit this website and fetch all the data. Also, we have to do it for all the pages. You can find the total pages at the bottom of the page. Right now I have like 330 pages on that website. We will use the famous for loop here.

package main

import (

"encoding/csv"

"fmt"

"log"

"os"

"strconv"

"github.com/gocolly/colly"

)

func main () {

fName:= "data.csv"

file, err := os.Create(fName)

if err != nil {

log.Fatalf("could not create the file, err :%q",err)

return

}

defer file.Close()

writer := csv.NewWriter(file)

defer writer.Flush()

c := colly.NewCollector(

colly.AllowedDomains("internshala.com"),

)

c.OnHTML(".internship_meta", func(e *colly.HTMLElement) {

// Write one CSV row per internship: the link text and the stipend text.

writer.Write([]string{

e.ChildText("a"),

e.ChildText("span"),

})

})

for i := 0; i < 330; i++ {

fmt.Printf("Scraping Page : %d\n",i)

c.Visit("https://internshala.com/internships/page-"+strconv.Itoa(i))

}

log.Printf("Scraping Complete\n")

log.Println(c)

}

First, we print a message saying which page is being scraped. Then the script visits the target page. Since there are 330 pages, we convert i to a string with strconv.Itoa and append it to the target URL. Finally, we log the collector itself, which prints a summary of the work colly has done.
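The URL building step can be looked at in isolation. In this sketch the helper name pageURL and the constant baseURL are introduced only for illustration; the URL prefix is the one used in the loop above. strconv.Itoa is needed because a plain string(i) conversion would treat the number as a character code instead of producing its decimal digits.

```go
package main

import (
	"fmt"
	"strconv"
)

// baseURL is the listing URL without the page number.
const baseURL = "https://internshala.com/internships/page-"

// pageURL returns the listing URL for the given page number.
func pageURL(page int) string {
	return baseURL + strconv.Itoa(page)
}

func main() {
	for i := 1; i <= 3; i++ {
		fmt.Println(pageURL(i))
	}
}
```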

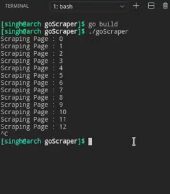

Let’s build it then. You just have to type go build on the terminal.

go build

The command prints nothing, but it creates an executable named goscraper for us, and we can run that.

To run it, type ./goscraper (you can press Tab to auto-complete the name).

./goscraper

It will start scraping the pages.

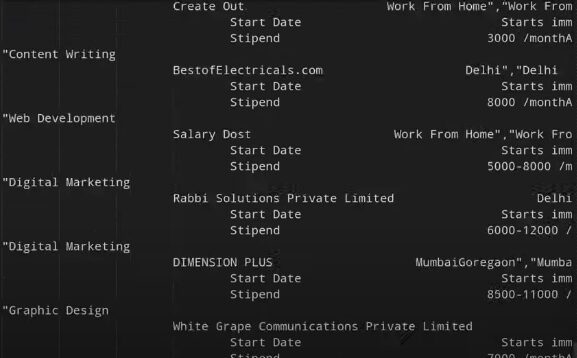

I stopped the scraper partway through because I don’t want to scrape all the pages. Now, if you look at the file data.csv that our program created, it will look like the one below.

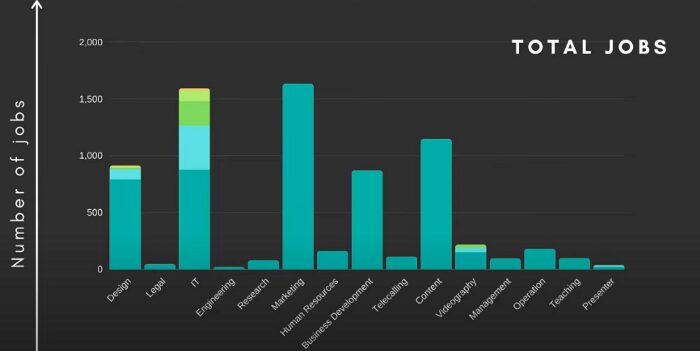

That is it. Your basic Go scraper is ready. If you want cleaner, more readable output, you can post-process the scraped text with regular expressions. I have also created a graph of the number of jobs vs. job sectors. I leave this activity for you as homework.
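As a starting point for the regex cleanup, here is a minimal sketch that collapses runs of whitespace in a scraped field. It assumes the raw text contains stray newlines, tabs, and repeated spaces; cleanField is a made-up helper and the sample string is invented.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// spaces matches one or more whitespace characters (spaces, tabs, newlines).
var spaces = regexp.MustCompile(`\s+`)

// cleanField collapses every run of whitespace into a single space and
// trims the ends.
func cleanField(s string) string {
	return strings.TrimSpace(spaces.ReplaceAllString(s, " "))
}

func main() {
	raw := "  Web\n\t Development   Intern "
	fmt.Printf("%q\n", cleanField(raw)) // prints "Web Development Intern"
}
```

You could call a helper like this on each value before passing the slice to writer.Write.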

What’s Next? Advanced Topics in Web Scraping with Go

This section will discuss some advanced topics in web scraping with Go.

Pagination

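Pagination is when a website splits its content across multiple pages. Instead of hard-coding page numbers, a scraper can look for the "next page" link on each page and follow it. In Colly this is typically an OnHTML callback on that link which calls e.Request.Visit with its href, and in goquery the link can be located with Find.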
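The idea of following next-page links can be sketched without any scraping library. In the example below, followPages and the links map are hypothetical: the map stands in for "find the next link on this page", which in a real scraper would come from parsing the HTML.

```go
package main

import "fmt"

// followPages starts at first and keeps asking next for the following page
// until there is none, a page repeats, or limit pages have been visited.
func followPages(first string, limit int, next func(string) (string, bool)) []string {
	var visited []string
	seen := make(map[string]bool)
	for url, ok := first, true; ok && !seen[url] && len(visited) < limit; url, ok = next(url) {
		seen[url] = true
		visited = append(visited, url)
	}
	return visited
}

func main() {
	// Hypothetical site: each page maps to the page its "next" link points at.
	links := map[string]string{
		"/page-1": "/page-2",
		"/page-2": "/page-3",
	}
	next := func(url string) (string, bool) {
		n, ok := links[url]
		return n, ok
	}
	fmt.Println(followPages("/page-1", 100, next)) // prints [/page-1 /page-2 /page-3]
}
```

The seen map and the limit are there so that a site whose last page links back to the first one cannot keep the scraper running forever.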

Cookies

Cookies are often used to track user data and keep sessions alive. The server sets them with the Set-Cookie response header, and the client sends them back in the Cookie header of later requests.
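Here is a minimal sketch of that round trip using only the standard library. It starts a local test server, so nothing leaves your machine. The function name cookieRoundTrip, the cookie name session, and the value abc123 are all made up for the example.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/cookiejar"
	"net/http/httptest"
)

// cookieRoundTrip makes two requests to a local test server with a
// cookie-aware client and returns the cookie value the server received on
// the second request.
func cookieRoundTrip() (string, error) {
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if c, err := r.Cookie("session"); err == nil {
			fmt.Fprint(w, c.Value) // the client sent the cookie back
			return
		}
		http.SetCookie(w, &http.Cookie{Name: "session", Value: "abc123"})
	}))
	defer srv.Close()

	jar, err := cookiejar.New(nil)
	if err != nil {
		return "", err
	}
	client := &http.Client{Jar: jar}

	// First request: the server answers with Set-Cookie and the jar stores it.
	resp, err := client.Get(srv.URL)
	if err != nil {
		return "", err
	}
	resp.Body.Close()

	// Second request: the jar attaches the Cookie header automatically.
	resp, err = client.Get(srv.URL)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	value, err := cookieRoundTrip()
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("server saw cookie:", value)
}
```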

User-Agents

User agents are used to identify the browser or program making the request. They can be set in the request headers.
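The sketch below sets a custom User-Agent header with the standard net/http package and checks what a local test server receives. The function name seenUserAgent and the agent string my-scraper/1.0 are invented for the illustration. Colly also lets you configure the user agent on the collector; check its documentation for the exact option.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// seenUserAgent sends one request with the given User-Agent header to a
// local test server and returns the value the server saw.
func seenUserAgent(ua string) (string, error) {
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, r.UserAgent()) // echo the header back in the body
	}))
	defer srv.Close()

	req, err := http.NewRequest(http.MethodGet, srv.URL, nil)
	if err != nil {
		return "", err
	}
	req.Header.Set("User-Agent", ua)

	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	ua, err := seenUserAgent("my-scraper/1.0")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("server saw:", ua)
}
```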

Conclusion

This tutorial walked through scraping a website with Go and the open-source Colly library. If you followed along, you were able to create a basic scraper with Go to crawl a page or two.

While this was an introductory article, we covered most methods you can use with the libraries. You may choose to build on this knowledge and create complex web scrapers that can crawl thousands of pages.

Feel free to message us to inquire about anything you need clarification on.

Additional Resources

Here are a few additional resources that you may find helpful during your web scraping journey: