Web scraping, as the name suggests, is the process of extracting data from a source on the internet. Given the many use cases and strong market demand, there are a number of web scraping tools available, each with different capabilities and functionality.

I have been web scraping for the past 8 years and have vast experience in this domain. Over these years, I have tried and tested many web scraping tools (and have finally built one myself, too).

In this blog, I have handpicked some of the best web scraping tools, tested them separately, and ranked them.

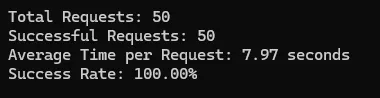

I tested each API on Amazon, Google, and Idealista. Specifically, I checked the success rate, pricing, documentation design, and response time, and I present the results at the very end.

Let’s Jump in!!

Testing Script

I am going to use this Python code to test all the products in the list.

We will test these APIs on Amazon, Google, and Idealista, and calculate their success rate, average response time, and per-request credit cost.

In total, 50 requests will be made to each API for each domain. This study will give you a clear hint for selecting a web scraping API when starting any data-related project.

import random
import time

import requests

# Target URLs for Amazon and Idealista, and random search words for Google
search_terms_amazon = ["https://www.amazon.com/dp/B0CTKXMQXK", "https://www.amazon.com/dp/B0D1ZFS9GH", "https://www.amazon.com/dp/B0CXG3HMX1", "https://www.amazon.com/dp/B0CKM9JVJB/", "https://www.amazon.com/dp/B0BYX1XT81"]

search_terms_idealista = ['https://www.idealista.com/venta-viviendas/torrelavega/inmobiliaria-barreda/','https://www.idealista.com/venta-viviendas/torrelavega/inmobiliaria-barreda/','https://www.idealista.com/venta-viviendas/torrelavega/inmobiliaria-barreda/','https://www.idealista.com/venta-viviendas/torrelavega/inmobiliaria-barreda/','https://www.idealista.com/alquiler-viviendas/malaga-malaga/con-solo-pisos%2Caticos/']

search_terms_google = [
    "pizza", "burger", "sushi", "coffee", "tacos", "salad", "pasta", "steak",
    "sandwich", "noodles", "bbq", "dumplings", "shawarma", "falafel",
    "pancakes", "waffles", "curry", "soup", "kebab", "ramen"
]

# Select the list that matches the domain under test
search_terms = search_terms_google

# Replace with the URL of the API under test; it must contain a {query} placeholder
base_url = "Your-API-URL"

total_requests = 50
success_count = 0
total_time = 0

for i in range(total_requests):
    # Pick a random search term from the list
    search_term = random.choice(search_terms)
    try:
        url = base_url.format(query=search_term)

        start_time = time.time()  # Record the start time
        response = requests.get(url)
        end_time = time.time()  # Record the end time

        # Calculate the time taken for this request
        request_time = end_time - start_time
        total_time += request_time

        # Check if the request was successful (status code 200)
        if response.status_code == 200:
            success_count += 1

        print(f"Request {i+1} with search term '{search_term}' took {request_time:.2f} seconds, Status: {response.status_code}")
    except Exception as e:
        print(f"Request {i+1} with search term '{search_term}' failed due to {str(e)}")

# Calculate the average time taken per request and the success rate
average_time = total_time / total_requests
success_rate = (success_count / total_requests) * 100

# Print the results
print(f"\nTotal Requests: {total_requests}")
print(f"Successful Requests: {success_count}")
print(f"Average Time per Request: {average_time:.2f} seconds")
print(f"Success Rate: {success_rate:.2f}%")
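Since the same loop has to run for every API and every domain, it can help to wrap it in a function. The sketch below is one possible way to do that, not the exact harness used for this article: `run_benchmark`, `http_status`, the `fetch` parameter, and the 60-second timeout are all hypothetical choices made for illustration. A failed request (exception or non-200 status) still counts toward the total, mirroring the script above.

```python
import random
import time
from typing import Callable, Sequence


def http_status(url: str) -> int:
    """Default fetcher: GET the URL and return its HTTP status code."""
    import requests  # imported lazily so the harness can be exercised offline

    return requests.get(url, timeout=60).status_code  # timeout is an assumption


def run_benchmark(
    base_url: str,
    targets: Sequence[str],
    total_requests: int = 50,
    fetch: Callable[[str], int] = http_status,
) -> dict:
    """Send `total_requests` requests and return success rate and average time."""
    success_count = 0
    total_time = 0.0
    for _ in range(total_requests):
        # base_url must contain a {query} placeholder, as in the script above
        url = base_url.format(query=random.choice(targets))
        start = time.perf_counter()
        try:
            status = fetch(url)
        except Exception:
            status = None  # network errors count as failed requests
        total_time += time.perf_counter() - start
        if status == 200:
            success_count += 1
    return {
        "success_rate": success_count / total_requests * 100,
        "average_time": total_time / total_requests,
    }


if __name__ == "__main__":
    # Stand-in fetcher that always "succeeds", so no API key or network is needed.
    stats = run_benchmark(
        "https://api.example.com/?url={query}",  # placeholder endpoint
        ["pizza", "burger"],
        total_requests=5,
        fetch=lambda url: 200,
    )
    print(stats)
```

With a real provider, you would pass its endpoint as `base_url` and one of the three target lists as `targets`, leaving `fetch` at its default.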

List of All Web Scraping Tools

Scrapingdog

- Scrapingdog’s web scraping API provides a general scraper that can scrape any website and returns the raw HTML of the page. It also provides dedicated APIs for scraping Amazon, Google, X, Instagram, Indeed, etc. The dedicated APIs return parsed JSON data.

- Once you sign up, you get 1000 free credits, which is more than enough for testing any API. Note that the credit cost of each API is different.

- The dashboard is designed so that even a non-developer can scrape the data. The documentation is user-friendly, and integrating their APIs is super simple.

- The cost per request is around $0.000067 on their PRO plan, and it goes down as volume increases.

- Customer support is very active, and you will get a response within a few minutes.

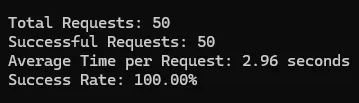

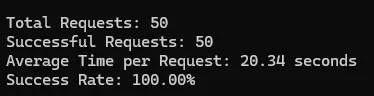

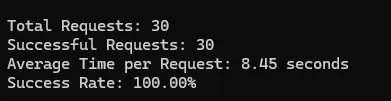

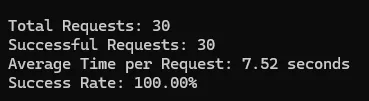

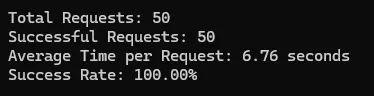

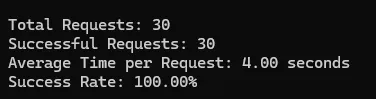

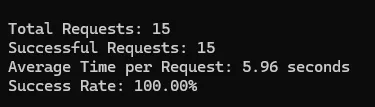

Test Results of Scrapingdog

I tested Scrapingdog on Idealista, Amazon, and Google, and here are the test results.

Amazon

Idealista

ScraperAPI

- ScraperAPI also provides a robust general web scraping API along with dedicated APIs for Google, Amazon, etc.

- The free trial comes with a generous 5000 credits. ScraperAPI also charges different credit costs for different websites, so the pricing varies accordingly.

- You can easily integrate the API into your production environment. You can even test the API directly from the dashboard.

- The cost per request is around $0.0000997 on their Business plan, and it goes down as volume increases.

- They do not offer a chat widget, but you can email them to resolve your queries.

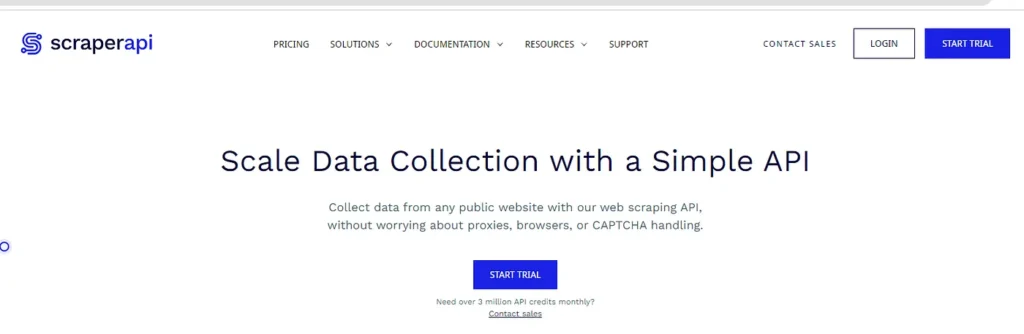

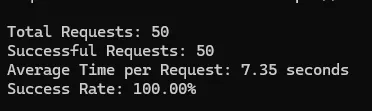

Test Results of ScraperAPI

Amazon

Idealista

Scrapingbee

- Scrapingbee provides scraping APIs that return raw HTML data, plus parsed JSON data through its dedicated APIs. However, it offers a dedicated API for Google only.

- The trial pack will provide you with 1000 credits.

- Documentation is very clear and developers can easily integrate the API in any working environment.

- Per API credit cost is around $0.000083 in their Business pack.

- You can contact them via chat widget or email.

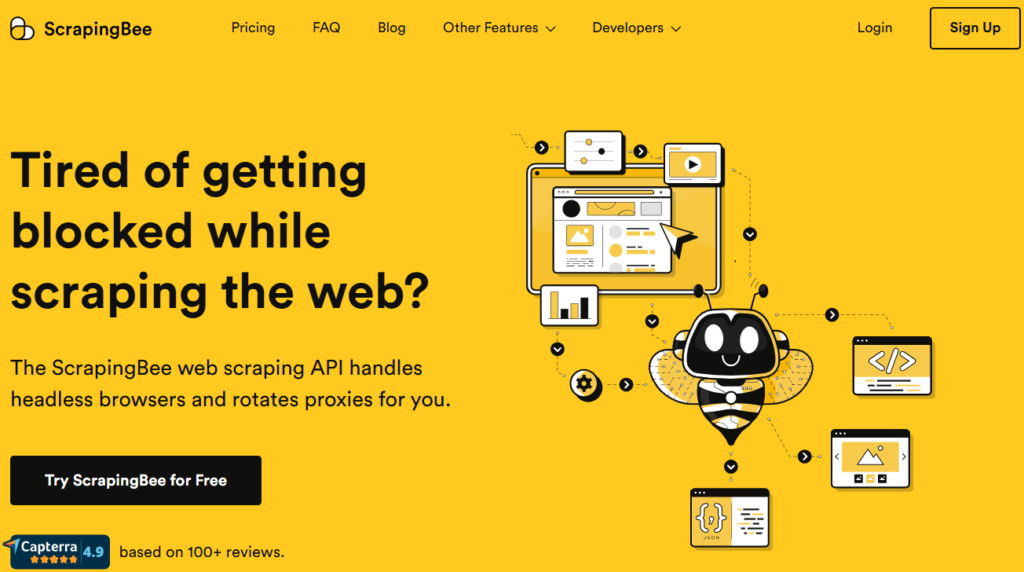

Test Results of Scrapingbee

Amazon

Idealista

Zenrows

- Zenrows is in the web scraping proxy business but also provides web scraping APIs.

- They provide $1 worth of free credits when you sign up. However, they do not follow a credit system, so analyzing the per-request credit cost is difficult.

- Documentation is quite informative; any developer could integrate their proxy or API.

- Per API credit cost is $0.00008.

- Both email and chat support are available on the website.

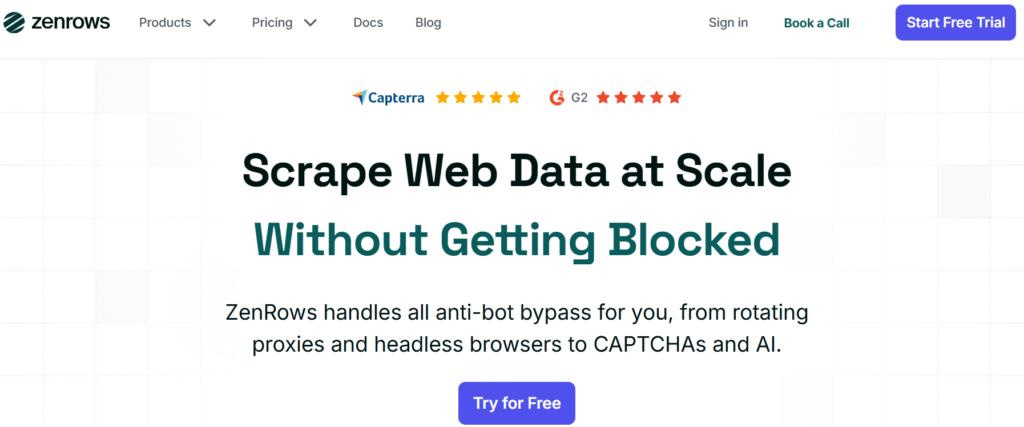

Test Results of Zenrows

Amazon

Idealista

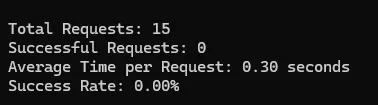

Scrape.do

- Scrape.do is a great web scraping API provider. They do not provide dedicated APIs, only a general web scraping API that returns raw HTML data.

- The free pack provides 1000 credits.

- Again, the documentation is a masterpiece, and anybody can integrate the API.

- Per API credit cost is around $0.000071 in their Business pack.

- They respond quickly on chat and emails.

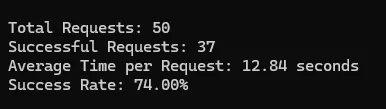

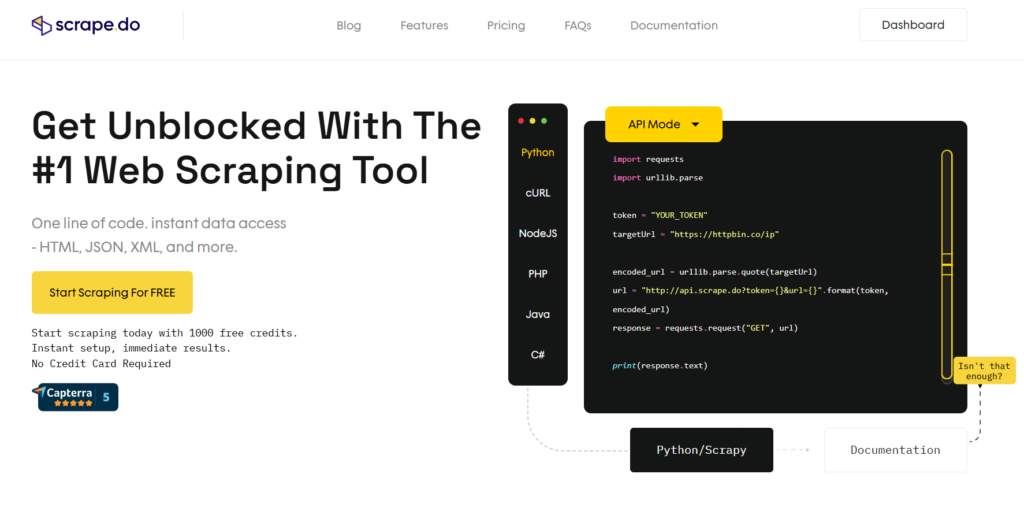

Test Results of Scrape.do

Amazon

Idealista

Final Verdict

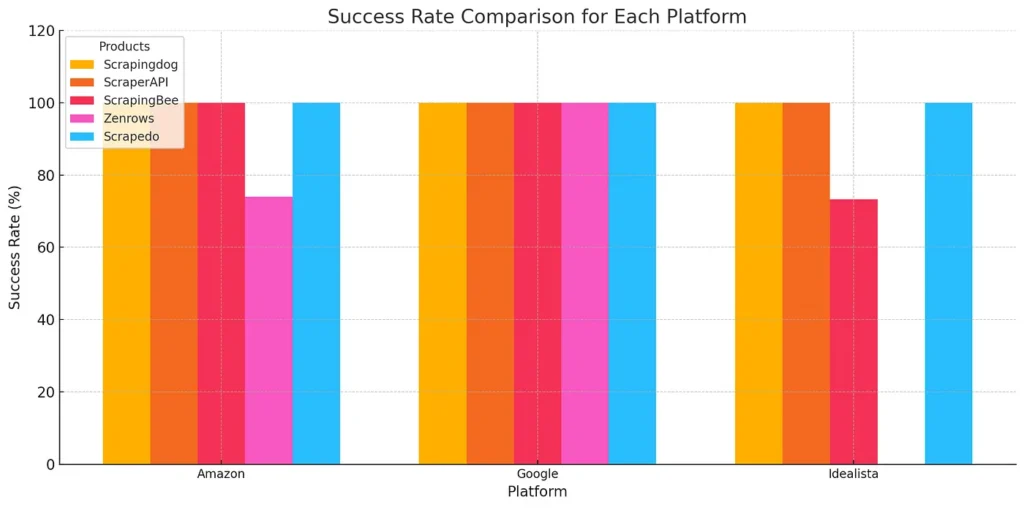

Here, Scrapingdog and ScraperAPI outperform the other APIs.

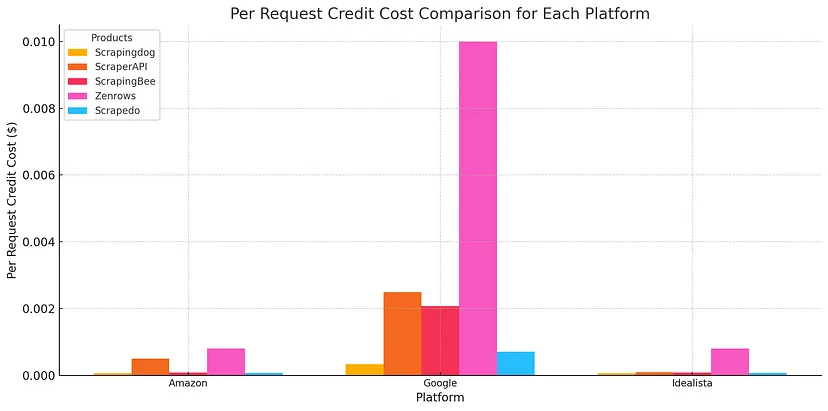

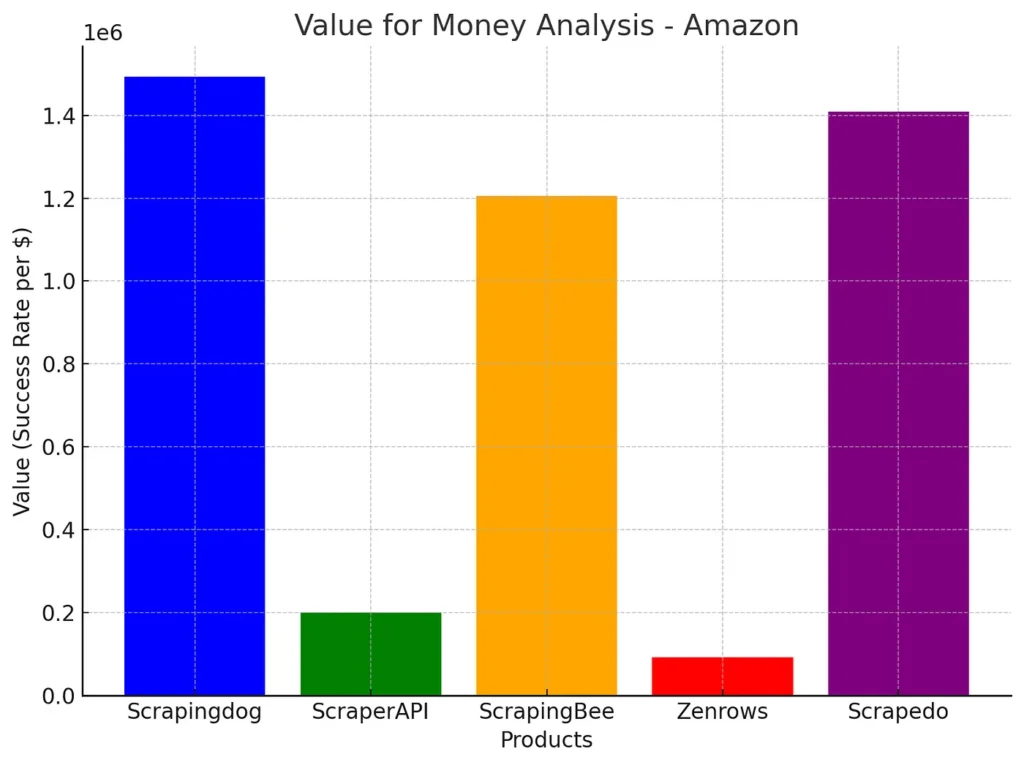

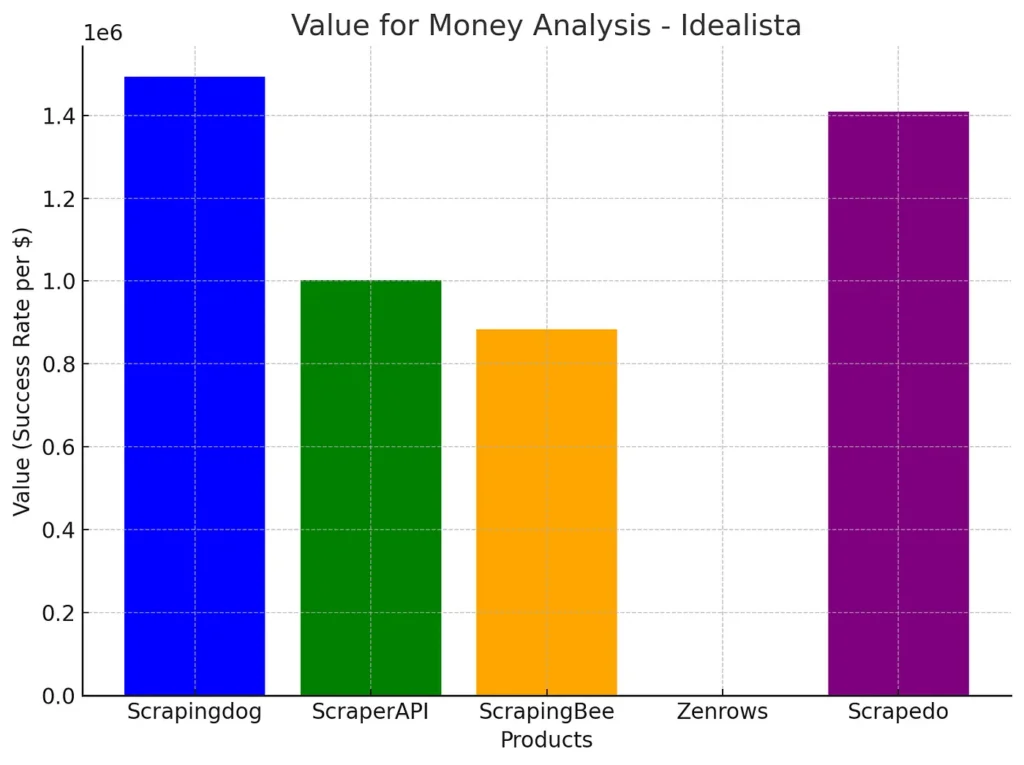

Scrapingdog, Scrapingbee, and Scrape.do offer economical web scraping solutions. For Google, Scrapingdog offers the most economical solution.

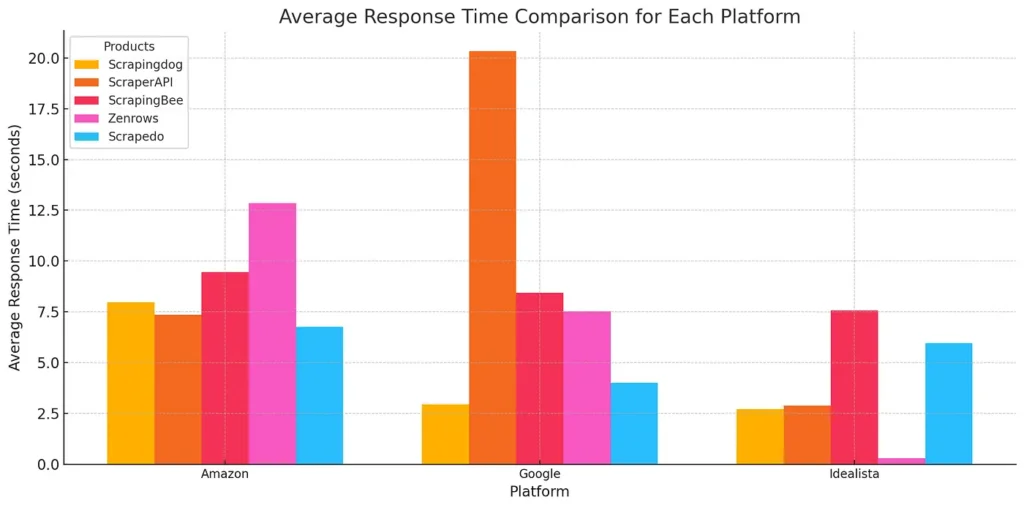

In the case of Amazon, almost all the APIs show a similar average response time, but Scrape.do is faster here.

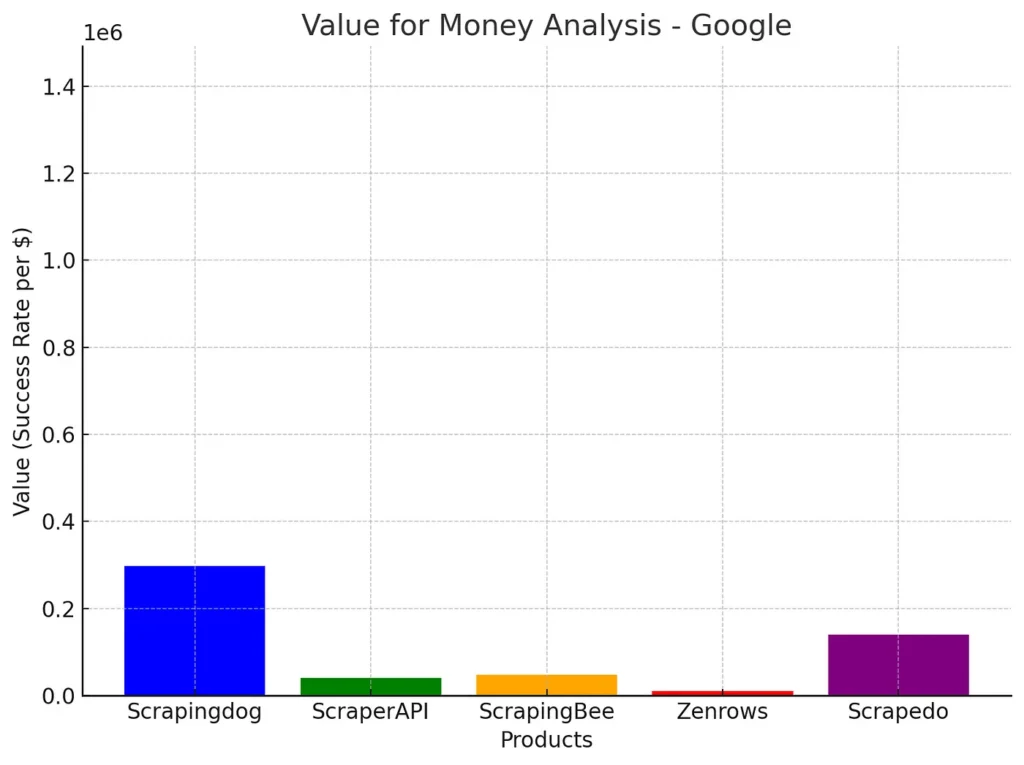

ScraperAPI is very slow when it comes to Google, while Scrapingdog and Scrape.do outperform all the other web scrapers.

For Idealista, there is a catch: Zenrows was unable to scrape this domain, so it cannot be considered the fastest. Other than that, Scrapingdog and ScraperAPI produced the fastest results.
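The Idealista caveat can be made explicit when ranking by speed: an API only qualifies as "fastest" if it actually returned data for that domain. Here is a minimal sketch of that rule, assuming the results are stored as dictionaries. The `fastest_successful` helper, the `min_success_rate` threshold, and the numbers in the example are illustrative placeholders, not the measurements from this test.

```python
def fastest_successful(results, min_success_rate=1.0):
    """Rank APIs by average response time, skipping those that failed the domain.

    `results` maps an API name to
    {"success_rate": percent, "average_time": seconds}.
    """
    eligible = {
        name: stats
        for name, stats in results.items()
        if stats["success_rate"] >= min_success_rate
    }
    # Lowest average time first
    return sorted(eligible, key=lambda name: eligible[name]["average_time"])


# Placeholder numbers for illustration only (not measured values).
example = {
    "api_a": {"success_rate": 100.0, "average_time": 4.2},
    "api_b": {"success_rate": 0.0, "average_time": 1.1},  # quick, but every request failed
    "api_c": {"success_rate": 96.0, "average_time": 3.5},
}
print(fastest_successful(example))  # ['api_c', 'api_a']
```

Here `api_b` has the lowest response time but is excluded, because a fast failure is not a useful result.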

Value for Money Product (For Scraping Amazon Only)

Value for Money Product (For Scraping Google Only)

Scrapingdog offers the best value, followed by Scrape.do and Scrapingbee.

Value for Money Product (For Scraping Idealista Only)

Conclusion

There are many other web scraping APIs; however, to keep the article concise and informative, we limited it to the best of what we found.

The comparisons here are meant for you to dig into when you scale a scraping process. On the surface, each API mentioned here would work just as well.

If you scale the process to millions of requests, the stats given here should help you choose according to your needs.
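To get a feel for what the prices quoted in the tool sections mean at that scale, the arithmetic can be sketched as follows. This treats each quoted figure as a price per credit on the plan named in that section, which is an assumption (Zenrows, for instance, does not use a credit system). `credits_per_request` is a hypothetical parameter, since the number of credits a request consumes differs per provider and per website.

```python
# Prices in USD, as quoted in the tool sections of this article.
PRICE_PER_CREDIT = {
    "Scrapingdog": 0.000067,
    "ScraperAPI": 0.0000997,
    "Scrapingbee": 0.000083,
    "Zenrows": 0.00008,
    "Scrape.do": 0.000071,
}


def projected_cost(api, requests_count, credits_per_request=1):
    """Estimate spend in USD: requests x credits per request x price per credit."""
    return requests_count * credits_per_request * PRICE_PER_CREDIT[api]


# Compare the providers at one million requests, cheapest first,
# assuming each request consumes a single credit.
for api in sorted(PRICE_PER_CREDIT, key=PRICE_PER_CREDIT.get):
    cost = projected_cost(api, 1_000_000)
    print(f"{api}: ${cost:,.2f} per million 1-credit requests")
```

For a real estimate, replace `credits_per_request` with the value each provider charges for your target site, because that multiplier can change the ranking.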

Happy Scraping!!