Web Scraping is incomplete without data extraction from the raw HTML or XML you get from the target website.

When it comes to web scraping, Python is one of the most popular choices among programmers because it has great community support and is very easy to code in. It is also readable, without the semicolons and curly braces that many other languages require.

Python also has many libraries that help in different aspects of any web application. One of them is Beautiful Soup, which is mainly used in web scraping projects. Let’s understand what it is and how it works.

What is BeautifulSoup?

Beautiful Soup is a Python library named after the Lewis Carroll poem of the same name in “Alice’s Adventures in Wonderland”. The current version is commonly known as BS4. BS4 is used to navigate and extract data from HTML and XML documents.

Since it is not part of the Python standard library, you have to install it before you can use it in your project. I am assuming that you have already installed Python on your machine.

pip install beautifulsoup4

Now that it is installed, let’s test it with a small example. We will use Python’s requests library (install it with pip install requests if you do not have it) to make an HTTP GET request to the target website. In this case, we will use Scrapingdog as our target page, but you can select any web page you like.

Getting the HTML

Before we use BS4, let’s make a GET request to our target website to get the raw HTML.

Our eventual aim is to get the title of the website.

from bs4 import BeautifulSoup

import requests

target_url = "https://www.scrapingdog.com/"

resp = requests.get(target_url)

print(resp.text)

Output of the above script will look like this.

<!DOCTYPE html>

<html lang="en">

<head>....

We got the complete HTML data from our target website.
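In a real project the request can fail or return an error page, so it may help to wrap the download step in a small helper. The following is a minimal sketch; the function name fetch_html and the 10-second timeout are assumptions made for illustration, not part of the original script.

```python
import requests


def fetch_html(url, timeout=10):
    """Return the HTML body of `url` (hypothetical helper for this tutorial)."""
    # A timeout keeps the script from hanging forever on a slow server.
    resp = requests.get(url, timeout=timeout)
    # Raise requests.HTTPError for 4xx/5xx responses instead of
    # silently returning an error page.
    resp.raise_for_status()
    return resp.text


# Usage (performs a real network request):
# html = fetch_html("https://www.scrapingdog.com/")
# print(html[:200])
```

Calling raise_for_status() early means a blocked or missing page shows up as an exception rather than as confusing parsing results later.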

Parsing HTML with BeautifulSoup

Now, we have the raw HTML data. This is where BS4 comes into action. We will kick start this tutorial by first scraping the title of the page and then by extracting all the URLs present on the page.

Get the Title

from bs4 import BeautifulSoup

import requests

target_url = "https://www.scrapingdog.com/"

resp = requests.get(target_url)

soup = BeautifulSoup(resp.text, 'html.parser')

print(soup.title)

Output of this script will look like this.

<title>Web Scraping API | Reliable & Fast</title>
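Printing soup.title gives the whole tag, including the markup. If only the text is needed, the .string attribute can be used. Here is a small offline sketch; the HTML string is a made-up stand-in for resp.text so that the example runs without a network connection.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for resp.text so the example runs offline.
html = "<html><head><title>Example Page</title></head><body></body></html>"
soup = BeautifulSoup(html, "html.parser")

title_tag = soup.title          # the whole <title> Tag object
title_text = soup.title.string  # only the text inside the tag

print(title_tag)   # <title>Example Page</title>
print(title_text)  # Example Page
```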

Let’s try one more example to better understand how BS4 works. This time let’s scrape all the URLs available on our target page.

Getting all the Urls

Here we will use the .get() method provided by BS4 to extract the value of an attribute, in this case href.

from bs4 import BeautifulSoup

import requests

target_url = "https://www.scrapingdog.com/"

resp = requests.get(target_url)

soup = BeautifulSoup(resp.text, "html.parser")

allUrls = soup.find_all("a")

for link in allUrls:

    print(link.get('href'))

Output of the script will look like this.

/

blog

pricing

documentation

https://share.hsforms.com/1ex4xYy1pTt6rrqFlRAquwQ4h1b2

https://api.scrapingdog.com/login

https://api.scrapingdog.com/register

javascript://

/

javascript://

blog

pricing

documentation

https://share.hsforms.com/1ex4xYy1pTt6rrqFlRAquwQ4h1b2

https://api.scrapingdog.com/login

https://api.scrapingdog.com/register

https://api.scrapingdog.com/register

/pricing

asynchronous-scraping-webhook

https://api.scrapingdog.com/register

https://api.scrapingdog.com/register

/documentation#python-linkedinuser

/documentation#python-google-search-api

/documentation#python-proxies

/documentation#python-screenshot

documentation

https://api.scrapingdog.com/register

documentation

https://share.hsforms.com/1ex4xYy1pTt6rrqFlRAquwQ4h1b2

tool

about

blog

faq

affiliates

documentation#proxies

documentation#proxies

terms

privacy

https://twitter.com/scrapingdog?ref_src=twsrc%5Etfw

https://www.linkedin.com/company/scrapingdog

This way you can use BS4 to extract different data elements from any web page. I hope you now have an idea of how this library can be used for data extraction.
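The list of links above mixes relative paths, duplicates, and javascript: placeholders. One possible way to clean it up is to resolve every href against the page URL with urljoin from the standard library. This is only a sketch: the HTML snippet is a hypothetical stand-in for the downloaded page, and the filtering rules are assumptions you may want to adapt.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

base_url = "https://www.scrapingdog.com/"
# Hypothetical snippet standing in for the downloaded page.
html = """
<a href="/">Home</a>
<a href="blog">Blog</a>
<a href="javascript://">Menu</a>
<a href="https://api.scrapingdog.com/login">Login</a>
<a href="blog">Blog again</a>
<a>No href</a>
"""
soup = BeautifulSoup(html, "html.parser")

absolute_urls = []
for link in soup.find_all("a", href=True):  # skip <a> tags without href
    href = link["href"]
    if href.startswith("javascript:"):
        continue  # not a navigable URL
    full_url = urljoin(base_url, href)  # resolve relative paths
    if full_url not in absolute_urls:   # drop duplicates, keep order
        absolute_urls.append(full_url)

print(absolute_urls)
```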

BeautifulSoup — Objects

Once you pass an HTML or XML document to the Beautiful Soup constructor, it converts the document into a tree of Python objects. Here are the main object types.

- Comment

- BeautifulSoup

- Tag

- NavigableString

Comments

You might have got an idea from the name itself. A Comment object represents a single comment in the HTML document. It is a special type of NavigableString. Let me show you how it works.

from bs4 import BeautifulSoup

soup=BeautifulSoup('<div><!-- This is the comment section --></div>','html.parser')

print(soup.div)

The output of the above script will look like this.

<div><!-- This is the comment section --></div>
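To see the Comment object itself rather than the enclosing div, you can read the tag's .string attribute or search the tree for comment nodes. A short sketch using the same kind of markup:

```python
from bs4 import BeautifulSoup, Comment

soup = BeautifulSoup(
    "<div><!-- This is the comment section --></div>", "html.parser"
)

# The comment is the only child of the <div>, so .string returns it.
comment = soup.div.string
print(type(comment))    # <class 'bs4.element.Comment'>
print(comment.strip())  # This is the comment section

# Collect every comment in the document, wherever it appears.
all_comments = soup.find_all(string=lambda text: isinstance(text, Comment))
print(len(all_comments))  # 1
```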

BeautifulSoup

This object represents the complete parsed document. It is what the BeautifulSoup constructor returns when we pass it the HTML of a scraped web page.

from bs4 import BeautifulSoup

soup=BeautifulSoup('<div>This is BS4</div>','html.parser')

print(type(soup))

<class 'bs4.BeautifulSoup'>

Tag

A Tag object corresponds to an HTML or XML tag in the original document.

from bs4 import BeautifulSoup

soup=BeautifulSoup('<div>This is BS4</div>','html.parser')

print(type(soup.div))

<class 'bs4.element.Tag'>

A tag has two important features.

- Name: It can be accessed with the .name attribute. It returns the type of tag, such as div or a.

- Attributes: Any tag object can have any number of attributes. “class”, “href”, “id”, etc. are some common attributes. You can even create your own custom attribute to hold any value. All attributes can be accessed as a dictionary through the .attrs attribute.
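The two features can be illustrated with a short sketch. The anchor tag and its attribute values below are made up for the example.

```python
from bs4 import BeautifulSoup

# Hypothetical tag with standard and custom attributes.
html = '<a id="home" class="nav link" href="/index.html" data-role="menu">Home</a>'
soup = BeautifulSoup(html, "html.parser")
tag = soup.a

print(tag.name)                # a
print(tag["href"])             # /index.html
print(tag["class"])            # ['nav', 'link']
print(tag.attrs["data-role"])  # menu
print(tag.get("title"))        # None, because the attribute is absent
```

Note that class can hold several values, so Beautiful Soup returns it as a list rather than a single string.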

NavigableString

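A NavigableString is the text inside a tag. You can access it with the .string attribute. Note that .text returns a plain Python str rather than a NavigableString.

from bs4 import BeautifulSoup

soup=BeautifulSoup('<div>This is BS4</div>','html.parser')

print(type(soup.div.string))

<class 'bs4.element.NavigableString'>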
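The difference between .string and the text helpers becomes visible once a tag has nested children. In this sketch the nested b tag is an assumption added to make that difference show up.

```python
from bs4 import BeautifulSoup, NavigableString

soup = BeautifulSoup("<div>This is <b>BS4</b></div>", "html.parser")

# <b> has exactly one child, so .string returns its NavigableString.
bold_string = soup.b.string
print(isinstance(bold_string, NavigableString))  # True

# <div> has two children (a string and a tag), so .string is None.
div_string = soup.div.string
print(div_string)  # None

# get_text() joins all the text found anywhere below the tag.
div_text = soup.div.get_text()
print(div_text)  # This is BS4
```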

How to search in a parse Tree?

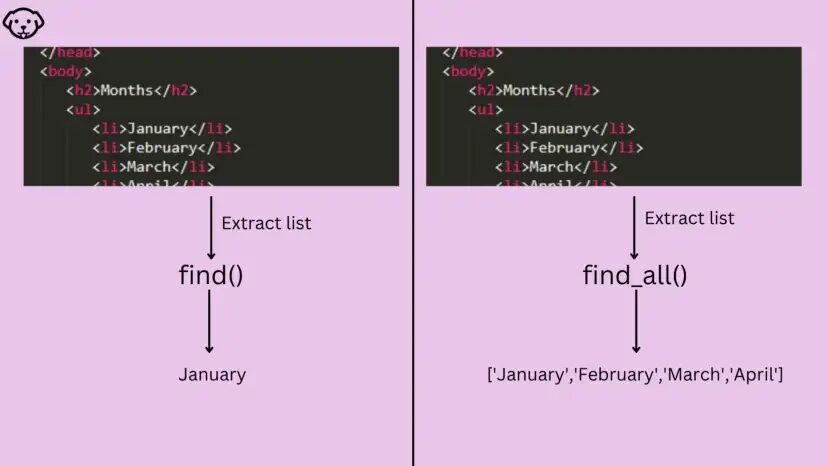

Beautiful Soup offers multiple methods for searching for an element inside a parse tree. The most commonly used ones are find() and find_all(). Let’s understand them one by one.

What is the find() method?

Let’s say you already know that there is only one element with class ‘x’. Then you can use the find() method to find that particular tag. Another use case is when multiple elements have class ‘x’ and you just want the first one.

The find() method will not work if you want the second or third element with the same class name. Let’s understand this with an example.

<div class="test1">test1</div><div class="q">test2</div><div class="z">test3</div>

Take a look at the above HTML code. We have three div tags with different class names. Let’s say I want to extract the “test2” text. I know two things about this situation.

- The test2 text is stored in the div with class q

- Class q is unique. That means there is only one element with the class q.

So, I can use the find() method here to extract the desired text.

from bs4 import BeautifulSoup

soup=BeautifulSoup('<div class="test1">test1</div><div class="q">test2</div><div class="z">test3</div>','html.parser')

data = soup.find("div",{"class":"q"}).text

print(data)

test2

Now let’s consider you have three div tags with the same classes.

<div class="q">test1</div><div class="q">test2</div><div class="q">test3</div>

In this situation, find() cannot scrape “test2”. It can only scrape “test1” because that element comes first in the parse tree. Here you will have to use the find_all() method.
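Two behaviours of find() are worth seeing in code: it returns only the first match, and it returns None when nothing matches. The sketch below uses the class_ keyword, which is an alternative to the {"class": "q"} dictionary form; the class name does-not-exist is made up for the example.

```python
from bs4 import BeautifulSoup

html = '<div class="q">test1</div><div class="q">test2</div>'
soup = BeautifulSoup(html, "html.parser")

# Only the first matching element is returned.
first = soup.find("div", class_="q")
print(first.text)  # test1

# No match: find() returns None instead of raising an error.
missing = soup.find("div", class_="does-not-exist")
print(missing)  # None

# Guard before touching .text to avoid an AttributeError on None.
missing_text = missing.text if missing is not None else ""
```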

What is the find_all() method?

Using the find_all() method you can extract all the elements that match a particular tag and filter. Unlike find(), it lets you reach any matching element, not just the first one. It returns a list-like ResultSet.

Consider this HTML string.

<div class="q">test1</div><div class="q">test2</div><div class="q">test3</div>

If I want to scrape the “test2” text, I can do it by collecting all the div elements at once, since all of them have the same class name, and then picking the second one.

from bs4 import BeautifulSoup

soup=BeautifulSoup('<div class="q">test1</div><div class="q">test2</div><div class="q">test3</div>','html.parser')

data = soup.find_all("div",{"class":"q"})[1].text

print(data)

test2

To get the second element from the list I have used [1]. This is how find_all() method works.
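Instead of indexing a single element, you will often loop over everything find_all() returns. Here is a small sketch on the same HTML string, also showing the limit argument and what happens when there is no match.

```python
from bs4 import BeautifulSoup

html = (
    '<div class="q">test1</div>'
    '<div class="q">test2</div>'
    '<div class="q">test3</div>'
)
soup = BeautifulSoup(html, "html.parser")

# Collect the text of every matching element.
texts = [div.get_text() for div in soup.find_all("div", class_="q")]
print(texts)  # ['test1', 'test2', 'test3']

# limit stops the search after the given number of matches.
first_two = soup.find_all("div", class_="q", limit=2)
print(len(first_two))  # 2

# No match gives an empty result, not None.
no_match = soup.find_all("span")
print(len(no_match))  # 0
```

Because an empty result is returned when nothing matches, indexing with [1] on a page that lacks the element raises an IndexError, so checking the length first is a reasonable precaution.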

How to Modify a Tree?

This is the most interesting part. Beautiful Soup allows you to make changes to the parse tree according to your own requirements. Using attributes, we can change a tag’s properties. Using methods such as .new_string(), .new_tag(), and .insert_before(), we can add new tags and strings to the tree.

Let’s understand this with a small example.

<div class="q">test1</div>

I want to change the name of the tag and the class.

from bs4 import BeautifulSoup

soup=BeautifulSoup('<div class="q">test1</div>','html.parser')

data = soup.div

data.name='BeautifulSoup'

data['class']='q2'

print(data)

<BeautifulSoup class="q2">test1</BeautifulSoup>

We can even delete the attribute like this.

from bs4 import BeautifulSoup

soup=BeautifulSoup('<div class="q">test1</div>','html.parser')

data = soup.div

data.name='BeautifulSoup'

del data['class']

print(data)

<BeautifulSoup>test1</BeautifulSoup>

This is just an example of how you can make changes to the parse tree using various methods provided by BS4.
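Adding new elements works in a similar way. The following sketch uses new_tag(), append(), and insert_before(); the link text and the intro paragraph are made-up values for illustration.

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup('<div class="q">test1</div>', "html.parser")
div = soup.div

# Create a new <a> tag and append it inside the <div>.
link = soup.new_tag("a", href="https://www.scrapingdog.com/")
link.string = "Scrapingdog"
div.append(link)

# Create a new <p> tag and place it right before the <div>.
intro = soup.new_tag("p")
intro.string = "intro"
div.insert_before(intro)

print(soup)
```

New tags are created through the soup object and then attached to the tree, so the same tag can be built once and placed wherever it is needed.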

Points to remember while using Beautiful Soup

- HTML and XML documents are written in a specific encoding such as ASCII or UTF-8, but when you load a document into BeautifulSoup it is converted to Unicode. By default, BS4 uses a sub-library called Unicode, Dammit for this purpose.

- While using BeautifulSoup you might face two kinds of errors: AttributeError and KeyError. An AttributeError typically occurs when find() returns None because the element is missing and you then access an attribute such as .text on the result. A KeyError occurs when you access an attribute that the tag does not have, for example tag['href'] on a tag without an href.

- You can use the diagnose() function from bs4.diagnose to analyze what BS4 does to your document. It shows how the different installed parsers handle the document.
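The two error types mentioned above can be reproduced and guarded against with a few lines. This is a minimal sketch on a made-up HTML string, not a complete error-handling strategy.

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup('<div class="q">test1</div>', "html.parser")

# AttributeError: find() returns None when nothing matches,
# and None has no .text attribute.
try:
    soup.find("span").text
except AttributeError as exc:
    attribute_error = str(exc)

# KeyError: subscript access to an attribute the tag does not define.
try:
    soup.div["id"]
except KeyError as exc:
    key_error = exc

# Safer alternatives that return None instead of raising.
span = soup.find("span")
span_text = span.text if span is not None else None
div_id = soup.div.get("id")

print(attribute_error)
print(span_text, div_id)  # None None
```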

Conclusion

Beautiful Soup is a smart library. It makes our parsing job quite simple. You can use it in more innovative ways than shown in this tutorial. While using it in a live project, do remember to use try/except statements in order to avoid production crashes. You can use lxml as well in place of BS4 for data extraction, but personally I like Beautiful Soup more due to its vast community support.

I hope you now have a good idea of how Beautiful Soup works. Please do share this blog on your social media platforms. Let me know if you have any scraping-related queries. I would be happy to help you out.
