TL;DR

- Call `https://api.scrapingdog.com/scrape?api_key=&url=…` (1k free credits) to grab Homegate.ch HTML.

- `requests` + `BeautifulSoup` pulls price, address & description from `div[data-test='result-list-item']`.

- Paginate via `ep = 1-10`, loop pages, append rows, save to `homegate.csv` with pandas.

- Scrapingdog auto-handles proxies, headless browser & CAPTCHAs.

Scraping real estate data can offer valuable insights into market trends, investment opportunities, and property availability.

In this tutorial, we’ll explore how to use Python to scrape property listings from Homegate.ch, one of Switzerland’s most popular real estate websites.

This guide will walk you through the entire process, from setting up your environment to extracting useful data like property prices, locations, and descriptions.

Let’s get started!!

Requirements To Scrape Data From Homegate

This tutorial assumes you have Python 3.x installed on your machine. If not, you can download it from python.org. Now, create a working folder with any name you like. I am naming mine homegate.

```bash
mkdir homegate
```

We need to install a few libraries before starting the project.

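- `requests` for making an HTTP connection with the target website.

- `BeautifulSoup` for parsing the raw data.

- `pandas` for storing data in a CSV file.

All three can be installed with pip: `pip install requests beautifulsoup4 pandas`.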

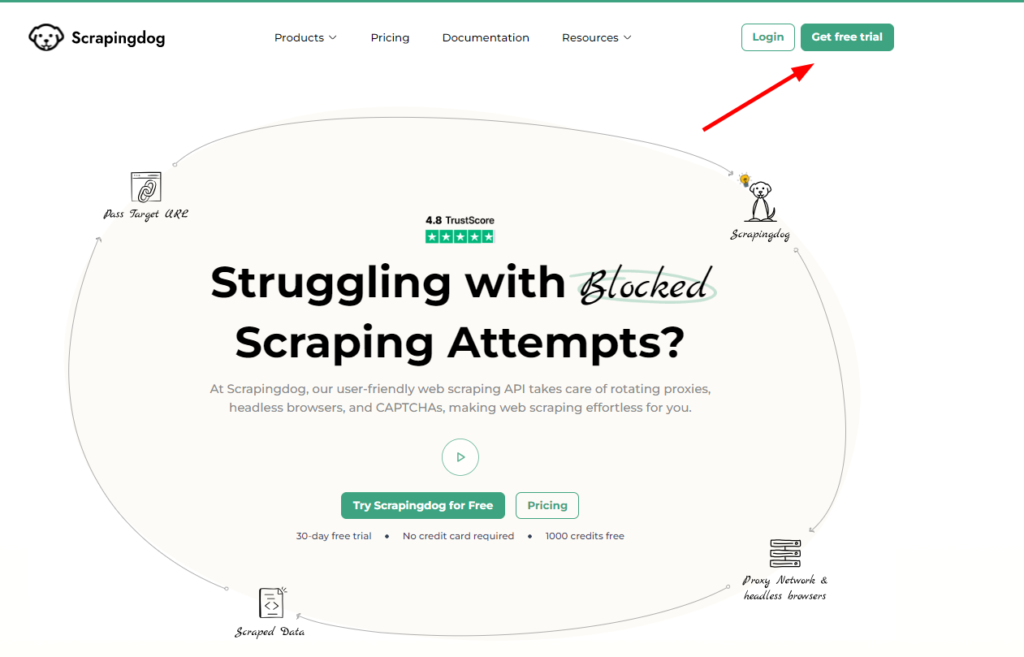

For scraping this website, we are going to use Scrapingdog’s web scraping API, which will handle all the proxies, headless browsers, and CAPTCHAs for us. You can sign up for the free pack to start with 1000 free credits.

The final step before coding is to create a Python file for our code. I am naming the file estate.py.

Scraping Homegate with Python

Before we start coding the scraper, take a moment to read Scrapingdog’s documentation; it’ll give you a clear idea of how we can use the API to scrape Homegate.ch at scale.

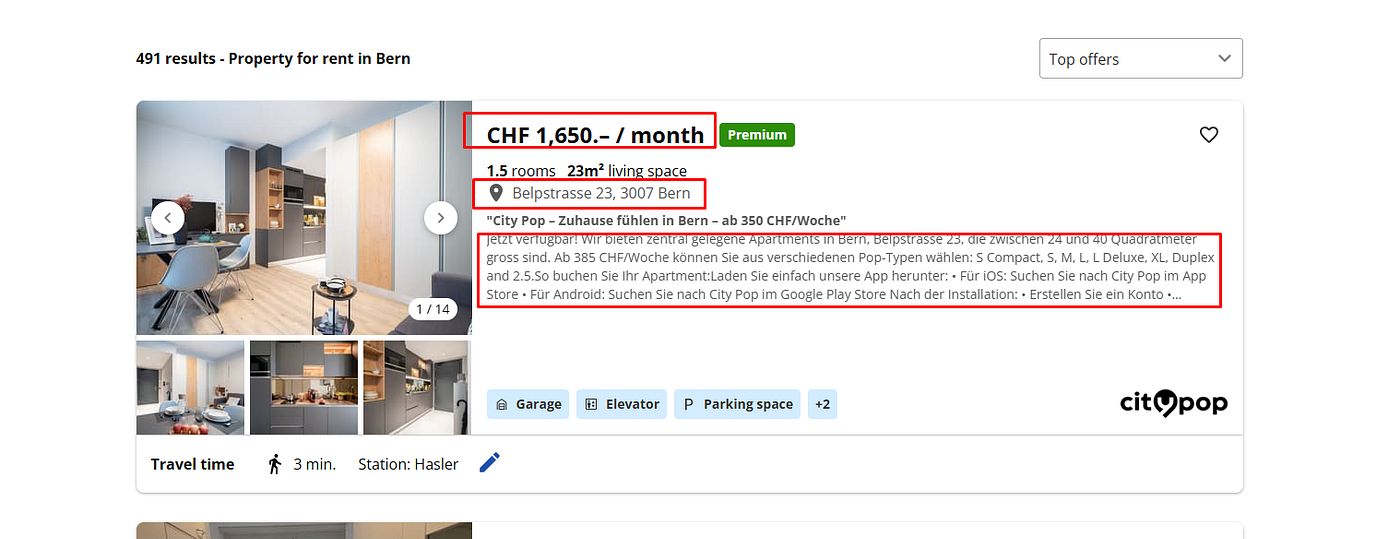

It’s always wise to determine exactly what information we want to extract from the target page before proceeding.

We are going to scrape:

- Price

- Address

- Description

Now, we have to find the location of each element inside the DOM.

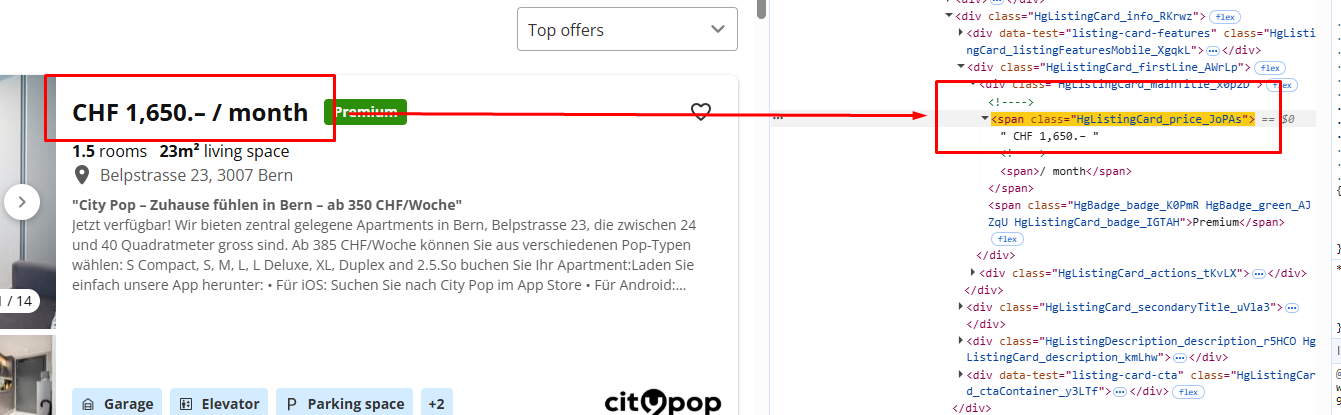

The price is stored inside the span tag with the class HgListingCard_price_JoPAs.

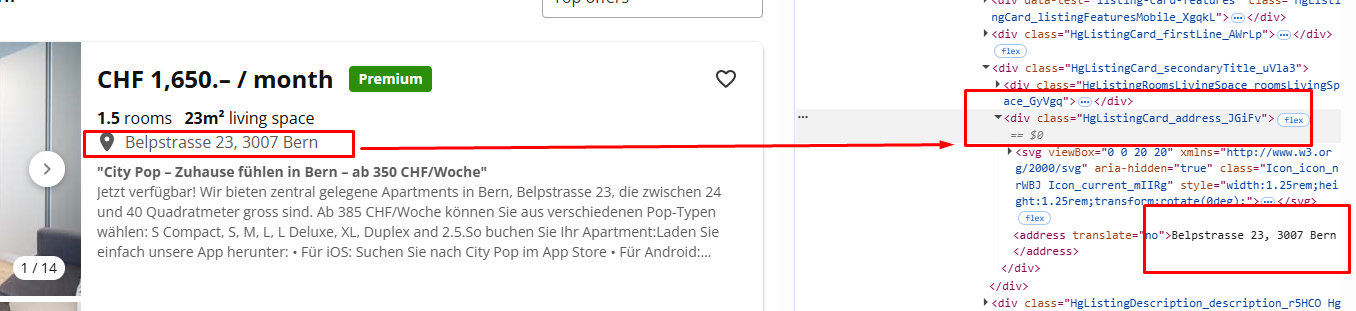

The address is stored inside a div tag with a class HgListingCard_address_JGiFv.

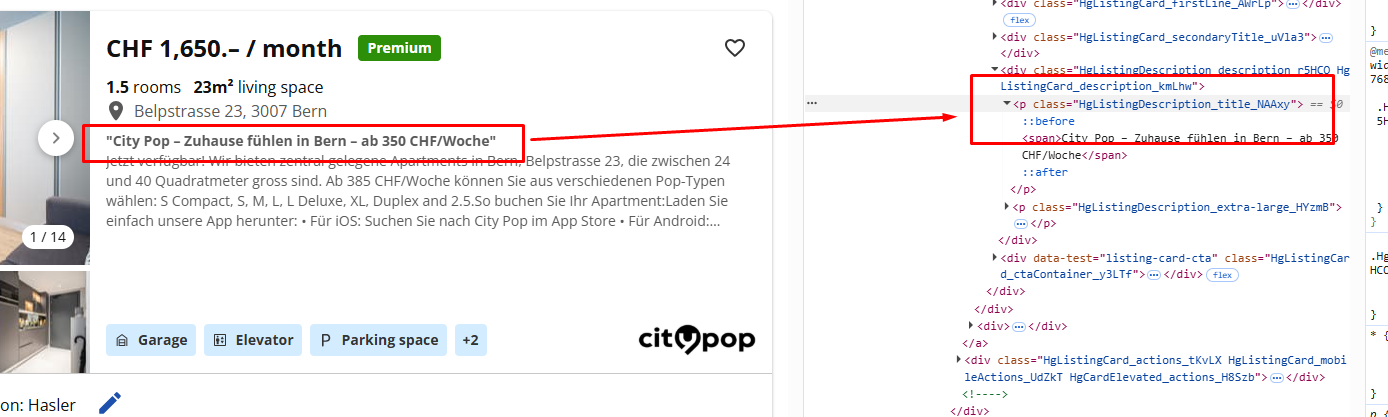

The description is stored inside a p tag with class HgListingDescription_title_NAAxy.

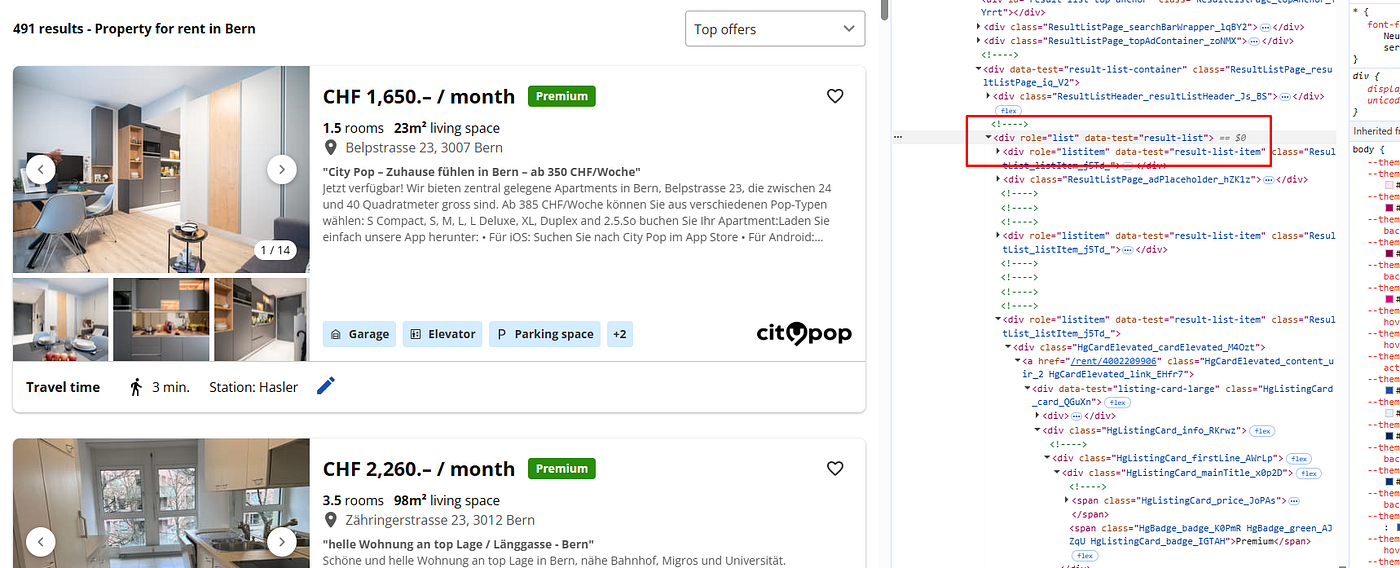

And all these properties are stored inside a div tag with the attribute data-test and value result-list-item.

Scraping Raw HTML from Homegate

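```python
import requests
from bs4 import BeautifulSoup
import pandas as pd

l = []    # will hold one dictionary per listing
obj = {}  # holds the fields of the listing being parsed

# Parameters sent to Scrapingdog; 'url' is the Homegate page to fetch
params = {
    'api_key': 'your-api-key',
    'url': 'https://www.homegate.ch/rent/real-estate/city-bern/matching-list?ep=1',
    'dynamic': 'false',
}

response = requests.get("https://api.scrapingdog.com/scrape", params=params)

print(response.status_code)
print(response.text)
```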

The code is very simple: we make a GET request to Scrapingdog’s API, which fetches the target page for us. Remember to use your own API key in the above code.
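To make it clearer what that request contains, here is a minimal sketch that rebuilds the same URL by hand with the standard library. `build_scrapingdog_url` is a hypothetical helper written for this illustration, not part of any Scrapingdog client; `requests` performs equivalent encoding on its own when you pass `params`.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

# Endpoint used throughout this tutorial
API_ENDPOINT = "https://api.scrapingdog.com/scrape"


def build_scrapingdog_url(api_key, target_url, dynamic="false"):
    """Return the full GET URL for one target page (hypothetical helper)."""
    query = urlencode({"api_key": api_key, "url": target_url, "dynamic": dynamic})
    return f"{API_ENDPOINT}?{query}"


full_url = build_scrapingdog_url(
    "your-api-key",
    "https://www.homegate.ch/rent/real-estate/city-bern/matching-list?ep=1",
)
print(full_url)

# Decoding the query string gives back the original target URL unchanged,
# including its own "?ep=1" part.
decoded = parse_qs(urlsplit(full_url).query)
print(decoded["url"][0])
```

The point to notice is that the Homegate URL, including its own query string, travels as a single percent-encoded value of the `url` parameter.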

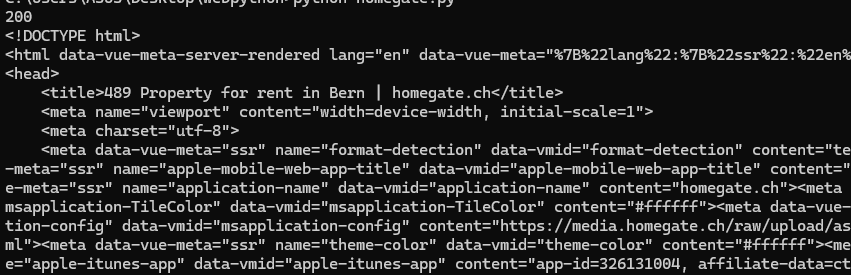

If we get a 200 status code, then we can proceed with the parsing process. Let’s run this code.

We got a 200 status code, and that means we have successfully scraped homegate.ch.
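In a longer run you may not always get a 200 on the first try. The sketch below shows one way to retry before giving up. It is only an illustration: `fetch_with_retry` is a hypothetical helper, the number of attempts is arbitrary, and the stubbed responses (including the 500 status) are invented so the example runs without an API key.

```python
import time


def fetch_with_retry(fetch, attempts=3, delay=0.0):
    """Call fetch() until it reports status 200 or the attempts run out.

    `fetch` is any zero-argument callable returning (status_code, text).
    Returns the page text, or None if every attempt failed.
    """
    for attempt in range(1, attempts + 1):
        status, text = fetch()
        if status == 200:
            return text
        print(f"attempt {attempt}: got status {status}")
        time.sleep(delay)  # pause before the next attempt
    return None


# Stubbed responses for demonstration: one failure, then a success.
responses = iter([(500, ""), (200, "<html>ok</html>")])
html = fetch_with_retry(lambda: next(responses))
print(html)
```

With `requests`, `fetch` could be a small function that calls `requests.get(...)` with the parameters shown earlier and returns `(response.status_code, response.text)`.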

Parsing the data with BeautifulSoup

```python
import requests
from bs4 import BeautifulSoup
import pandas as pd

l = []    # will hold one dictionary per listing
obj = {}  # holds the fields of the listing being parsed

params = {
    'api_key': 'your-api-key',
    'url': 'https://www.homegate.ch/rent/real-estate/city-bern/matching-list?ep=1',
    'dynamic': 'false',
}

response = requests.get("https://api.scrapingdog.com/scrape", params=params)

print(response.status_code)
# print(response.text)

soup = BeautifulSoup(response.text, 'html.parser')

# Every listing card is a div with data-test="result-list-item"
allData = soup.find_all("div", {"data-test": "result-list-item"})

for data in allData:
    # find() returns None when an element is missing, so .text raises AttributeError
    try:
        obj["price"] = data.find("span", {"class": "HgListingCard_price_JoPAs"}).text
    except AttributeError:
        obj["price"] = None

    try:
        obj["address"] = data.find("div", {"class": "HgListingCard_address_JGiFv"}).text
    except AttributeError:
        obj["address"] = None

    try:
        obj["description"] = data.find("p", {"class": "HgListingDescription_title_NAAxy"}).text
    except AttributeError:
        obj["description"] = None

    l.append(obj)
    obj = {}  # start a fresh dictionary for the next listing

print(l)
```

Let me explain the logic behind this code.

- Imports required libraries: `requests`, `BeautifulSoup`, and `pandas`.

- Initializes an empty list `l` and dictionary `obj`.

- Sets up API parameters with Scrapingdog, including the target URL and API key.

- Sends a GET request to Scrapingdog to scrape the specified webpage.

- Prints the HTTP response status code.

- Parses the HTML content using BeautifulSoup.

- Finds all listing elements on the page using a specific `div` attribute.

- Uses a `for` loop to iterate over every property.

- Tries to extract the price, address, and description.

- Assigns `None` if any data is missing.

- Adds the extracted data to the list `l`.

- Prints the final list of extracted data.
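If you want to try the parsing steps without spending API credits, the sketch below runs the same selectors against a small hand-written HTML sample. The sample markup and its values are invented for illustration and only mimic the structure described above; `text_or_none` and `parse_listings` are hypothetical helper names, and `get_text(strip=True)` is used instead of `.text` so surrounding whitespace is trimmed.

```python
from bs4 import BeautifulSoup

# Invented sample that mimics the listing structure; real pages contain far more markup.
SAMPLE_HTML = """
<div data-test="result-list-item">
  <span class="HgListingCard_price_JoPAs">CHF 1850</span>
  <div class="HgListingCard_address_JGiFv">Musterstrasse 1, 3011 Bern</div>
  <p class="HgListingDescription_title_NAAxy">Bright 3.5-room flat</p>
</div>
<div data-test="result-list-item">
  <div class="HgListingCard_address_JGiFv">Beispielweg 7, 3012 Bern</div>
</div>
"""


def text_or_none(parent, tag, class_name):
    """Return the stripped text of the first matching child, or None if absent."""
    node = parent.find(tag, class_=class_name)
    return node.get_text(strip=True) if node else None


def parse_listings(html):
    """Return one dictionary per listing card found in the HTML."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for card in soup.find_all("div", attrs={"data-test": "result-list-item"}):
        rows.append({
            "price": text_or_none(card, "span", "HgListingCard_price_JoPAs"),
            "address": text_or_none(card, "div", "HgListingCard_address_JGiFv"),
            "description": text_or_none(card, "p", "HgListingDescription_title_NAAxy"),
        })
    return rows


rows = parse_listings(SAMPLE_HTML)
for row in rows:
    print(row)
```

The second card has no price or description, so those fields come back as `None`, which is the same outcome the try/except blocks produce.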

Handling pagination

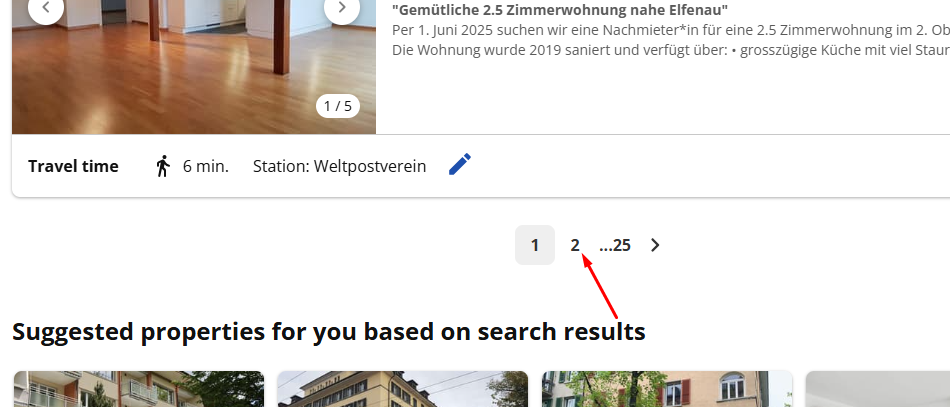

You will notice that when you click the second page, a new URL appears.

The URL of that page looks like https://www.homegate.ch/rent/real-estate/city-bern/matching-list?ep=2. So, that means the parameter ep is changing the page.
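As a quick illustration of how the `ep` parameter maps to page URLs, here is a small sketch that builds the URL for each page in a range. `page_urls` is a hypothetical helper; only the base URL and the `ep` parameter come from the page itself.

```python
BASE_URL = "https://www.homegate.ch/rent/real-estate/city-bern/matching-list"


def page_urls(first_page, last_page):
    """Return the listing URL for every page from first_page to last_page inclusive."""
    # range() excludes its end value, hence the + 1
    return [f"{BASE_URL}?ep={page}" for page in range(first_page, last_page + 1)]


urls = page_urls(1, 3)
for url in urls:
    print(url)
```

Note that Python's `range` stops one short of its end value, so covering pages 1 to 10 means writing `range(1, 11)`.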

Now, to collect data from every page, we wrap the scraping code in another for loop that runs over the page numbers.

```python
import requests
from bs4 import BeautifulSoup
import pandas as pd

l = []    # will hold one dictionary per listing
obj = {}  # holds the fields of the listing being parsed

# Pages are numbered from 1, so this covers ep=1 through ep=10
for i in range(1, 11):
    params = {
        'api_key': 'your-api-key',
        'url': 'https://www.homegate.ch/rent/real-estate/city-bern/matching-list?ep={}'.format(i),
        'dynamic': 'false',
    }

    response = requests.get("https://api.scrapingdog.com/scrape", params=params)
    print(response.status_code)

    soup = BeautifulSoup(response.text, 'html.parser')
    allData = soup.find_all("div", {"data-test": "result-list-item"})

    for data in allData:
        # find() returns None when an element is missing, so .text raises AttributeError
        try:
            obj["price"] = data.find("span", {"class": "HgListingCard_price_JoPAs"}).text
        except AttributeError:
            obj["price"] = None

        try:
            obj["address"] = data.find("div", {"class": "HgListingCard_address_JGiFv"}).text
        except AttributeError:
            obj["address"] = None

        try:
            obj["description"] = data.find("p", {"class": "HgListingDescription_title_NAAxy"}).text
        except AttributeError:
            obj["description"] = None

        l.append(obj)
        obj = {}  # start a fresh dictionary for the next listing

print(l)
```

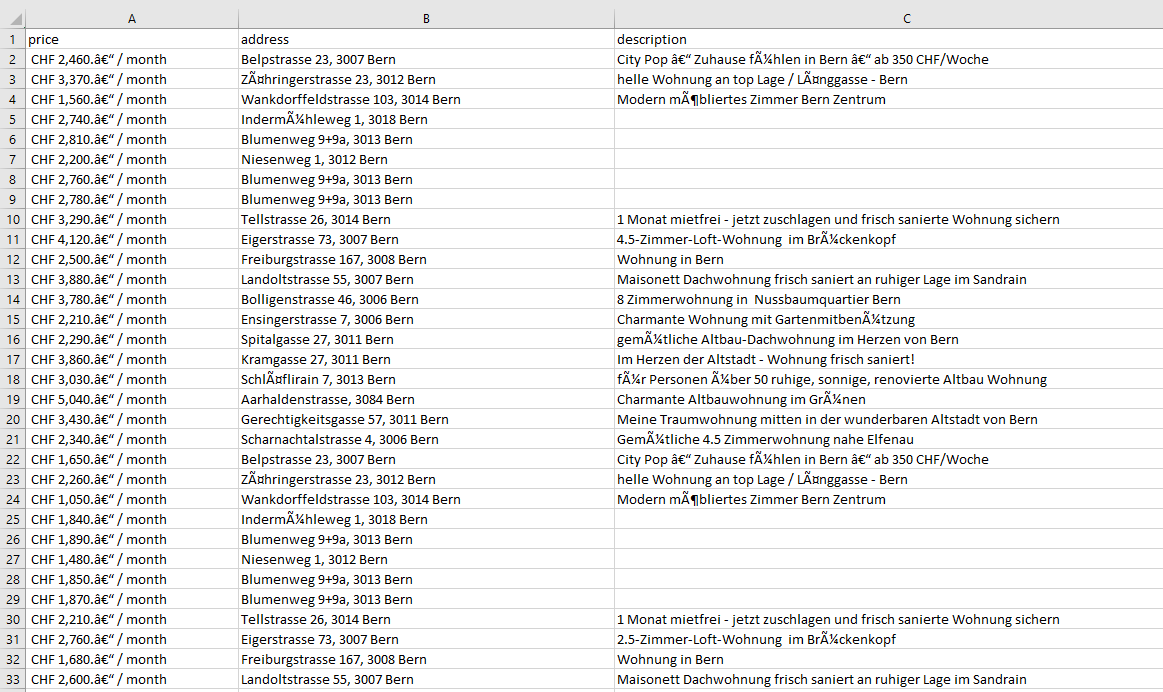

Saving data to CSV

Here we will use the pandas library to save the collected data to a CSV file.

```python
df = pd.DataFrame(l)
df.to_csv('homegate.csv', index=False, encoding='utf-8')
```

- Creates a DataFrame `df` from the list `l`.

- Saves the DataFrame to a CSV file named `homegate.csv`.

- Disables the index column in the CSV using `index=False`.

Once you run it, you will find a CSV file by the name homegate.csv.
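To get a feel for what ends up in the file, here is a small sketch that writes two rows to an in-memory buffer. It swaps pandas for the standard library's `csv` module so it runs without extra dependencies; the sample rows are invented, and `rows_to_csv` is a hypothetical helper.

```python
import csv
import io

# Invented sample rows in the same shape as the scraped list
rows = [
    {"price": "CHF 1850", "address": "Musterstrasse 1, 3011 Bern", "description": "Bright flat"},
    {"price": None, "address": "Beispielweg 7, 3012 Bern", "description": None},
]


def rows_to_csv(rows, fieldnames=("price", "address", "description")):
    """Serialize a list of dictionaries to CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


csv_text = rows_to_csv(rows)
print(csv_text)
```

Missing values (`None`) become empty cells, and addresses containing a comma are quoted automatically. To write a real file with this approach, you would pass a file opened with `open('homegate.csv', 'w', newline='', encoding='utf-8')` to `csv.DictWriter` instead of the buffer.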

Complete Code

```python
import requests
from bs4 import BeautifulSoup
import pandas as pd

l = []    # will hold one dictionary per listing
obj = {}  # holds the fields of the listing being parsed

# Scrape the first three pages; raise the upper bound to collect more
for i in range(1, 4):
    params = {
        'api_key': 'your-api-key',
        'url': 'https://www.homegate.ch/rent/real-estate/city-bern/matching-list?ep={}'.format(i),
        'dynamic': 'false',
    }

    response = requests.get("https://api.scrapingdog.com/scrape", params=params)
    print(response.status_code)
    # print(response.text)

    soup = BeautifulSoup(response.text, 'html.parser')
    allData = soup.find_all("div", {"data-test": "result-list-item"})

    for data in allData:
        # find() returns None when an element is missing, so .text raises AttributeError
        try:
            obj["price"] = data.find("span", {"class": "HgListingCard_price_JoPAs"}).text
        except AttributeError:
            obj["price"] = None

        try:
            obj["address"] = data.find("div", {"class": "HgListingCard_address_JGiFv"}).text
        except AttributeError:
            obj["address"] = None

        try:
            obj["description"] = data.find("p", {"class": "HgListingDescription_title_NAAxy"}).text
        except AttributeError:
            obj["description"] = None

        l.append(obj)
        obj = {}  # start a fresh dictionary for the next listing

# print(l)

df = pd.DataFrame(l)
df.to_csv('homegate.csv', index=False, encoding='utf-8')
```

You can, of course, alter this code and scrape other stuff as well from the page.

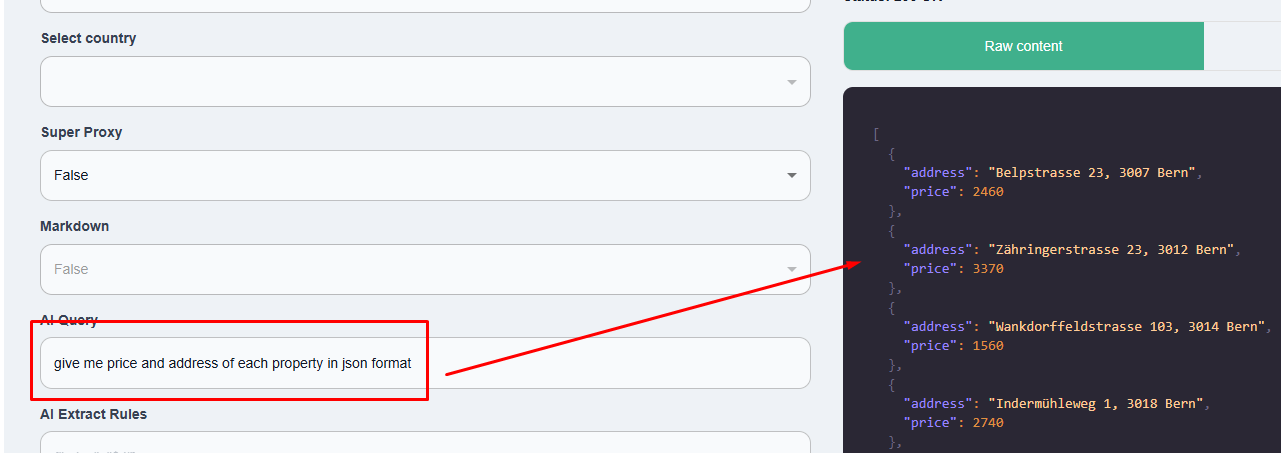

Get Structured Data without Parsing using Scrapingdog AI Scraper

In the above code, we built the parsing logic by finding the location of each element within the DOM. This logic will break if the website is redesigned. To avoid this, you can use Scrapingdog’s AI query feature, where you only have to pass a prompt. Let me show you how.

The code will look like this.

```python
import requests

response = requests.get("https://api.scrapingdog.com/scrape", params={
    'api_key': 'your-api-key',
    'url': 'https://www.homegate.ch/rent/real-estate/city-bern/matching-list?ep=1',
    'dynamic': 'false',
    'ai_query': 'give me price and address of each property in json format'
})

print(response.text)
```

I have just passed the prompt “give me price and address of each property in json format”, and the API returns the parsed data without my writing a single line of parsing code.

This approach will help you maintain the data pipeline even when the website has changed its layout.
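If the prompt asks for JSON, you will probably want to load the response into Python objects. The sketch below shows one cautious way to do that. It assumes the response body is plain JSON text, which is not confirmed here, so it falls back to `None` when decoding fails; the sample body is invented, and `parse_ai_response` is a hypothetical helper.

```python
import json


def parse_ai_response(body):
    """Return the decoded JSON, or None if the body is not valid JSON."""
    try:
        return json.loads(body)
    except json.JSONDecodeError:
        return None


# Invented sample standing in for response.text; the real shape may differ.
sample_body = '[{"price": "CHF 1850", "address": "Musterstrasse 1, 3011 Bern"}]'

listings = parse_ai_response(sample_body)
print(listings)

# A body that is not JSON (for example an HTML error page) yields None.
print(parse_ai_response("<html>error</html>"))
```

Checking for `None` before using the result keeps the pipeline from crashing when the API returns something other than the expected JSON.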

Conclusion

Scraping real estate data from Homegate.ch becomes efficient and straightforward when using Scrapingdog in combination with Python. By leveraging Scrapingdog’s API, we can bypass complex site structures and dynamic content challenges, allowing for reliable and scalable data extraction. Whether you’re gathering data for market analysis, academic research, or a personal project, this approach provides a powerful and flexible solution.