TL;DR

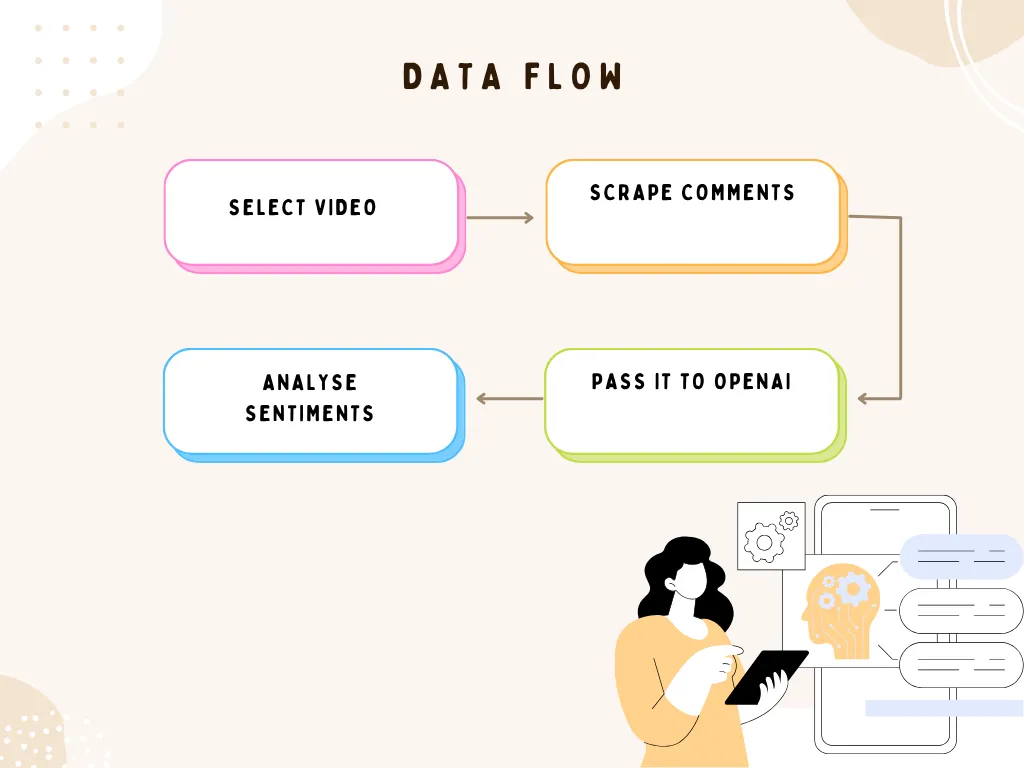

- Scrape comments via Scrapingdog: `GET https://api.scrapingdog.com/youtube/comments/?api_key=YOUR_API_KEY&v=VIDEO_ID`; paginate with `next_page_token`.
- A Python loop fetches the first 3 pages to build `all_comments`.
- The comments are sent to OpenRouter (`openai/gpt-4.1-nano`), which returns a 2-line sentiment summary.
- Demo outcome: sentiment is mostly negative toward Elon's new party.

YouTube is more than just a video platform; it’s a goldmine of audience opinions, feedback, and emotions. Every comment under a video reflects how people feel, react, or engage with content, making it a valuable resource for brands, researchers, and creators.

But manually reading thousands of comments? Not practical.

In this tutorial, we'll scrape YouTube comments and run sentiment analysis on them, using Python to tie it all together. By the end, you'll have a working script that scrapes YouTube comments and instantly tells you what the audience really thinks.

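Prerequisites

- Python 3 with the `requests` library installed (`pip install requests`)
- A Scrapingdog API key
- An OpenRouter API key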

Scrape YouTube Comments

For this step, we will use Scrapingdog's YouTube scraping API, which lets us pull comments from any video. I recommend reading its documentation before moving ahead.

I have also created a video that walks you through using Scrapingdog's YouTube Comment API.

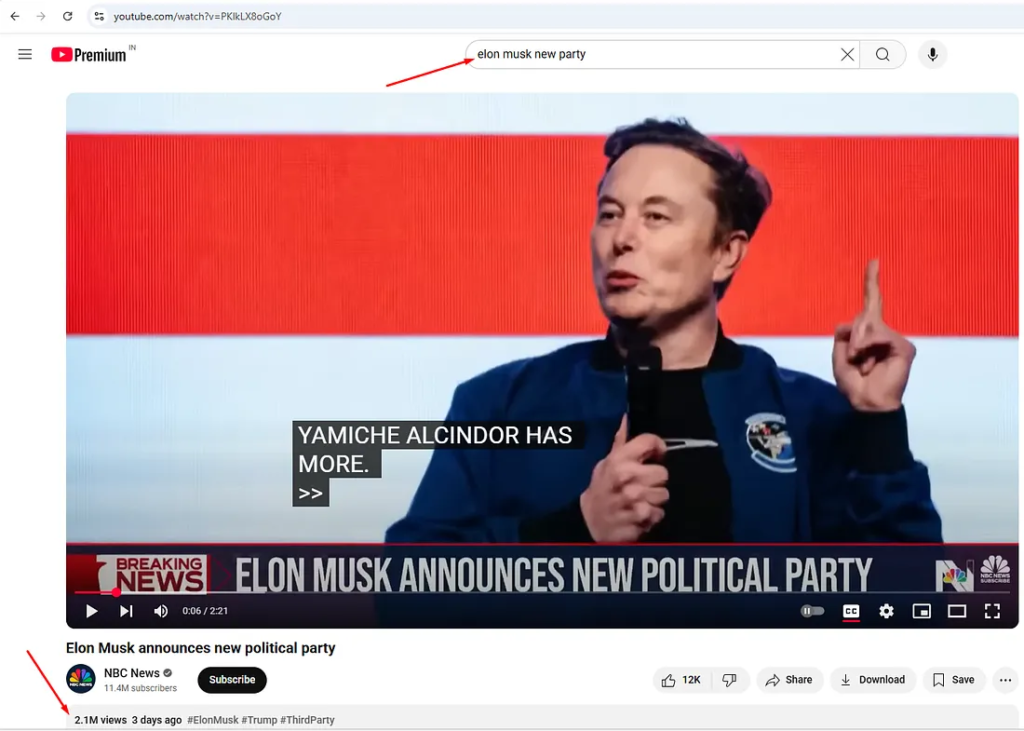

For this example, we are going to scrape data from this video.

Reason for selecting this video:

- I wanted to analyze whether there's genuine public interest in Elon Musk's new party.

- The video had over 3 million views, so I assumed the comment volume would be high as well, making it a good sample for analysis.

The ID of this video is `PKlkLX8oGoY`; it's the value of the `v` parameter in the video URL.
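If you'd rather paste a full URL than copy the ID by hand, a small helper can pull it out. This is a minimal sketch using only the standard library's `urllib.parse`; `extract_video_id` is a hypothetical helper, not part of Scrapingdog's API, and it only handles the two common URL forms (`youtube.com/watch?v=...` and `youtu.be/...`).

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse


def extract_video_id(url: str) -> Optional[str]:
    """Hypothetical helper: return the video ID from a watch URL or youtu.be link."""
    parsed = urlparse(url)
    host = parsed.netloc.lower()
    # Short links carry the ID in the path: https://youtu.be/<id>
    if host.endswith("youtu.be"):
        return parsed.path.lstrip("/") or None
    # Standard watch URLs carry it in the "v" query parameter
    if "youtube.com" in host:
        values = parse_qs(parsed.query).get("v")
        return values[0] if values else None
    # Anything else is not a URL form this sketch understands
    return None


print(extract_video_id("https://www.youtube.com/watch?v=PKlkLX8oGoY"))  # PKlkLX8oGoY
```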

```python
import requests

api_key = "your-api-key"  # your Scrapingdog API key
url = "https://api.scrapingdog.com/youtube/comments/"

params = {
    "api_key": api_key,
    "v": "PKlkLX8oGoY",  # YouTube video ID
}

response = requests.get(url, params=params, timeout=60)

if response.status_code == 200:
    data = response.json()
    print(data)
else:
    print(f"Request failed with status code: {response.status_code}")
```

Running this code returns the comments from the first page. For this analysis, we'll base our conclusion on the comments from the first three pages only.

To get the comments from the next page, we have to pass the `next_page_token` parameter. You'll find this token in the response for the first page.
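Each response therefore carries two things you need: the comment texts and the token for the following page. Here is a minimal sketch of pulling both out of one page, assuming the response shape the code in this tutorial relies on (a `comments` list with `text` fields and a `pagination` object). `parse_comments_page` is a hypothetical name, and the sample dictionary is made up for illustration, not real API output.

```python
from typing import Any, Optional


def parse_comments_page(data: dict[str, Any]) -> tuple[list[str], Optional[str]]:
    """Hypothetical helper: return (comment texts, next-page token) for one page."""
    texts = [c.get("text", "") for c in data.get("comments", [])]
    # Key name taken from this tutorial's code; verify it against the API docs
    token = (data.get("pagination") or {}).get("replies_next_page_token")
    # Treat an empty string the same as a missing token
    return texts, token or None


# Made-up sample in the shape the tutorial's code expects
sample = {
    "comments": [{"text": "Great video"}, {"text": "Not convinced"}],
    "pagination": {"replies_next_page_token": "abc123"},
}
texts, token = parse_comments_page(sample)
print(texts)  # ['Great video', 'Not convinced']
print(token)  # abc123
```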

```python
import requests

api_key = "your-api-key"  # your Scrapingdog API key
url = "https://api.scrapingdog.com/youtube/comments/"

all_comments = []
next_page_token = ""

# Fetch the first three pages of comments
for page in range(3):
    params = {
        "api_key": api_key,
        "v": "PKlkLX8oGoY",
        "next_page_token": next_page_token,
    }
    response = requests.get(url, params=params, timeout=60)

    if response.status_code != 200:
        print(f"Request failed with status code: {response.status_code}")
        break  # stop instead of re-requesting the same page

    data = response.json()
    for comment in data["comments"]:
        print(comment["text"])
        all_comments.append(comment["text"])

    # Token for the next page (key name as used throughout this tutorial)
    next_page_token = (data.get("pagination") or {}).get("replies_next_page_token")
    print(next_page_token)
    if not next_page_token:
        break  # no more pages
```

Once the loop finishes, the `all_comments` list holds every comment from the first three pages.
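The same page-by-page logic can be factored into a small generator so it can be tested without network calls. This is a sketch under assumptions: `iter_comments` and the fetcher signature are hypothetical, and in real use the fetcher would wrap the `requests.get` call above and return the comment texts plus the next token (or `None` when there are no more pages).

```python
from typing import Callable, Iterator, Optional

# Hypothetical fetcher type: takes the current token (None for the first page)
# and returns (comment_texts, next_token); next_token is None on the last page.
Fetcher = Callable[[Optional[str]], tuple[list[str], Optional[str]]]


def iter_comments(fetch_page: Fetcher, max_pages: int = 3) -> Iterator[str]:
    """Yield comments page by page, stopping at max_pages or when no token is left."""
    token: Optional[str] = None
    for _ in range(max_pages):
        texts, token = fetch_page(token)
        yield from texts
        if not token:
            break  # last page reached


# Fake fetcher backed by a dict, standing in for the real API call
pages = {None: (["a", "b"], "t1"), "t1": (["c"], "t2"), "t2": (["d"], None)}
print(list(iter_comments(lambda tok: pages[tok], max_pages=5)))  # ['a', 'b', 'c', 'd']
```

Passing the fetcher in as a function keeps the stopping rules (page limit, missing token) separate from the HTTP details.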

Now, let's pass these comments to an OpenAI model through OpenRouter and see how viewers feel about the new party.

Sentiment Analysis using OpenAI

```python
import requests

OPENROUTER_API_KEY = "your-openrouter-key"


def analyze_sentiment(comments):
    # Instruction followed by the comments, one per line
    prompt = "Classify the sentiment of each YouTube comment below as Positive, Negative, or Neutral. Return in the format: Comment → Sentiment.\n\n"
    prompt += "\n".join(str(c) for c in comments)

    ai_query = "Classify the sentiment of the people in 2 lines"
    user_prompt = f"User Query: {ai_query}\n\nText:\n{prompt}"
    system_prompt = "You are a sentiment analysis engine. Return a 2 line result whether people are positive or negative on elon's new party."

    headers = {
        "Authorization": f"Bearer {OPENROUTER_API_KEY}",
        "Content-Type": "application/json",
        "HTTP-Referer": "https://scrapingdog.com",  # optional: identifies your app to OpenRouter
        "X-Title": "YouTube Comment Sentiment Analyzer",  # optional: app name
    }

    payload = {
        "model": "openai/gpt-4.1-nano",
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "temperature": 0.2,  # low temperature for more consistent output
    }

    response = requests.post(
        "https://openrouter.ai/api/v1/chat/completions",
        headers=headers,
        json=payload,
        timeout=120,
    )

    if response.status_code == 200:
        result = response.json()
        return result["choices"][0]["message"]["content"].strip()

    print("❌ Error:", response.status_code, response.text)
    return "Error"
```

Let me explain this code.

- Builds a prompt by joining all the YouTube comments, one per line.
- Prepares a `user_prompt` with the query "Classify the sentiment of the people in 2 lines".
- Adds a `system_prompt` telling the AI to summarize public sentiment on Elon's new party.
- Sets the request headers: the API key (required) plus the optional `HTTP-Referer` and `X-Title` headers that identify your app to OpenRouter.
- Constructs the payload for the OpenRouter chat API using `openai/gpt-4.1-nano`.
- Sends a POST request to OpenRouter's `/chat/completions` endpoint.
- If successful, returns the 2-line AI-generated sentiment summary.
- If it fails, prints an error and returns `"Error"`.
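To inspect exactly what gets sent, and to see where the summary lives in the reply, you can split payload building and response parsing out of `analyze_sentiment`. A minimal sketch: `build_payload` and `extract_reply` are hypothetical helpers, the prompt is a trimmed-down version of the one above, and the response dictionary in the usage example is hand-written in the OpenAI-compatible shape that OpenRouter returns, not real model output.

```python
from typing import Any


def build_payload(comments: list[str], model: str = "openai/gpt-4.1-nano") -> dict[str, Any]:
    """Hypothetical helper: assemble a chat-completions payload without sending it."""
    system_prompt = (
        "You are a sentiment analysis engine. Return a 2 line result whether "
        "people are positive or negative on elon's new party."
    )
    # Trimmed-down user prompt: the query followed by one comment per line
    user_prompt = (
        "User Query: Classify the sentiment of the people in 2 lines\n\n"
        "Text:\n" + "\n".join(comments)
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "temperature": 0.2,
    }


def extract_reply(result: dict[str, Any]) -> str:
    """Hypothetical helper: read the assistant text from a chat-completions response."""
    return result["choices"][0]["message"]["content"].strip()


payload = build_payload(["Love it", "Bad idea"])
print(payload["messages"][1]["content"])

# Hand-written response in the OpenAI-compatible shape (not real output)
fake = {"choices": [{"message": {"role": "assistant", "content": "  Mostly negative.\n"}}]}
print(extract_reply(fake))  # Mostly negative.
```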

Once you call `analyze_sentiment(all_comments)`, you will get a two-line summary of how the commenters feel about his new party.
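If you want counts rather than a prose summary, the prompt's per-comment format (`Comment → Sentiment`) can be tallied. This sketch assumes you adjust the prompt so the model returns only those per-comment lines instead of a two-line summary; `tally_sentiments` is a hypothetical helper and the sample text is invented.

```python
from collections import Counter

LABELS = ("Positive", "Negative", "Neutral")


def tally_sentiments(model_output: str) -> Counter:
    """Hypothetical helper: count labels in lines shaped like 'comment → Sentiment'."""
    counts: Counter = Counter()
    for line in model_output.splitlines():
        if "→" not in line:
            continue  # skip headers, blank lines, or free-form text
        # Take the text after the last arrow and normalize case and punctuation
        label = line.rsplit("→", 1)[1].strip().strip(".").capitalize()
        if label in LABELS:
            counts[label] += 1
    return counts


# Invented sample of per-comment model output
sample = "Great plan → Positive\nThis will fail → Negative\nWho cares → neutral\nNo idea → Negative."
print(tally_sentiments(sample).most_common())
```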

Complete Code

You can certainly scrape all the comments for a broader analysis, but for now, the code focuses on a limited set for demonstration purposes.

```python
import requests

OPENROUTER_API_KEY = "your-key"  # OpenRouter API key
api_key = "your-key"  # Scrapingdog API key
url = "https://api.scrapingdog.com/youtube/comments/"


def analyze_sentiment(comments):
    # Instruction followed by the comments, one per line
    prompt = "Classify the sentiment of each YouTube comment below as Positive, Negative, or Neutral. Return in the format: Comment → Sentiment.\n\n"
    prompt += "\n".join(str(c) for c in comments)

    ai_query = "Classify the sentiment of the people in 2 lines"
    user_prompt = f"User Query: {ai_query}\n\nText:\n{prompt}"
    system_prompt = "You are a sentiment analysis engine. Return a 2 line result whether people are positive or negative on elon's new party."

    headers = {
        "Authorization": f"Bearer {OPENROUTER_API_KEY}",
        "Content-Type": "application/json",
        "HTTP-Referer": "https://scrapingdog.com",  # optional: identifies your app to OpenRouter
        "X-Title": "YouTube Comment Sentiment Analyzer",  # optional: app name
    }

    payload = {
        "model": "openai/gpt-4.1-nano",
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "temperature": 0.2,
    }

    response = requests.post(
        "https://openrouter.ai/api/v1/chat/completions",
        headers=headers,
        json=payload,
        timeout=120,
    )

    if response.status_code == 200:
        result = response.json()
        return result["choices"][0]["message"]["content"].strip()

    print("❌ Error:", response.status_code, response.text)
    return "Error"


all_comments = []
next_page_token = ""

# Collect comments from the first three pages
for page in range(3):
    params = {
        "api_key": api_key,
        "v": "PKlkLX8oGoY",
        "next_page_token": next_page_token,
    }
    response = requests.get(url, params=params, timeout=60)

    if response.status_code != 200:
        print(f"Request failed with status code: {response.status_code}")
        break

    data = response.json()
    for comment in data["comments"]:
        all_comments.append(comment["text"])

    # Token for the next page (key name as used throughout this tutorial)
    next_page_token = (data.get("pagination") or {}).get("replies_next_page_token")
    print(next_page_token)
    if not next_page_token:
        break

print(analyze_sentiment(all_comments))
```
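If you do scrape every page, the combined comments may no longer fit comfortably in a single request. One option, an assumption on my part rather than part of the script above, is to split the comments into batches and summarize each batch separately. `batch_comments` is a hypothetical helper, and the 8,000-character default is an arbitrary placeholder, not a documented model limit.

```python
from typing import Iterator


def batch_comments(comments: list[str], max_chars: int = 8000) -> Iterator[list[str]]:
    """Hypothetical helper: group comments so each batch's joined text stays under max_chars.

    max_chars is an arbitrary placeholder (assumption), not a model limit.
    A single comment longer than max_chars is placed in a batch on its own.
    """
    batch: list[str] = []
    size = 0
    for comment in comments:
        extra = len(comment) + (1 if batch else 0)  # +1 for the newline separator
        if batch and size + extra > max_chars:
            yield batch
            batch, size = [], 0
            extra = len(comment)  # first item in a new batch has no separator
        batch.append(comment)
        size += extra
    if batch:
        yield batch


print(list(batch_comments(["aaaa", "bbbb", "cc"], max_chars=9)))
```

Each batch could then be passed to `analyze_sentiment`, for example `[analyze_sentiment(b) for b in batch_comments(all_comments)]`, and the per-batch summaries combined.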

Here are 5 Quick Takeaways:

You can scrape real YouTube comments at scale using Scrapingdog’s YouTube Comment API and Python.

The tutorial shows how to collect comments page by page using `next_page_token` to build a list of all comments.

Once scraped, the comments are sent to an OpenAI model (GPT-4.1-nano, via OpenRouter) to generate a 1–2 line summary of overall public opinion.

This method gives you instant insight into how viewers feel about a video's topic, e.g., whether sentiment is positive, negative, or mixed.

Using an API-based approach means you don’t need browser automation, proxies, or manual parsing to analyze YouTube comments.

Conclusion

In this tutorial, we combined the power of Scrapingdog's YouTube Comment API with an OpenAI GPT model served through OpenRouter to analyze real user sentiment at scale, all in Python.

By automating the process of collecting and classifying comments, you save hours of manual work and get instant insights into how audiences truly feel. Whether you’re tracking reactions to a product launch, political event, or viral video, this setup gives you a powerful edge.

And the best part? No browser automation or complex setup required.

Now, go ahead and plug in your own YouTube video, run the script, and see what the internet thinks.