In my last post, we built an SEO keyword rank tracker using Google Sheets with a dedicated API for Google Search.

In this tutorial, we will use Make.com and Scrapingdog’s Google Maps API to automate lead extraction from Google Maps. This guide walks you through building a local lead scraping tool.

Let’s get started with our setup!

What We Need To Build & Automate Local Lead Scraping

- Make.com Account

- Scrapingdog’s Google Maps API

- Google Sheets

If you are unfamiliar with Make (formerly Integromat), it’s a platform that lets users connect different apps and automate workflows without writing code. If you are a beginner, I recommend watching this video by Kevin Stratvert to get a quick overview of how Make.com works.

In Make.com, you build scenarios: workflows that coordinate several apps to carry out a specific task.

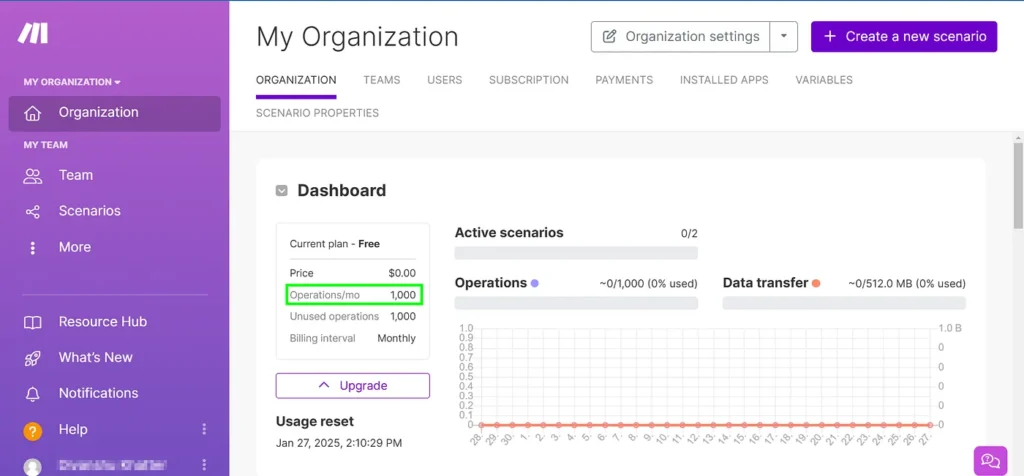

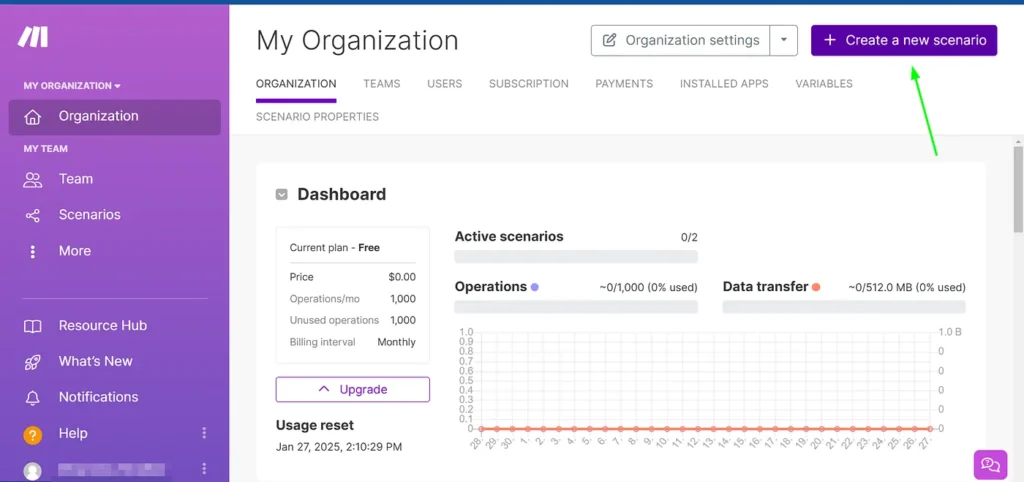

Sign up for free; Make.com’s free plan includes 1,000 operations per month.

When you sign up, you will see this dashboard, where you can track all the details about your account.

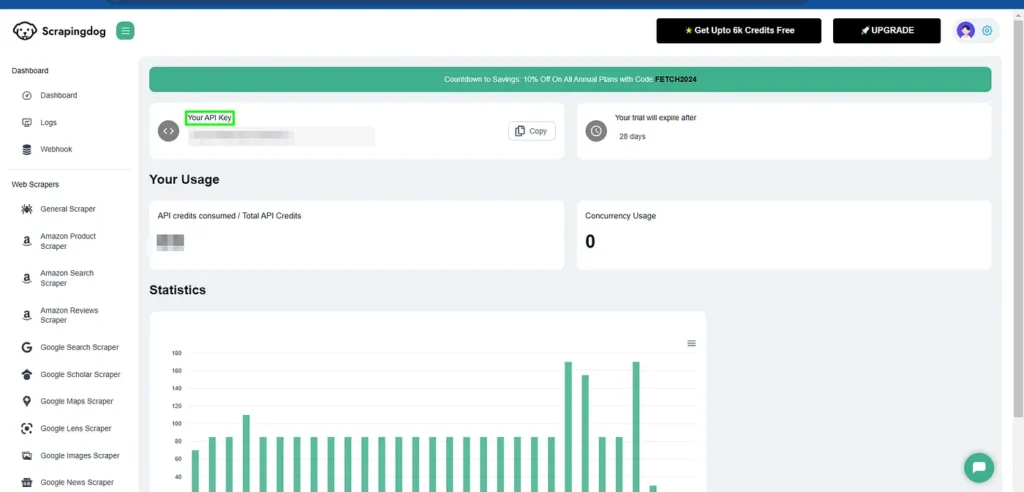

Next, let’s set up a Scrapingdog account. Again, you can sign up for free and get 1,000 free credits to try it out.

Once you sign up, you can find your Scrapingdog API_KEY on the dashboard (we will use it when building our Make.com scenario).

The third thing we need is access to Google Sheets, where we will provide the inputs (keyword, location) and collect the output (business name, address, and phone number). Instead of Google Sheets, you can also use Airtable.

With everything ready, let’s build our Make scenario.

Building Our Make.com Scenario

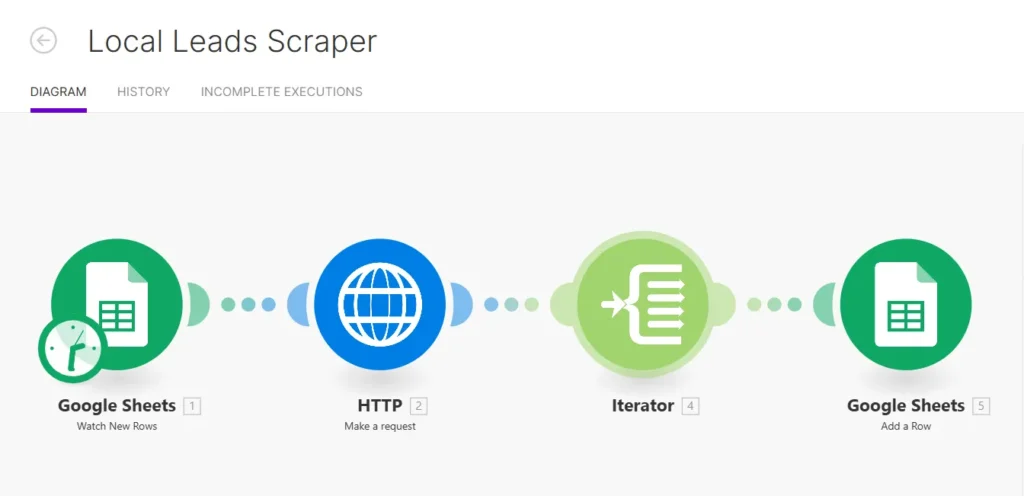

Before building our scenario, let’s understand what each step (or module) is doing here so that you get an idea of the whole process.

Our system starts by monitoring new rows added to a specific Google Sheet. When new data appears, the automation triggers and uses these rows as input for an HTTP request. This request fetches data from Scrapingdog’s Google Maps API based on the inputs provided.

The inputs will be the Keyword (for example, the category of the business you are targeting, like ‘salon’) and the location.
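To make the “watch new rows” idea concrete, here is a minimal Python sketch. It is not how Make implements the trigger internally; it simply assumes the input sheet is a list of [keyword, location] rows (header row excluded) and that we remember how many rows were already processed. The names `take_new_rows` and `sheet` are hypothetical.

```python
from typing import List, Tuple

Row = List[str]  # one input row: [keyword, location]


def take_new_rows(rows: List[Row], last_seen: int) -> Tuple[List[Row], int]:
    """Return the rows added after index `last_seen`, plus the updated pointer.

    Illustrates the idea behind a "watch new rows" trigger: remember how many
    rows were handled last time and only pass on the ones added since then.
    """
    new_rows = rows[last_seen:]
    return new_rows, len(rows)


sheet: List[Row] = [["salon", "30.3073477,-98.0853974,10z"]]

# First run: the single existing row is new.
batch, pointer = take_new_rows(sheet, 0)

# A user adds another row; the next run only sees that one.
sheet.append(["dentist", "30.3073477,-98.0853974,10z"])
batch, pointer = take_new_rows(sheet, pointer)
```

Keeping a pointer rather than re-reading everything is what stops the same keyword and location from being searched twice.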

The Iterator module splits the API response into individual business listings, so the subsequent actions are executed for each entry. This way, no result is skipped, and each potential lead is processed in turn.

Finally, the last module writes the extracted data, such as business names, addresses, and phone numbers, back into Google Sheets, as you can see in the picture above.

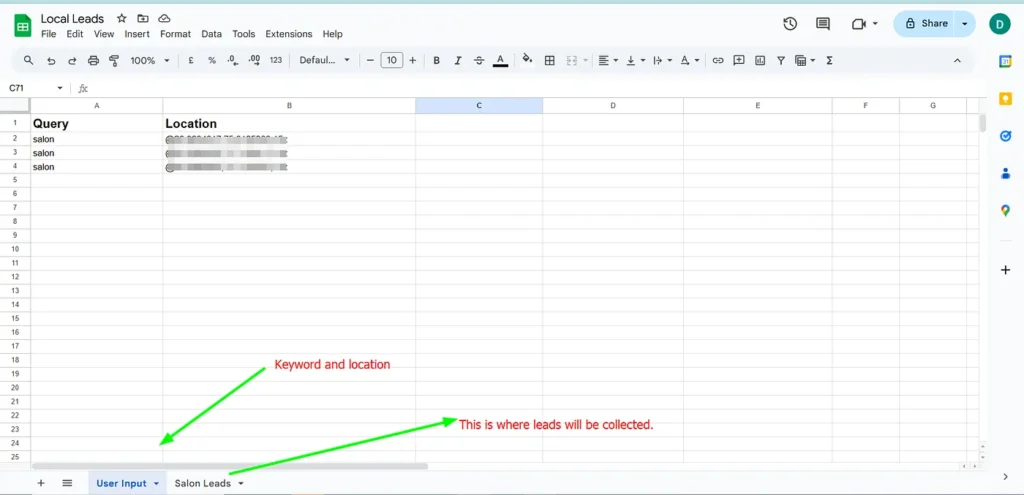

Our spreadsheet has two sheets: in the first, the user enters the keyword and location; in the second, we collect the leads.

I recommend creating a Google Spreadsheet with the same name, “Local Leads”, so that the scenario is easier to follow.

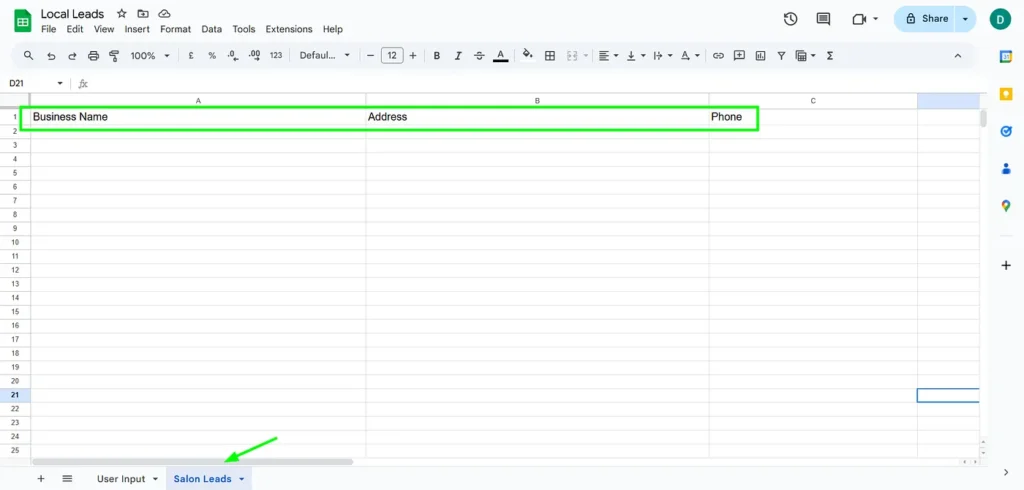

Create the second sheet as well; this is where we will collect Business Names, Addresses, and Phone Numbers.

Back to building our scenario.

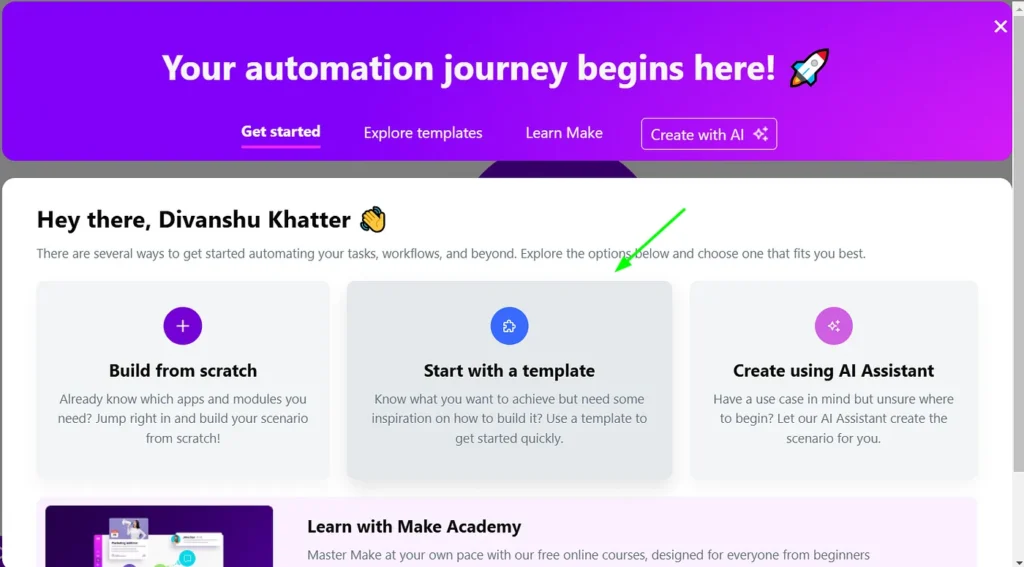

In Make, click “Create a scenario”.

If this is your first time using Make, it will ask how you want to proceed; Make offers ready-made templates that you can use right away.

For our use case, however, we need to build the scenario from scratch, so click “Build from Scratch”.

Here’s a video on how to use this automation ⬇️

If you prefer reading, continue with the steps below.

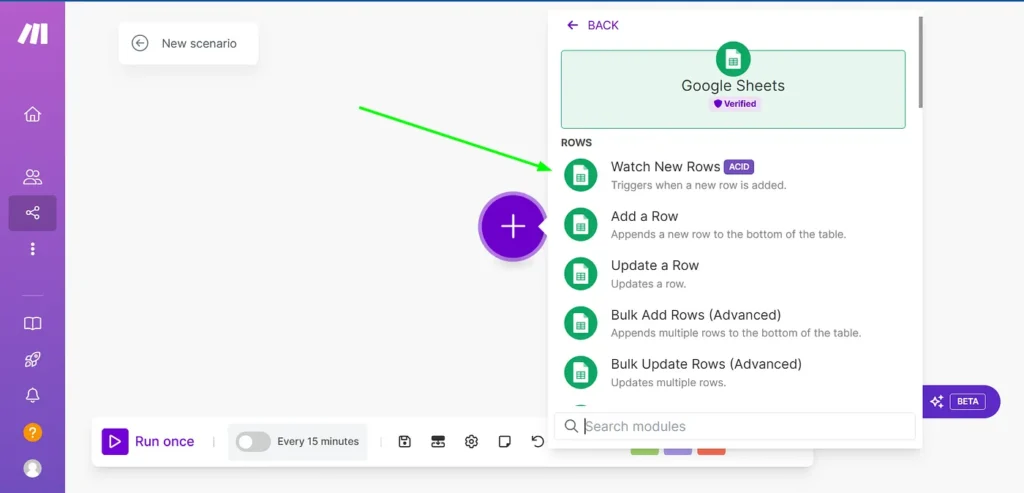

Then, on the dashboard, click the “+” icon and select the Google Sheets module ➡ Watch New Rows.

This creates our first module, which watches for new rows being added.

Right-click the module and click ‘Settings’ to connect our spreadsheet to this module. Again, if this is your first time, Make will ask for permission to connect to Google Sheets.

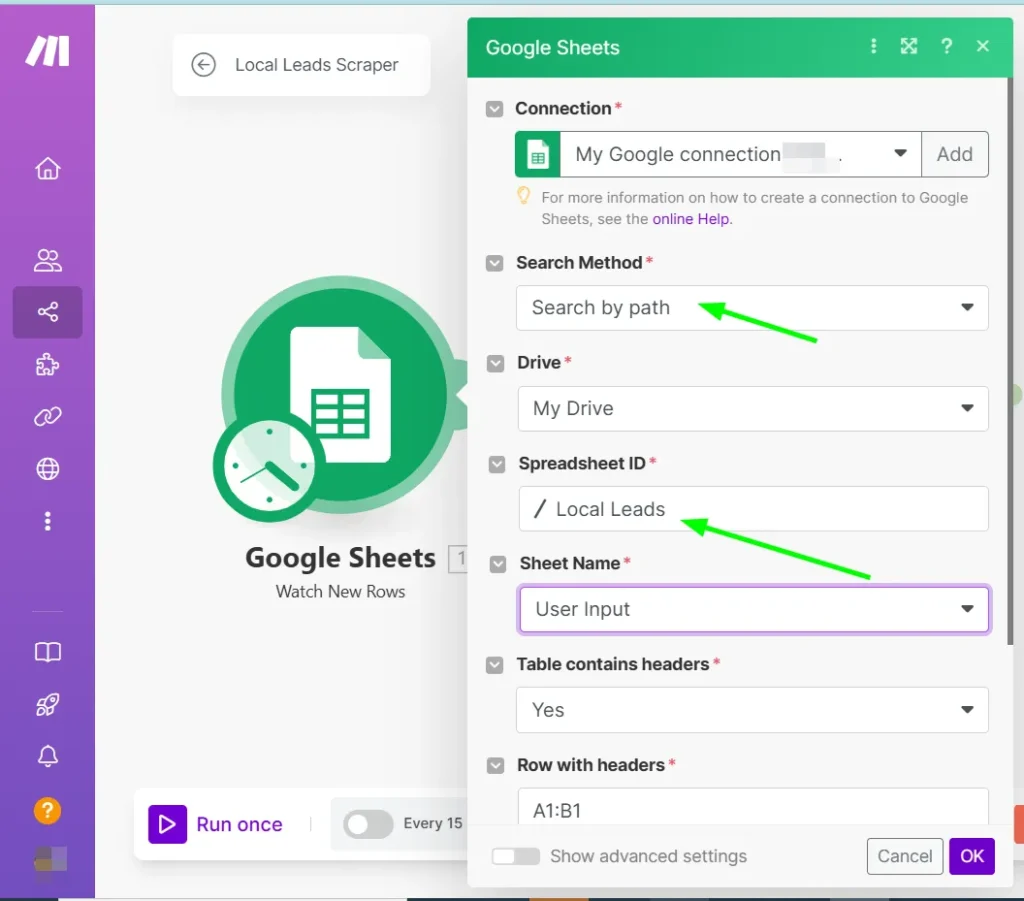

After connecting your account, search for your spreadsheet. I chose to search by path and located my spreadsheet, “Local Leads”.

I also set the sheet name to “User Input”, the sheet from which the trigger fires.

Fill in the remaining details; you can copy the settings for this module from the image.

Connecting the 2nd Module

Now, every time a row is filled in our User Input sheet, we will send an HTTP request to Scrapingdog’s Google Maps API.

Add a new module to our scenario, this time ‘HTTP’ ➡ ‘Make a request’.

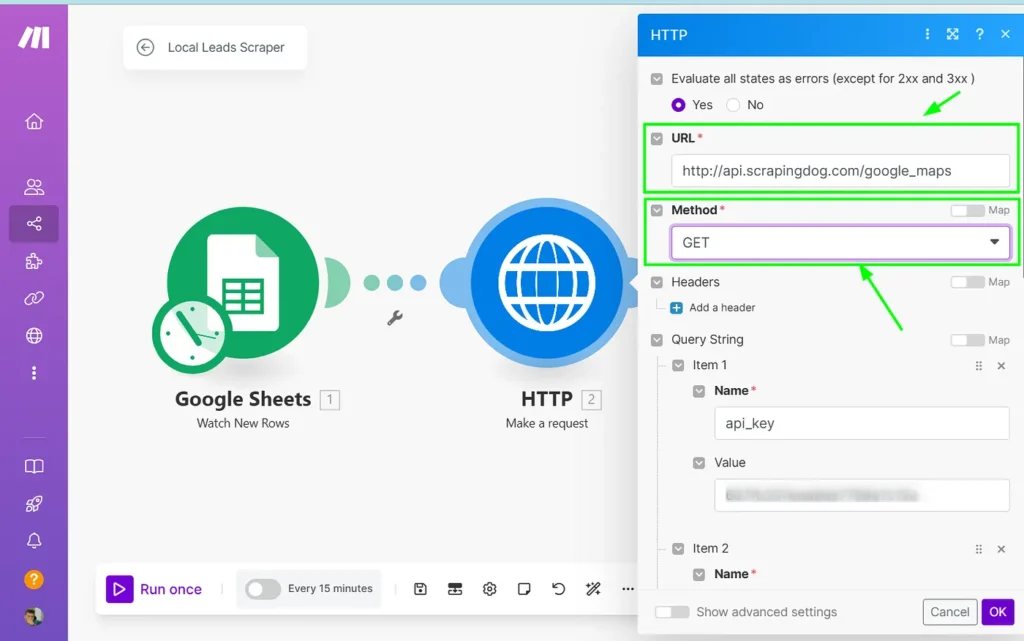

Fill in the details: the URL is the endpoint of Scrapingdog’s Google Maps API, and the method is GET.

The GET method retrieves data from a specified resource on the internet. In this scenario, we use it to query the Google Maps API with the parameters provided in the Google Sheet, which lets us fetch location-specific data.

You can read Scrapingdog’s Google Maps API documentation to understand how the API works.
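A GET request carries all its inputs in the URL’s query string. The Python sketch below only builds that URL (it sends nothing), to show what the HTTP module assembles from the sheet values. The endpoint and the name of the location parameter (`ll` here) are assumptions on my part; confirm both in Scrapingdog’s Google Maps API documentation. `build_maps_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

# Assumed endpoint; confirm it in Scrapingdog's Google Maps API documentation.
BASE_URL = "https://api.scrapingdog.com/google_maps"
# Assumed name of the location parameter (this post calls it "location").
LOCATION_PARAM = "ll"


def build_maps_url(api_key: str, query: str, location: str) -> str:
    """Join the endpoint and the URL-encoded query-string parameters."""
    params = {
        "api_key": api_key,
        "query": query,          # business category, e.g. "salon"
        LOCATION_PARAM: location,  # "lat,lng,zoom" string from the sheet
    }
    return f"{BASE_URL}?{urlencode(params)}"


url = build_maps_url("YOUR_API_KEY", "salon", "30.3073477,-98.0853974,10z")
# Commas in the location are percent-encoded as %2C by urlencode.
```

Make’s HTTP module does this encoding for you when you fill in the query-string fields, which is why you only map the raw values.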

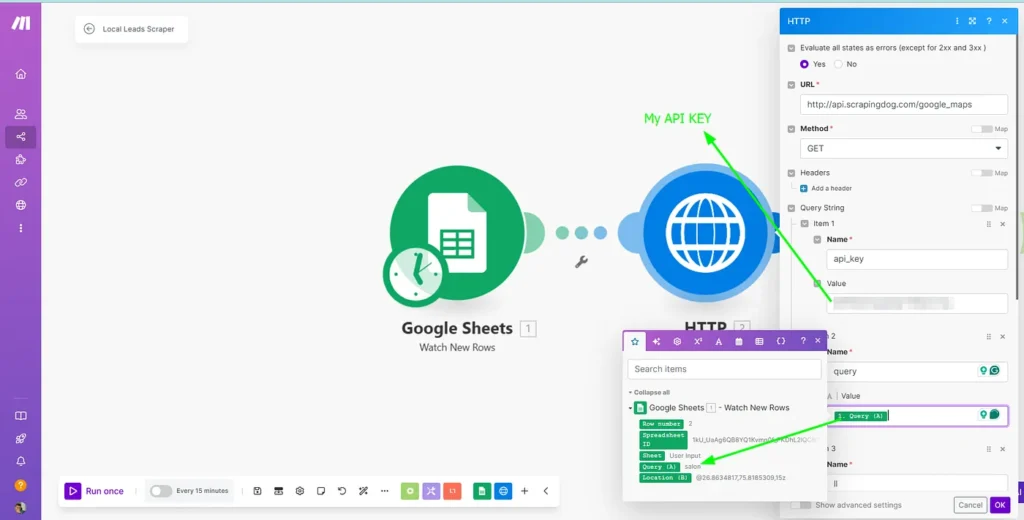

In the query string, we add all the input parameters and map them to the sheet.

We named the next input variable ‘query’ and mapped it to the business category (‘salon’ in our case). We pasted the API_KEY directly; you can get your key from the Scrapingdog dashboard.

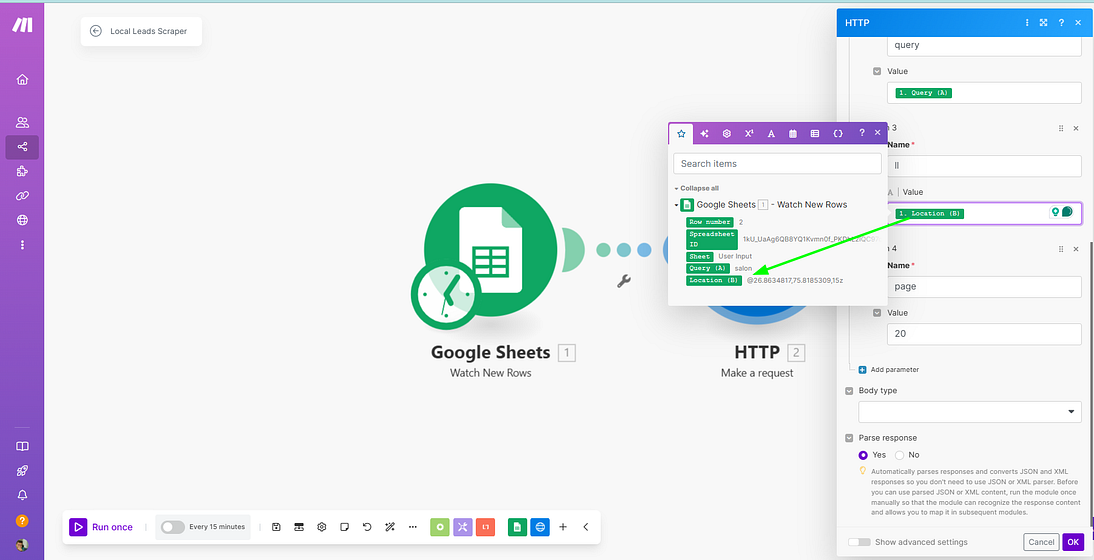

Next, we map the location from our spreadsheet as well.

This module takes the input parameters from our “User Input” sheet and finds all businesses matching your query in that location. Finally, set the option to parse the response to Yes.
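Parsing the response turns the raw JSON text into structured data that later modules can map. Outside Make, the equivalent step is `json.loads`. The `search_results` key comes from this post; the listing fields in the sample (`title`, `address`, `phone`) are assumptions, and the sample body itself is made up for illustration.

```python
import json
from typing import Any, Dict, List


def extract_results(body: str) -> List[Dict[str, Any]]:
    """Parse the raw JSON body and return the list of business listings.

    Assumes the listings sit under a top-level "search_results" key and
    returns an empty list if that key is missing.
    """
    data = json.loads(body)
    return data.get("search_results", [])


# Hypothetical, heavily trimmed response body used only for illustration.
sample_body = (
    '{"search_results": [{"title": "Example Salon", '
    '"address": "1 Main St", "phone": "+1 555-0100"}]}'
)
results = extract_results(sample_body)
```

Without parsing, the next module would receive one long string instead of an array it can loop over.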

Connecting the 3rd Module

Next, we add another module, “Iterator”. This module is crucial because it processes each item in the response array individually, so we can extract specific data points, such as phone numbers or addresses, from each business listing retrieved.

After setting up the Iterator module, we map the output data to be processed for each entry. We do this by configuring the Iterator to handle the search_results array (you can see how the mapping is done in the image above), which contains the detailed results returned by the HTTP request to Scrapingdog’s Google Maps API.

Here’s how the mapping in the Iterator module works:

- Mapping Details: Inside the Iterator module, you map the data fields, such as business name, location, and contact details, from each entry in the search_results array.

- Individual Processing: For each business listing retrieved, the Iterator breaks down the array into individual elements. This allows each business’s details to be processed separately, ensuring that data like phone numbers and addresses are accurately extracted without mixing up entries.

- Output Configuration: The data extracted from each iteration, such as business names and contact information, is then ready to be sent to the next module.
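The Iterator’s job can be pictured as a plain loop: take one listing at a time and keep only the fields we want, in a fixed order. This is a minimal Python sketch, not Make’s implementation; the field names `title`, `address`, and `phone` are assumptions about the response, and `to_lead_rows` is a hypothetical helper.

```python
from typing import Any, Dict, List

# Listing field names are assumptions; check them against a real API response.
FIELDS = ("title", "address", "phone")


def to_lead_rows(search_results: List[Dict[str, Any]]) -> List[List[str]]:
    """Handle each listing separately, like an Iterator, producing one row per business."""
    rows = []
    for listing in search_results:
        # Missing fields become empty strings so the columns stay aligned.
        rows.append([str(listing.get(field, "")) for field in FIELDS])
    return rows


results = [
    {"title": "Example Salon", "address": "1 Main St", "phone": "+1 555-0100"},
    {"title": "Another Salon", "address": "2 Oak Ave"},  # no phone listed
]
rows = to_lead_rows(results)
# [['Example Salon', '1 Main St', '+1 555-0100'], ['Another Salon', '2 Oak Ave', '']]
```

Filling gaps with empty strings matters because some listings have no phone number, and a missing value should not shift the address into the phone column.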

Connecting the 4th Module

At this point, we are retrieving the local leads, but we still need at least one more module to store this data.

Recall that our spreadsheet has a second sheet named “Salon Leads”; this module writes the leads’ data there.

The module will be ‘Google Sheets ➡ Add a Row’.

In our leads sheet, we store the business names, addresses, and phone numbers.

So, in our last module, we map the data from the Iterator to add a row in our “Salon Leads” sheet.
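“Add a Row” appends one line per Iterator bundle. As a stand-in for the “Salon Leads” sheet, this Python sketch appends rows to an in-memory CSV file; the header labels are assumed to match the columns described earlier, and `append_rows` is a hypothetical helper, not a Google Sheets API call.

```python
import csv
import io
from typing import List

# Assumed column labels for the "Salon Leads" sheet.
HEADER = ["Business Name", "Address", "Phone Number"]


def append_rows(buffer: io.StringIO, rows: List[List[str]], write_header: bool) -> None:
    """Append one CSV line per lead, mirroring one 'Add a Row' per bundle."""
    writer = csv.writer(buffer)
    if write_header:
        writer.writerow(HEADER)
    writer.writerows(rows)


out = io.StringIO()
append_rows(out, [["Example Salon", "1 Main St", "+1 555-0100"]], write_header=True)
```

The key point carried over to the Make module is column order: each mapped value must land under the matching header.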

Save the scenario, and let’s test it.

What’s the Location Parameter & How Is It Used

The “location” parameter in the API setup uses GPS coordinates to specify where to apply your search query. Its value combines latitude, longitude, and a zoom level to refine the search area on Google Maps. For example, if you are looking for data in Austin, Texas, you might use coordinates such as 30.3073477,-98.0853974,10z. Here’s a breakdown:

- Latitude (30.3073477) and Longitude (-98.0853974): These represent the geographical coordinates that define the precise point on the Earth’s surface.

- Zoom level (10z): This parameter determines how far the map is zoomed in or out. Higher numbers mean a closer, more detailed view, while lower numbers show a broader area.

You need to define this location value for every query (business category) you enter in the “User Input” sheet.
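Since this value has to be typed correctly in every row, it can help to validate it before the scenario runs. A minimal Python sketch, assuming exactly the latitude,longitude,zoom format of the Austin example (no prefix, zoom suffixed with “z”); `parse_location` and `format_location` are hypothetical helpers, not part of Make or Scrapingdog.

```python
from typing import NamedTuple


class MapLocation(NamedTuple):
    latitude: float
    longitude: float
    zoom: float


def parse_location(value: str) -> MapLocation:
    """Split a 'lat,lng,zoomz' string such as '30.3073477,-98.0853974,10z'."""
    lat_text, lng_text, zoom_text = value.split(",")
    zoom_text = zoom_text.strip()
    if not zoom_text.endswith("z"):
        raise ValueError(f"zoom must end with 'z': {zoom_text!r}")
    lat, lng = float(lat_text), float(lng_text)
    # Latitude runs from -90 to 90 and longitude from -180 to 180.
    if not (-90 <= lat <= 90 and -180 <= lng <= 180):
        raise ValueError("latitude/longitude out of range")
    return MapLocation(lat, lng, float(zoom_text[:-1]))


def format_location(loc: MapLocation) -> str:
    """Rebuild the string, e.g. when generating rows for the input sheet."""
    return f"{loc.latitude},{loc.longitude},{loc.zoom:g}z"


austin = parse_location("30.3073477,-98.0853974,10z")
```

A check like this catches swapped coordinates or a missing “z” before they cost you API credits on an empty or wrong search.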

Automate The Process

Every scenario in Make.com can be scheduled to run at a specific time or at a regular interval.

Toggle the “Every 15 minutes” scheduling switch on (green); this opens options to customize the schedule.

Here, you can set your scenario to run whenever you want.

Once the scenario is scheduled, you can add a module that emails you every time leads are processed. Besides email, you can send alerts via platforms like Slack.
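The interval you pick also affects your monthly operations budget. This quick Python calculation assumes a 30-day month and that every scheduled run costs at least one operation for the trigger check (an assumption; check Make’s pricing documentation for how operations are counted). The 1,000 figure is the free-plan allowance mentioned at the start of this post.

```python
def runs_per_month(every_minutes: int, days: int = 30) -> int:
    """Number of scheduled runs in `days` days for a fixed interval."""
    minutes_in_period = days * 24 * 60
    return minutes_in_period // every_minutes


FREE_OPERATIONS = 1000  # free-plan allowance quoted earlier in this post

# Assumption: each run uses at least one operation, even with no new rows.
for interval in (15, 60, 1440):
    runs = runs_per_month(interval)
    print(interval, runs, runs <= FREE_OPERATIONS)
# 15 2880 False
# 60 720 True
# 1440 30 True
```

Under that assumption, a 15-minute schedule alone would exceed the free tier, so an hourly or daily schedule is a safer starting point.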
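In Make, you would simply add a Slack or email module. To show what such an alert boils down to, here is a Python sketch that builds (but does not send) a POST to a Slack incoming webhook, which accepts a JSON body with a `text` field. The webhook URL is a placeholder and `build_slack_alert` is a hypothetical helper.

```python
import json
import urllib.request

# Placeholder: Slack generates a real incoming-webhook URL when you create one.
WEBHOOK_URL = "https://hooks.slack.com/services/XXX/YYY/ZZZ"


def build_slack_alert(lead_count: int, sheet_name: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a short text message."""
    payload = {"text": f"{lead_count} new leads added to '{sheet_name}'."}
    return urllib.request.Request(
        WEBHOOK_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_slack_alert(3, "Salon Leads")
# To actually send it with a real webhook URL: urllib.request.urlopen(request)
```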

Conclusion

You can use Scrapingdog’s dedicated APIs with Make.com and Google Sheets to automate various tasks. For instance, the Google Search API can be integrated to build an SEO tool that provides real-time data from search results.

Also, check out our other dedicated APIs to streamline your data extraction and build tools that use real-time data!