Finding all the URLs on a domain can be tricky!

If you want a list of all the pages on a website, this article is for you.

We’ll look at four different methods for doing this.

By the end of this read, you’ll understand how web scraping and crawling can help, and you’ll have practical techniques to find all the URLs on a domain efficiently.

Let’s get started!

Find All URLs On A Domain’s Website

Let’s explore some effective ways.

The methods we will cover range from a simple Google search and reading sitemaps & robots.txt to using SEO crawling tools and, finally, building your own script with Python.

These methods are easy to use, and anyone can leverage them to find all the URLs on a website.

Google search technique

A simple and fast method to find URLs is Google Search. You can enter a specific search query to locate website pages.

However, not all pages may be included in the search results. Google may exclude certain pages from its index for reasons such as duplicate content or a noindex directive set by the site owner.

Sitemaps and robots.txt

Examining the website’s sitemap and robots.txt file can provide valuable insights for those comfortable with delving into technical details.

These files contain important information about the website’s pages: the sitemap lists the URLs the owner wants search engines to find, and robots.txt lists the paths crawlers are asked to stay out of.

One challenge is that the domain may have multiple sitemaps, and some listed pages may return a 302 redirect by the time you check them.

Further, if the site uses a plugin like RankMath or Yoast and some pages are not self-canonical, i.e., Page A has a canonical tag pointing to Page B, the plugin removes Page A from the sitemap.

SEO crawling tools

If you want a straightforward and hassle-free solution, you should try using an SEO crawling tool.

Many of these tools are easy to use and provide detailed insights, but there’s a catch: using them extensively often comes with a cost.

However, if you have a smaller website with fewer than 500 pages, you can find free SEO spider tools that offer plenty of functionality.

Custom scripting

For those comfortable with coding, creating a custom script offers the most control and potentially the most comprehensive results.

For example, you could target only URLs containing a certain keyword or exclude specific directories. However, it requires technical expertise and time investment. If you’re up for the challenge, creating a script can be rewarding.

Now, let’s explore each of these methods in detail and see how you can use them to find all URLs on a website.

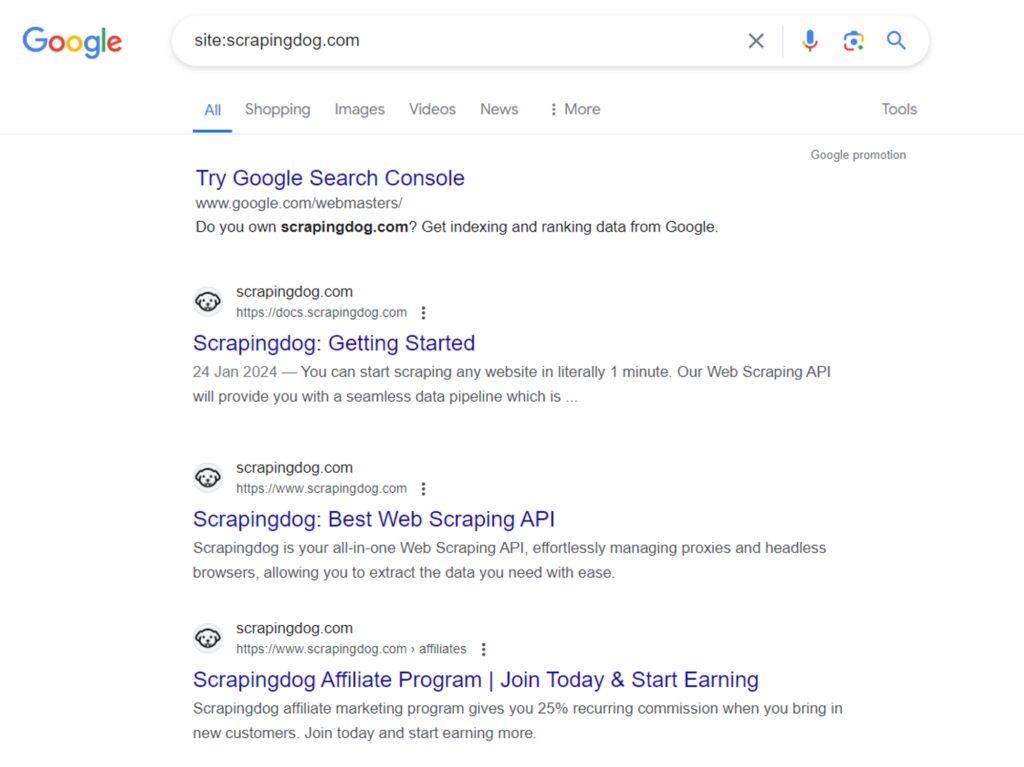

Method 1: Google Site Search

One of the quickest ways to find URLs on a website is with Google’s site search feature. Here’s how to use it:

- Go to Google.com

- In the search bar, type site:example.com (replace example.com with the website you want to search).

- Hit enter to see the list of indexed pages for that website.

Let’s find all the pages on scrapingdog.com. Search for site:scrapingdog.com on Google and hit enter.

Google will return a list of indexed pages for a specific domain.

However, as discussed earlier, Google may exclude certain pages because they are duplicates, low quality, or inaccessible.

So, the “site:” search query is best for getting rough estimates, but it may not be the most accurate measure.
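If you run site: searches often, it can help to generate the search URL instead of typing it. The snippet below is a minimal sketch using only the standard library; the helper name site_search_url is hypothetical, and the inurl:blog keyword is just an example of narrowing the query.

```python
from urllib.parse import urlencode


def site_search_url(domain, keyword=None):
    """Build a Google search URL for a site: query, optionally narrowed by a keyword."""
    query = f"site:{domain}"
    if keyword:
        query += f" {keyword}"
    # urlencode percent-encodes the colon and turns spaces into "+"
    return "https://www.google.com/search?" + urlencode({"q": query})


print(site_search_url("scrapingdog.com"))
print(site_search_url("scrapingdog.com", "inurl:blog"))
```

Opening the printed URL in a browser gives the same result list as typing the query by hand.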

Using Scrapingdog to find URLs

While Google search is easy, what if you need the page URLs and titles? Manually copying them is inefficient & prone to error!

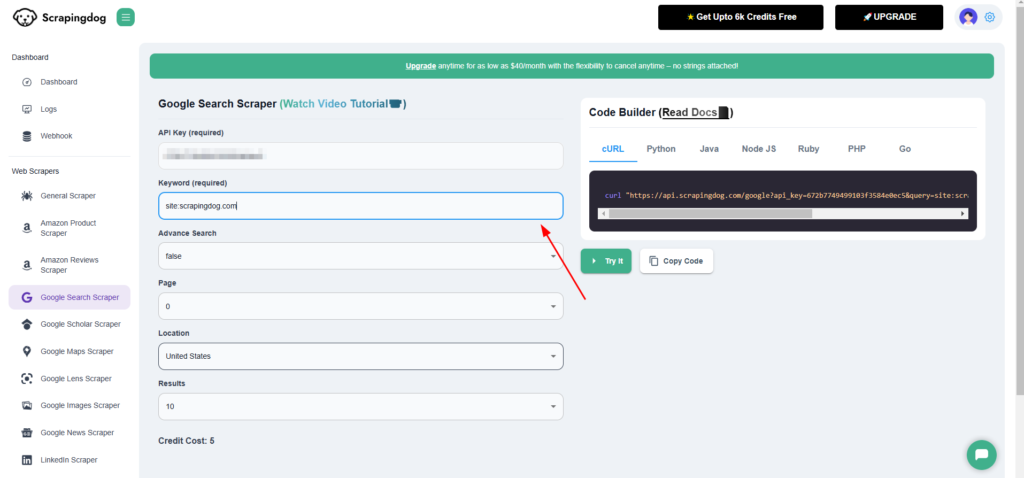

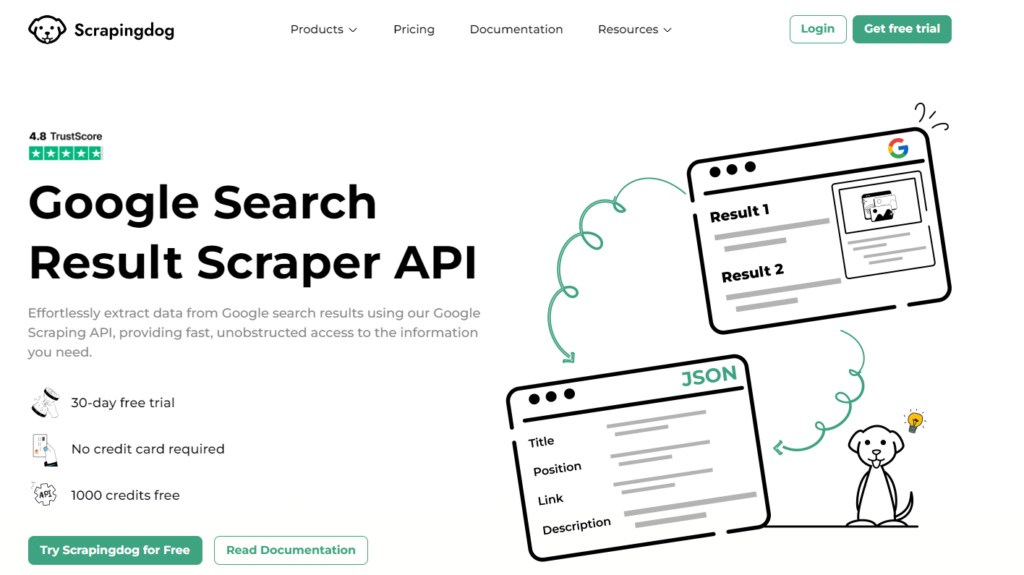

A better approach is to use the Scrapingdog Google Search Result Scraper API. It returns search results as structured JSON. Scrapingdog offers a free trial with 1000 free credits to try it. You can register here.

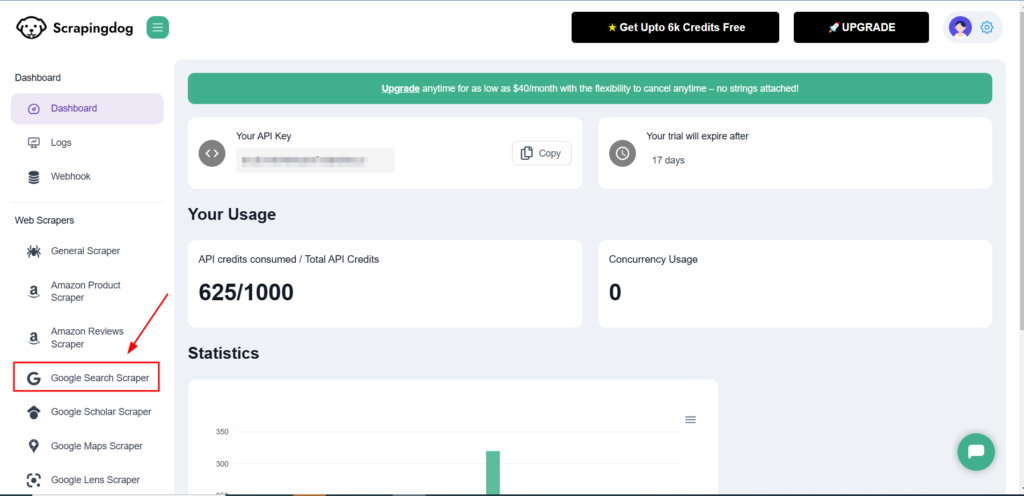

After registering, you’ll see your dashboard with your API key and remaining credits. Ignore the key for now. On the left, find “Google Scraper” and click it.

For the query site:scrapingdog.com, the API response looks like this:

```json
{
  "menu_items": [
    {
      "title": "Books",
      "link": "https://www.google.com/search?q=site:scrapingdog.com&sca_esv=7de4e3da6f1aa72e&gl=us&hl=en&gbv=1&tbm=bks&source=lnms&sa=X&ved=0ahUKEwjv79-0ieuJAxWPk68BHU0QFJcQ_AUIBigB",
      "position": 1
    },
    {
      "title": "Shopping",
      "link": "https://www.google.com/url?q=/search%3Fq%3Dsite:scrapingdog.com%26sca_esv%3D7de4e3da6f1aa72e%26gl%3Dus%26hl%3Den%26gbv%3D1%26tbm%3Dshop%26source%3Dlnms%26ved%3D1t:200713%26ictx%3D111&opi=89978449&sa=U&ved=0ahUKEwjv79-0ieuJAxWPk68BHU0QFJcQiaAMCAcoAg&usg=AOvVaw2DyOm8HRqmuoH-OBzjAPrM",
      "position": 2
    },
    {
      "title": "Images",
      "link": "https://www.google.com/search?q=site:scrapingdog.com&sca_esv=7de4e3da6f1aa72e&gl=us&hl=en&gbv=1&tbm=isch&source=lnms&sa=X&ved=0ahUKEwjv79-0ieuJAxWPk68BHU0QFJcQ_AUICCgD",
      "position": 3
    },
    {
      "title": "Maps",
      "link": "https://www.google.com/url?q=https://maps.google.com/maps%3Fq%3Dsite:scrapingdog.com%26gl%3Dus%26hl%3Den%26gbv%3D1%26um%3D1%26ie%3DUTF-8%26ved%3D1t:200713%26ictx%3D111&opi=89978449&sa=U&ved=0ahUKEwjv79-0ieuJAxWPk68BHU0QFJcQiaAMCAkoBA&usg=AOvVaw2z5wwgtEIdcmS7Su7PcSb1",
      "position": 4
    },
    {
      "title": "Videos",
      "link": "https://www.google.com/search?q=site:scrapingdog.com&sca_esv=7de4e3da6f1aa72e&gl=us&hl=en&gbv=1&tbm=vid&source=lnms&sa=X&ved=0ahUKEwjv79-0ieuJAxWPk68BHU0QFJcQ_AUICigF",
      "position": 5
    },
    {
      "title": "News",
      "link": "https://www.google.com/search?q=site:scrapingdog.com&sca_esv=7de4e3da6f1aa72e&gl=us&hl=en&gbv=1&tbm=nws&source=lnms&sa=X&ved=0ahUKEwjv79-0ieuJAxWPk68BHU0QFJcQ_AUICygG",
      "position": 6
    }
  ],
  "organic_results": [
    {
      "title": "Scrapingdog: Documentation",
      "displayed_link": "https://docs.scrapingdog.com",
      "snippet": "Nov 7, 2024 · You can start scraping any website in literally 1 minute. Our Web Scraping API will provide you with a seamless data pipeline that is almost 99.99% unbreakable.",
      "link": "https://docs.scrapingdog.com/",
      "rank": 1
    },
    {
      "title": "Scrapingdog: Best Web Scraping API",
      "displayed_link": "https://www.scrapingdog.com",
      "snippet": "Scrapingdog is your all-in-one Web Scraping API, effortlessly managing proxies and headless browsers, allowing you to extract the data you need with ease.",
      "link": "https://www.scrapingdog.com/",
      "rank": 2
    },
    {
      "title": "Terms of Service - Scraping Dog",
      "displayed_link": "https://www.scrapingdog.com › terms",
      "snippet": "Apr 3, 2023 · Try Scrapingdog for Free! Get 1000 free credits to spin the API. No credit card required!",
      "link": "https://www.scrapingdog.com/terms/",
      "rank": 3
    },
    {
      "title": "Scrapingdog Blog's - Master The Art of Web Scraping",
      "displayed_link": "https://www.scrapingdog.com › blog",
      "snippet": "Designed with you in mind, our web scraping tutorials cater to any skill level. Each guide is straightforward and packed with useful insights.",
      "link": "https://www.scrapingdog.com/blog/",
      "rank": 4
    },
    {
      "title": "Scrapingdog Affiliate Program | Get 25% Recurring Commission",
      "displayed_link": "https://www.scrapingdog.com › affiliates",
      "snippet": "We welcome you to our our affiliate program where we help you to earn a passive income. Scrapingdog is a web scraping API through which you can scrape any ...",
      "link": "https://www.scrapingdog.com/affiliates/",
      "rank": 5
    },
    {
      "title": "Privacy Policy - Scraping Dog",
      "displayed_link": "https://www.scrapingdog.com › privacy",
      "snippet": "Apr 3, 2023 · We collect information by fair and lawful means, with your knowledge and consent. We also let you know why we're collecting it and how it will ...",
      "link": "https://www.scrapingdog.com/privacy/",
      "rank": 6
    },
    {
      "title": "About - Scraping Dog",
      "displayed_link": "https://www.scrapingdog.com › about",
      "snippet": "Scrapingdog is dedicated to transforming how businesses extract web data, making every data point easily accessible & actionable.",
      "link": "https://www.scrapingdog.com/about/",
      "rank": 7
    },
    {
      "title": "Buy Premium Datacenter Proxies | Free Trial - Scrapingdog",
      "displayed_link": "https://www.scrapingdog.com › datacenter-proxies",
      "snippet": "Rating 4.8 (489) · $40.00 to $1,000.00 · Business/ProductivityAccess datacenter proxies with a pool of shared and dedicated proxies for data extraction. No credit card required. 1000 credits free.",
      "link": "https://www.scrapingdog.com/datacenter-proxies/",
      "rank": 8
    },
    {
      "title": "An Economical Alternative To ScrapingBee - Scrapingdog",
      "displayed_link": "https://www.scrapingdog.com › scrapingbee-alternative",
      "snippet": "Rating 4.8 (481) Scrapingdog is a better and more powerful alternative to Scrapingbee. Save almost 40% while using Scrapingdog.",
      "link": "https://www.scrapingdog.com/scrapingbee-alternative/",
      "rank": 9
    },
    {
      "title": "Web Scraping Common Questions - Scrapingdog",
      "displayed_link": "https://www.scrapingdog.com › webscraping-problems",
      "snippet": "Here you will find answers to the most common problems people may encounter while scraping data from websites.",
      "link": "https://www.scrapingdog.com/webscraping-problems/",
      "rank": 10
    }
  ],
  "pagination": {
    "page_no": {}
  }
}
```
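To turn a response like the one above into a plain list of pages, you only need the organic_results array. Here is a minimal sketch: the sample string is a trimmed copy of the response shown above, and the helper name extract_pages is hypothetical.

```python
import json

# Trimmed copy of the response above, kept short for illustration
SAMPLE_RESPONSE = """
{
  "organic_results": [
    {"title": "Scrapingdog: Documentation", "link": "https://docs.scrapingdog.com/", "rank": 1},
    {"title": "Scrapingdog: Best Web Scraping API", "link": "https://www.scrapingdog.com/", "rank": 2}
  ]
}
"""


def extract_pages(data):
    """Return (rank, title, link) tuples for every organic result that has a link."""
    pages = []
    for result in data.get("organic_results", []):
        link = result.get("link")
        if link:
            pages.append((result.get("rank"), result.get("title"), link))
    return pages


pages = extract_pages(json.loads(SAMPLE_RESPONSE))
for rank, title, link in pages:
    print(rank, title, link)
```

Using .get() with defaults keeps the helper from failing if a result is missing a field.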

You can also automate the process of finding all URLs via Google search using a no-code automation tool like Make or n8n.

I have built one automation that you can use in Make using Scrapingdog’s Google Search API.

I have created a GIF to show how the setup works. To keep the GIF small, I have fetched only 10 results; you can raise that to 100.

This automation uses a Google Sheet: you just type in the domain name, and the sheet gives you 100 URLs from that domain.

Here is the blueprint for this automation. Import it into your Make.com account and add your Scrapingdog API key.

Method 2: Sitemaps and robots.txt

Another way to find website URLs is by using website monitoring software to check the site’s sitemap and robots.txt files. While this approach is more technical, it can yield more detailed results. These files offer valuable information about a website’s structure and content. Let’s explore how sitemaps and robots.txt files can help us uncover all a website’s URLs.

Using sitemaps

A sitemap is an XML file listing all important website pages for search engine indexing. Webmasters use it to help search engines understand the website’s structure and content for better indexing.

Most well-maintained websites have a sitemap: it helps search engines discover pages and is considered good SEO practice. To learn how to create and optimize one effectively, read the article for practical tips.

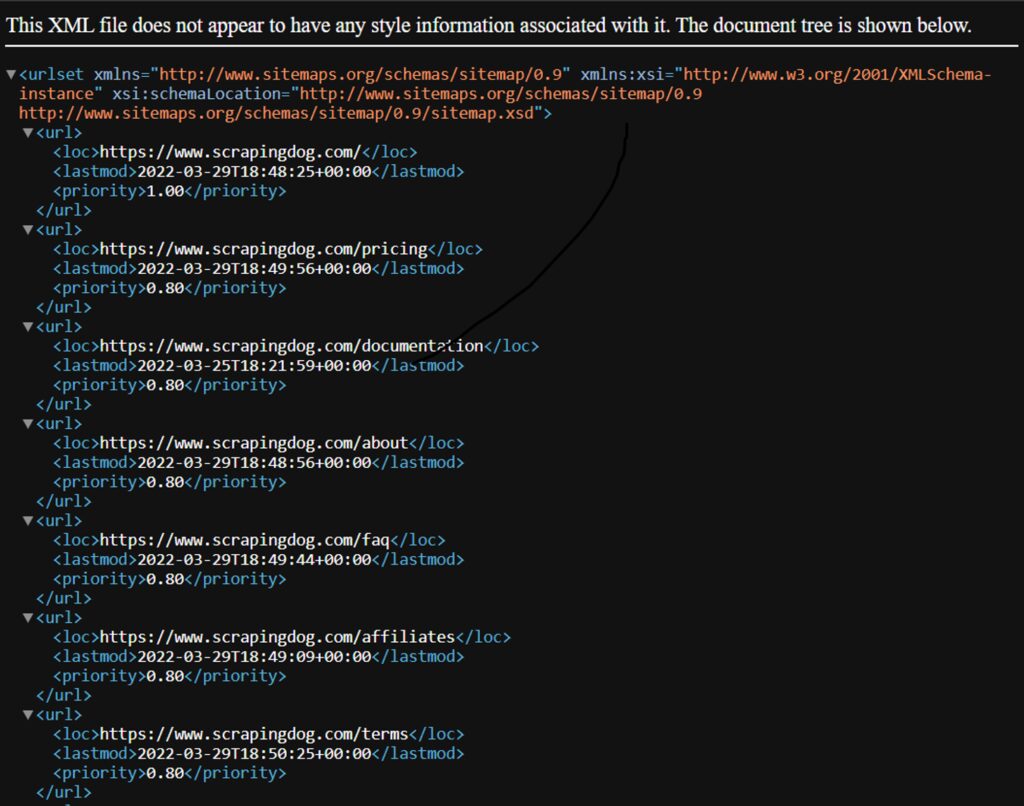

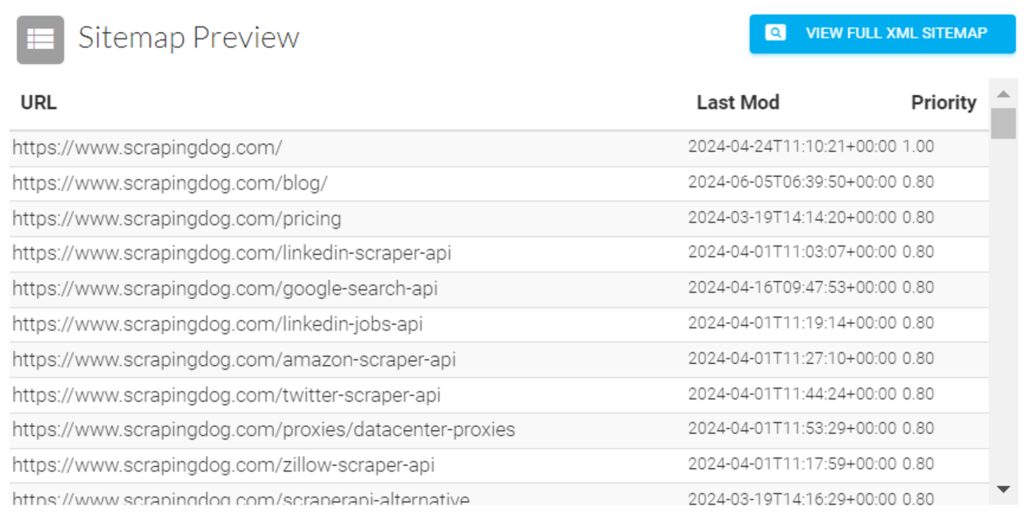

Here’s what a standard sitemap looks like:

The <loc> element specifies the page URL, <lastmod> indicates the last modification time, and <priority> signals the page’s importance relative to other pages on the same site, on a scale from 0.0 to 1.0. Treat <priority> as a hint only: search engines are free to ignore it.
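To make the structure concrete, here is a minimal sketch that parses a small sitemap with Python’s standard library. The sample XML and its example.com URLs are made up for illustration, and the helper name parse_sitemap is hypothetical; the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Illustrative sitemap; the URLs and dates are invented
SAMPLE_SITEMAP = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://www.example.com/</loc>
    <lastmod>2024-01-15</lastmod>
    <priority>1.0</priority>
  </url>
  <url>
    <loc>https://www.example.com/blog/</loc>
    <lastmod>2024-02-01</lastmod>
  </url>
</urlset>"""

# Sitemap elements live in this XML namespace
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_sitemap(xml_text):
    """Return one dict per <url> entry; missing optional fields become None."""
    root = ET.fromstring(xml_text)
    entries = []
    for url in root.findall("sm:url", NS):
        entries.append({
            "loc": url.findtext("sm:loc", namespaces=NS),
            "lastmod": url.findtext("sm:lastmod", namespaces=NS),
            "priority": url.findtext("sm:priority", namespaces=NS),
        })
    return entries


for entry in parse_sitemap(SAMPLE_SITEMAP):
    print(entry)
```

Only <loc> is required by the protocol, which is why the second entry comes back with no priority.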

Now, where to find a sitemap? Check for /sitemap.xml on the website (e.g., https://www.scrapingdog.com/sitemap.xml)

Websites can have multiple sitemaps in various locations, including: /sitemap.xml.gz, /sitemap_index.xml, /sitemap_index.xml.gz, /sitemap.php, /sitemapindex.xml, /sitemap.gz.
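Checking each of these locations by hand gets tedious, so it can be handy to generate the candidate URLs for a domain. A minimal sketch, using the paths listed above; the helper name candidate_sitemap_urls is hypothetical.

```python
from urllib.parse import urljoin

# Common sitemap locations mentioned in this article
COMMON_SITEMAP_PATHS = [
    "/sitemap.xml",
    "/sitemap.xml.gz",
    "/sitemap_index.xml",
    "/sitemap_index.xml.gz",
    "/sitemap.php",
    "/sitemapindex.xml",
    "/sitemap.gz",
]


def candidate_sitemap_urls(base_url):
    """Return the full URL of every common sitemap location for a site."""
    return [urljoin(base_url, path) for path in COMMON_SITEMAP_PATHS]


for url in candidate_sitemap_urls("https://www.scrapingdog.com"):
    print(url)
```

You can then request each candidate and keep the ones that respond successfully.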

Many websites list their sitemaps in the robots.txt file, which we are going to discuss next.

Using robots.txt

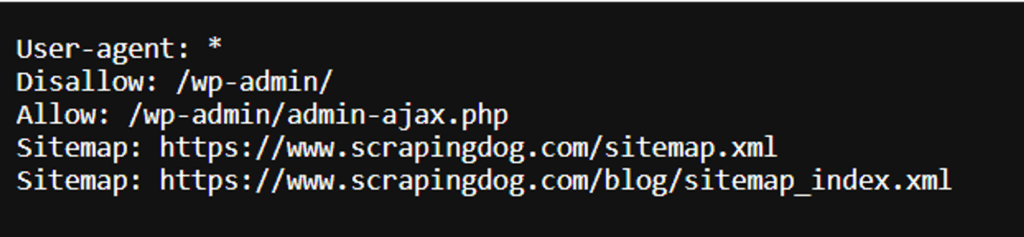

The robots.txt file tells search engine crawlers which paths they may crawl and which they should stay out of. It can also specify the location of the website’s sitemap. The file is located at the /robots.txt path at the root of the domain (e.g., https://www.scrapingdog.com/robots.txt).

Here’s an example of a robots.txt file. Some routes are disallowed for crawling. The sitemap location is also present.

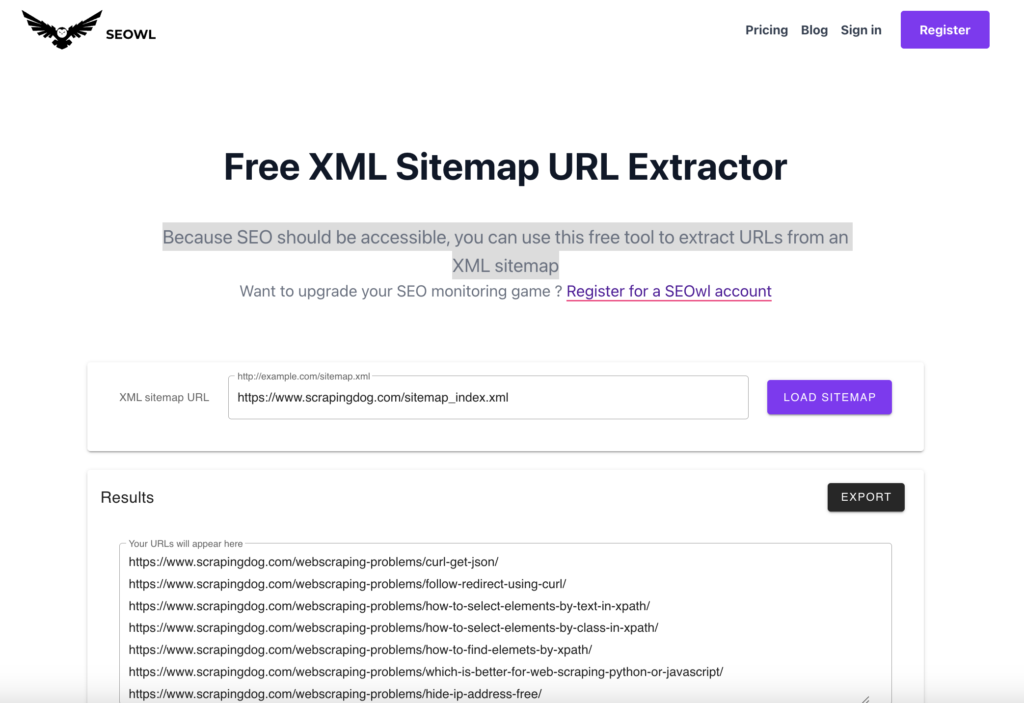

You need to visit both sitemaps and collect all the URLs in them. For smaller sitemaps, you can manually copy the URL from each <loc> tag. For larger sitemaps, consider using an online tool to convert the XML to a more manageable format, such as CSV. Free tools are available, such as the Seowl sitemap extractor.
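Python’s standard library can read robots.txt for you. The sketch below parses a made-up robots.txt (the example.com paths are illustrative, not taken from any real site) and pulls out the sitemap locations and the crawl rules.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://www.example.com/sitemap.xml
Sitemap: https://www.example.com/blog/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(SAMPLE_ROBOTS.splitlines())

# site_maps() (Python 3.8+) returns the listed sitemap URLs, or None if there are none
sitemaps = parser.site_maps()
print(sitemaps)

# can_fetch() tells you whether a given user agent may crawl a URL
print(parser.can_fetch("*", "https://www.example.com/admin/login"))
print(parser.can_fetch("*", "https://www.example.com/blog/"))
```

For a live site, you could call parser.set_url(...) followed by parser.read() instead of parse() to download the file first.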

Method 3: SEO Crawling Tools

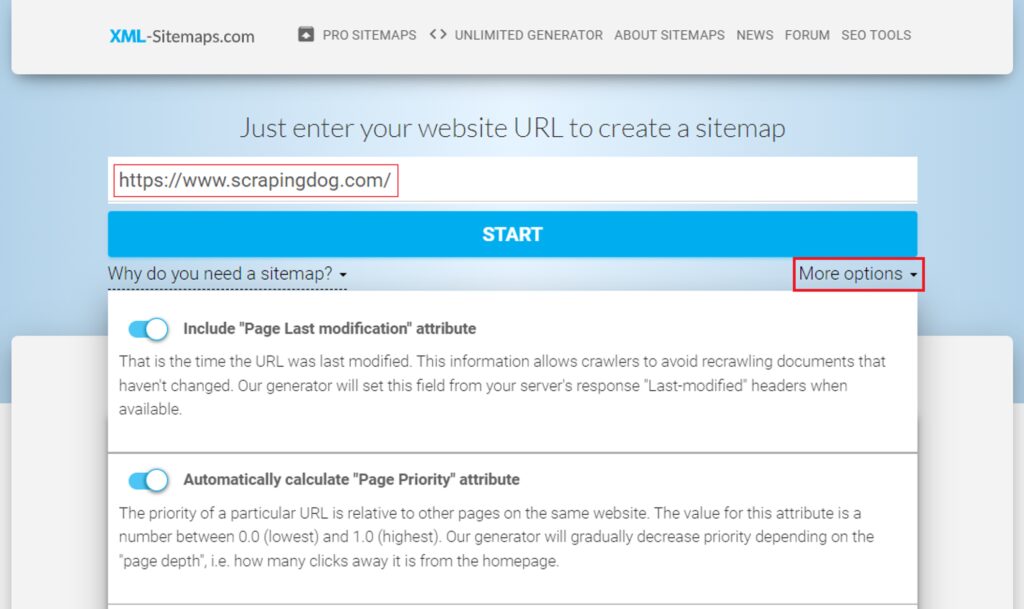

Now let’s see how SEO crawling tools help us find all website pages. There are various SEO crawlers on the market; we’ll explore the free tool XML-Sitemaps.com. Enter your URL and click “START” to create a sitemap. This tool is suitable when you need to quickly create a sitemap for a small website (up to 500 pages).

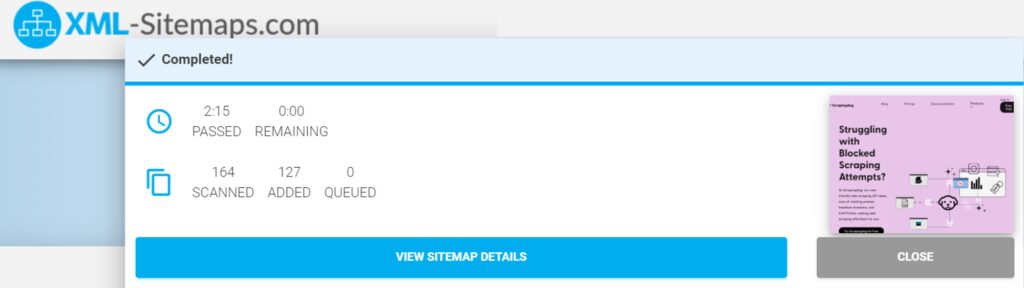

The process will start, and you will see the number of pages scanned (167 in this case) and the number of pages indexed (127 in this case). Note that “indexed” here most likely means the pages the tool added to the generated sitemap, so treat it as the tool’s own count rather than a measure of what Google Search has indexed.

You can download the XML sitemap file or receive it via email and put it on your website afterward.

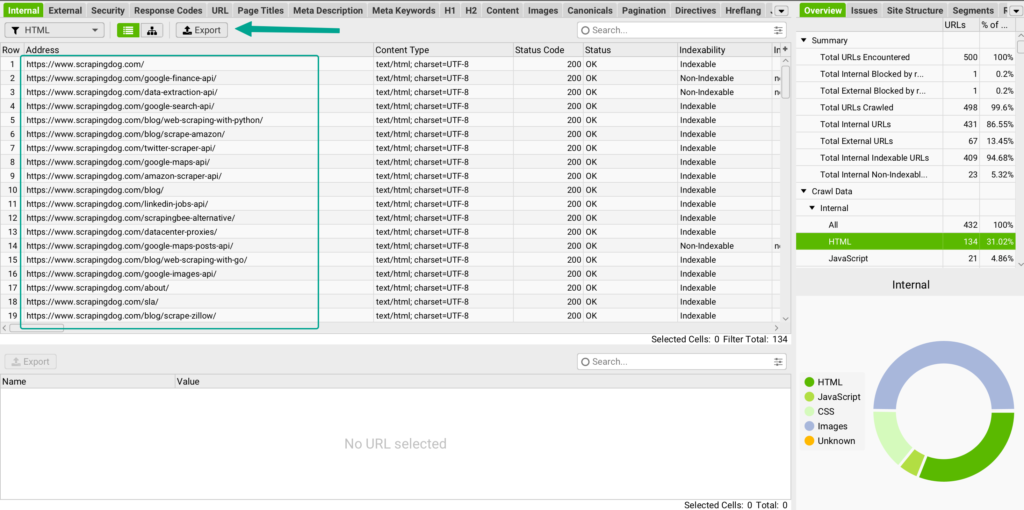

Screaming Frog SEO Spider is another tool with a free version that can help you export URLs from a domain.

Keep in mind that the free version lets you crawl up to 500 URLs from a single domain.

To use it, you have to download the software to your machine; unlike a SaaS tool, it crawls the domain from your own setup.

The paid plan comes with a license key that you can buy.

You can export the list as a CSV file and save it in a folder.

Method 4: Building Your Crawler Script

If you’re a developer, you can build your own crawler script to find all URLs on a website.

This method offers more flexibility and control over crawling compared to previous methods. It allows you to customize behavior, handle dynamic content, and extract URLs based on specific patterns or criteria.

You can use any language for web crawling, such as Python, JavaScript, or Golang. In this example, we will focus on Python.

Its simplicity and rich ecosystem make it a popular choice for web crawling tasks.

Python offers a vast range of libraries and frameworks specifically designed for web crawling and data extraction, including popular ones like Requests, BeautifulSoup, and Scrapy.
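As a small illustration of that control, here is a minimal sketch of filtering a URL list by keyword and by excluded directories. The function name filter_urls, its parameters, and the sample URLs are hypothetical.

```python
from urllib.parse import urlparse


def filter_urls(urls, must_contain=None, exclude_prefixes=()):
    """Keep URLs that contain a keyword and do not sit under an excluded directory."""
    kept = []
    for url in urls:
        path = urlparse(url).path
        if must_contain and must_contain not in url:
            continue
        if any(path.startswith(prefix) for prefix in exclude_prefixes):
            continue
        kept.append(url)
    return kept


sample_urls = [
    "https://www.example.com/blog/web-scraping-python/",
    "https://www.example.com/blog/tag/python/",
    "https://www.example.com/pricing/",
]

# Only blog URLs mentioning "python", skipping tag archive pages
print(filter_urls(sample_urls, must_contain="python", exclude_prefixes=("/blog/tag/",)))
```

The same function works on the URL lists produced by the scripts later in this section.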

Using Python libraries

First, we’ll create a Python script using BeautifulSoup and Requests to extract URLs from a website sitemap.

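Install the libraries using pip. The script asks BeautifulSoup for its "xml" parser, which relies on lxml, so install that as well:

```
pip install beautifulsoup4 requests lxml
```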

Next, let’s create a Python file (in my case, main.py) and import the necessary dependencies:

```python
import requests
from bs4 import BeautifulSoup as bs
import csv
```

Next, define the extract_sitemap_data function. The extract_sitemap_data function takes the URL of a sitemap as input and returns a list of URL entries found in the sitemap.

This function uses BeautifulSoup to parse the XML content of the sitemap.

It recursively extracts data from nested sitemaps, if any, and also parses the URL entries from the current sitemap.

```python
def extract_sitemap_data(url):
    if not url:
        raise ValueError("Please provide a valid sitemap URL.")

    try:
        response = requests.get(url)
        # Raise an exception for unsuccessful requests (4xx and 5xx status codes)
        response.raise_for_status()
    except (ValueError, requests.exceptions.RequestException) as e:
        print(f"Error fetching sitemap data from {url}: {e}")
        return []

    soup = bs(response.content, "xml")

    # Extract data from nested sitemaps
    url_entries = []
    for sitemap in soup.find_all("sitemap"):
        nested_url = sitemap.find("loc").text
        url_entries.extend(extract_sitemap_data(nested_url))

    # Extract data from current sitemap URLs
    url_entries.extend(_parse_url_entries(soup))

    return url_entries
```

Next, the _parse_url_entries function is defined. It takes a BeautifulSoup object representing the XML content of a sitemap and extracts URL entries along with their attributes such as location (loc), last modified time (lastmod), and priority (priority). It returns a list of URL entries.

```python
def _parse_url_entries(soup):
    url_entries = []
    attributes = ["loc", "lastmod", "priority"]

    for url in soup.find_all("url"):
        entry = []
        for attr in attributes:
            element = url.find(attr)
            entry.append(element.text if element else "n/a")
        url_entries.append(entry)

    return url_entries
```

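The process_sitemaps function takes a list of sitemap URLs, extracts the entries from each one, and passes the combined list to save_to_csv, which writes it to a CSV file.

```python
def process_sitemaps(sitemaps):
    all_entries = []

    for sitemap_url in sitemaps:
        entries = extract_sitemap_data(sitemap_url)
        all_entries.extend(entries)

    # Save the extracted data to a CSV file
    save_to_csv(all_entries)
```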

Here’s the complete code:

```python
import requests
from bs4 import BeautifulSoup as bs
import csv


def extract_sitemap_data(url):
    if not url:
        raise ValueError("Please provide a valid sitemap URL.")

    try:
        response = requests.get(url)
        # Raise an exception for unsuccessful requests (4xx and 5xx status codes)
        response.raise_for_status()
    except (ValueError, requests.exceptions.RequestException) as e:
        print(f"Error fetching sitemap data from {url}: {e}")
        return []

    soup = bs(response.content, "xml")

    # Extract data from nested sitemaps
    url_entries = []
    for sitemap in soup.find_all("sitemap"):
        nested_url = sitemap.find("loc").text
        url_entries.extend(extract_sitemap_data(nested_url))

    # Extract data from current sitemap URLs
    url_entries.extend(_parse_url_entries(soup))

    return url_entries


def _parse_url_entries(soup):
    url_entries = []
    attributes = ["loc", "lastmod", "priority"]

    for url in soup.find_all("url"):
        entry = []
        for attr in attributes:
            element = url.find(attr)
            entry.append(element.text if element else "n/a")
        url_entries.append(entry)

    return url_entries


def process_sitemaps(sitemaps):
    all_entries = []

    for sitemap_url in sitemaps:
        entries = extract_sitemap_data(sitemap_url)
        all_entries.extend(entries)

    # Save the extracted data to a CSV file
    save_to_csv(all_entries)


def save_to_csv(data, filename="data.csv"):
    # Append mode: rows from repeated runs accumulate in the same file
    with open(filename, "a", newline="") as csvfile:
        writer = csv.writer(csvfile)
        writer.writerows(data)


sitemaps = [
    "https://www.scrapingdog.com/sitemap.xml",
    "https://www.scrapingdog.com/blog/sitemap_index.xml"
]

process_sitemaps(sitemaps)
```

What if a website doesn’t have a sitemap?

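Although sitemaps are common, some websites don’t publish one at an obvious location, or you may simply not know where it is. No worries!

The third-party Python library ultimate-sitemap-parser can help: given a homepage URL, it looks for sitemaps through robots.txt and a set of commonly used sitemap paths. To install it, run pip install ultimate-sitemap-parser.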

Here’s the code:

import csv

from usp.tree import sitemap_tree_for_homepage

# Retrieve all pages from the sitemap of the given domain

def fetch_pages_from_sitemap(domain):

raw_pages = []

sitemap_tree = sitemap_tree_for_homepage(domain)

for page in sitemap_tree.all_pages():

raw_pages.append(page.url)

return raw_pages

# Filter out duplicate pages and return a list of unique page links

def filter_unique_pages(raw_pages):

unique_pages = []

for page in raw_pages:

if page not in unique_pages:

unique_pages.append(page)

return unique_pages

# Save the list of unique pages to a CSV file

def save_pages_to_csv(pages, filename):

with open(filename, mode="w", newline="") as file:

writer = csv.writer(file)

for page in pages:

writer.writerow([page])

if __name__ == "__main__":

domain = "<https://scrapingdog.com/>"

raw_pages = fetch_pages_from_sitemap(domain)

unique_pages = filter_unique_pages(raw_pages)

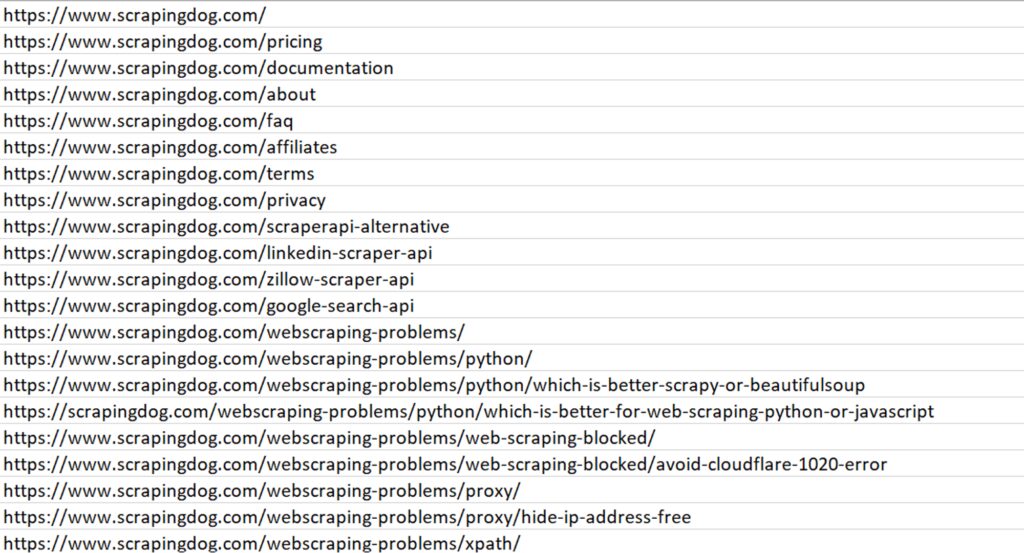

save_pages_to_csv(unique_pages, "data.csv")

The first function fetches the site’s sitemaps with the sitemap_tree_for_homepage function and walks the resulting tree to collect every page listed in them. This results in a list containing all the web pages the library could find.

The second function takes the previously created list and removes all duplicates. This leaves you with a clean list of every unique URL the website is hosting.
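On large sites, checking each page against a growing list gets slow. As an alternative sketch (not the approach used in the script above), dict.fromkeys removes duplicates in one pass while keeping the original order. The helper name unique_urls is hypothetical, and dropping #fragments before comparing is an added assumption about what counts as the same page.

```python
from urllib.parse import urldefrag


def unique_urls(urls):
    """Drop #fragments, then remove duplicates while preserving first-seen order."""
    cleaned = (urldefrag(url).url for url in urls)
    return list(dict.fromkeys(cleaned))


sample = [
    "https://www.example.com/",
    "https://www.example.com/#top",
    "https://www.example.com/blog/",
    "https://www.example.com/",
]
print(unique_urls(sample))
```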

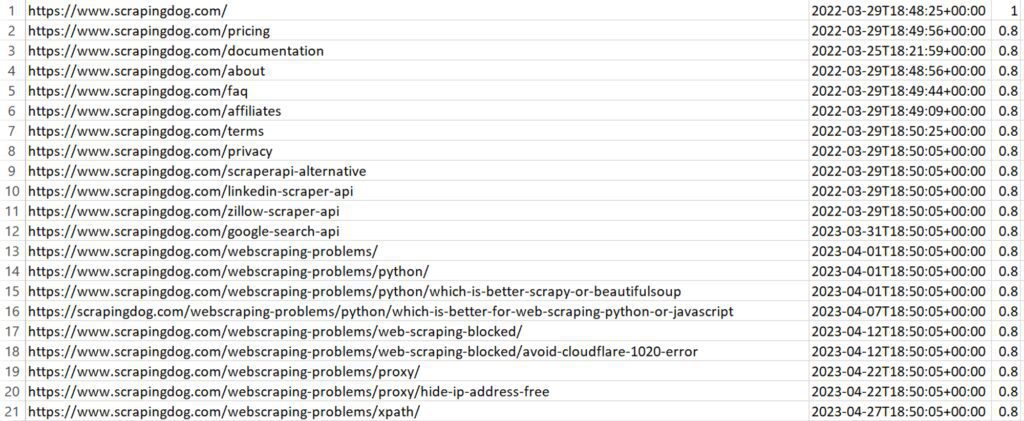

The result is:

This code serves as a great starting point. However, if you’re looking for a more robust solution, you can refer to our detailed guide on Building a Web Crawler in Python.
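For a sense of what the link-following part of such a crawler involves, here is a minimal sketch that extracts same-domain links from a page’s HTML using only the standard library. The class and function names are hypothetical, the sample HTML is made up, and a real crawler would also need fetching, a visited set, and politeness delays.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, resolved against the page URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            self.links.append(urljoin(self.base_url, href))


def internal_links(html, base_url):
    """Return the sorted, de-duplicated links that stay on the same domain."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    domain = urlparse(base_url).netloc
    return sorted({link for link in collector.links if urlparse(link).netloc == domain})


sample_html = """
<a href="/blog/">Blog</a>
<a href="about">About</a>
<a href="https://other-site.example/page">External</a>
<a href="mailto:hello@example.com">Email</a>
"""
print(internal_links(sample_html, "https://www.example.com/"))
```

A crawler repeats this step for each newly found internal link until no unseen URLs remain.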

Using Scrapingdog to send requests

Now that you know how to scrape Google search results, let’s explore a solution that can help you scrape millions of Google pages without being blocked.

We’ll use Scrapingdog Google Search Result Scraper API for this task. This API handles everything from proxy rotation to headers. You just have to send a GET request, and in return, you will get parsed JSON data.

```python
import requests
import json

api_key = "YOUR_API_KEY"
url = "https://api.scrapingdog.com/google/"

params = {
    "api_key": api_key,
    "query": "site:scrapingdog.com",
    "results": 150,
    "country": "in",
    "page": 0
}

response = requests.get(url, params=params)

if response.ok:
    data = response.json()

    # Save the data to a JSON file
    with open('scrapingdog_data.json', 'w') as json_file:
        json.dump(data, json_file, indent=4)

    print("Data saved to scrapingdog_data.json")
else:
    print(f"Request failed with status code: {response.status_code}")
```

The code is simple. We are sending a GET request to https://api.scrapingdog.com/google along with some parameters. For more information on these parameters, you can again refer to the documentation.

Once you run this code, you will get a beautiful JSON response.

What if you need results from a different country?

Google shows different results based on location, but you can adjust for that.

Just change the ‘country’ parameter in the code above. For example, to get results from the UK, use ‘gb’ (its ISO code). You can also extract the desired number of results by changing the ‘results’ parameter.
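To switch countries or result counts without editing the request by hand, you could wrap the parameters in a small helper. This is a minimal sketch: the parameter names come from the request shown earlier, while the function name build_request_url and its default values are assumptions.

```python
from urllib.parse import urlencode

API_URL = "https://api.scrapingdog.com/google/"


def build_request_url(api_key, domain, country="us", results=10, page=0):
    """Build the GET URL for a site: query with the given country and result count."""
    params = {
        "api_key": api_key,
        "query": f"site:{domain}",
        "results": results,
        "country": country,
        "page": page,
    }
    return f"{API_URL}?{urlencode(params)}"


# Same query, once for the UK and once for India
for country in ("gb", "in"):
    print(build_request_url("YOUR_API_KEY", "scrapingdog.com", country=country, results=50))
```

You can pass the resulting URL straight to requests.get.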

What You Can Do With These URLs

You now have a CSV file containing URLs. What can you do with them?

Depending on your objective, you can extract the information you need from each webpage, such as text, images, videos, metadata, or any other relevant content.

Once the data is scraped, you can organize and analyze it. This process could involve storing it in a database, generating reports, or visualizing it to identify trends or patterns.
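As a first step in that kind of analysis, you might count how many URLs fall under each top-level section of the site. The sketch below assumes the URL is in the first column of each CSV row, as in the scripts above; the sample rows and the helper name count_by_section are made up for illustration.

```python
import csv
import io
from collections import Counter
from urllib.parse import urlparse


def count_by_section(rows):
    """Count URLs per first path segment; the root page is counted as '(home)'."""
    counts = Counter()
    for row in rows:
        if not row:
            continue  # skip blank lines
        segments = [s for s in urlparse(row[0]).path.split("/") if s]
        counts[segments[0] if segments else "(home)"] += 1
    return counts


# In practice, replace this with open("data.csv", newline="")
sample_csv = io.StringIO(
    "https://www.example.com/\n"
    "https://www.example.com/blog/\n"
    "https://www.example.com/blog/first-post/\n"
    "https://www.example.com/about/\n"
)

counts = count_by_section(csv.reader(sample_csv))
for section, total in counts.most_common():
    print(section, total)
```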

There are plenty of resources available to assist you in the data extraction process. Below are some informative articles that provide a wealth of information.

1. Web Crawling using Javascript & Nodejs

2. Web Scraping with Javascript and Nodejs

Finally, take a look at Scrapingdog Google API.

This user-friendly web scraping API manages rotating proxies, headless browsers, and CAPTCHAs, making web scraping effortless for you. This allows you to focus on the data and insights you truly need.