TL;DR

- Use Scrapingdog’s API (1k free credits) to fetch Zoopla listing pages.

- Parse with Python (`requests` + `BeautifulSoup`) to extract price, address, description.

- Paginate by increasing the page number; collect rows and export to `zoopla.csv` with pandas.

- Or skip parsing: send `ai_query` + `ai_extract_rules` to get structured JSON directly.

Zoopla is one of the UK’s leading property portals, packed with real estate listings, rental data, and housing market insights. Whether you’re a developer building a property comparison tool or a researcher tracking market trends, accessing this data programmatically can be incredibly useful.

In this guide, we’ll show you how to scrape property listings from Zoopla using Python, covering everything from navigating pagination to extracting key details like price, location, and property type, all while being mindful of best scraping practices. If you’re just starting out with web scraping, I highly recommend reading Web Scraping with Python. It’s a great resource for building a strong foundation.

Requirements To Scrape Data From Zoopla

I assume that you have already installed Python on your machine. If not, you can download it from python.org. Now, create a folder with any name you like. I am naming the folder zoopla.

    mkdir zoopla

Install these three libraries inside this folder (for example, with `pip install requests beautifulsoup4 pandas`).

- `requests` for making an HTTP connection with the target website.

- `BeautifulSoup` for parsing the raw data.

- `pandas` for storing data in a CSV file.

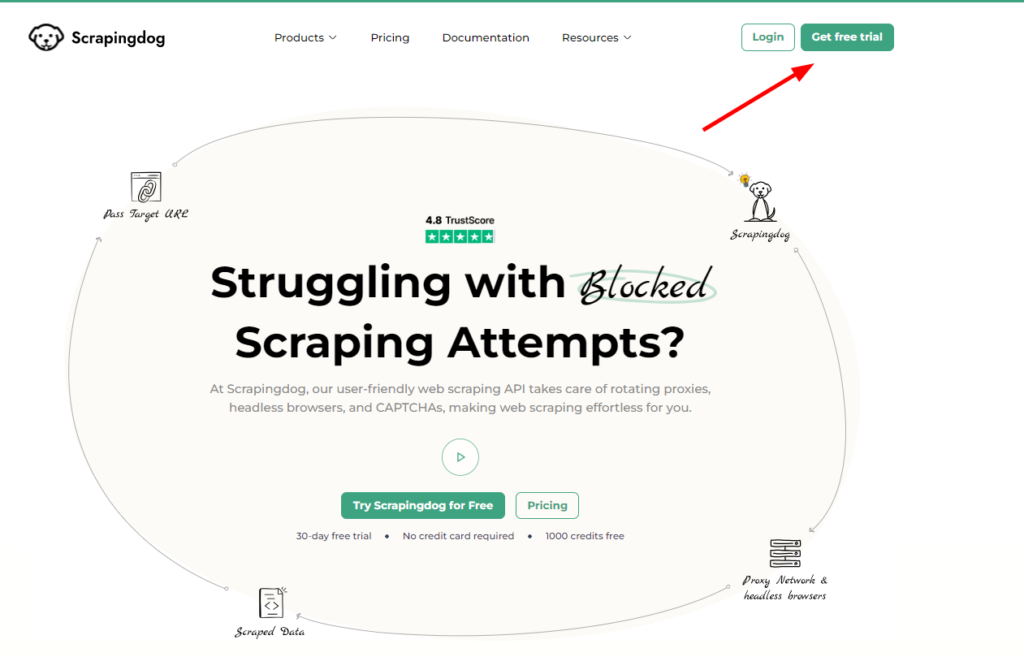

The final step before moving to the coding part is to sign up for a web scraping API. In this tutorial, we are going to use Scrapingdog.

Now, we should create a Python file where we can write the scraping code. I am naming the file as zoopla.py.

Let's Start Scraping Zoopla with Python

Before we start coding the scraper, take a moment to read Scrapingdog’s documentation; it’ll give you a clear idea of how we can use the API to scrape Zoopla at scale.

Once you have read the documentation, decide in advance what information you want to extract from Zoopla.

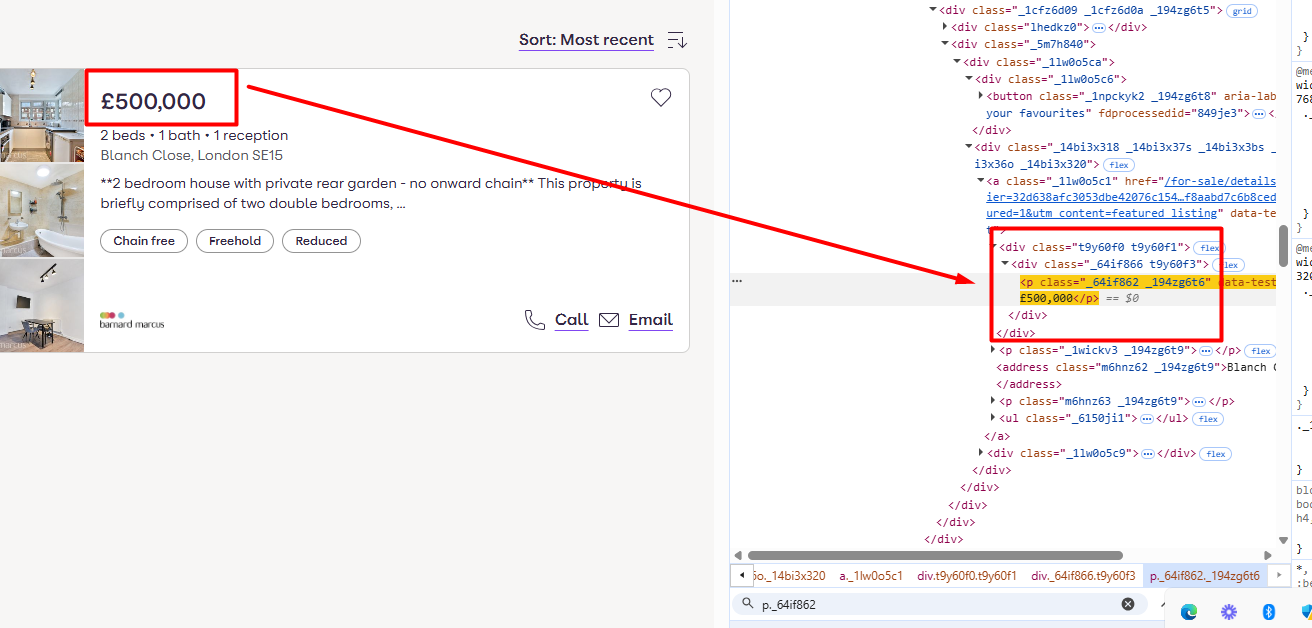

We are going to scrape the price, address, and description of the property. Let’s find the location of each data point in the DOM.

The pricing is stored inside the p tag with the class _64if862.

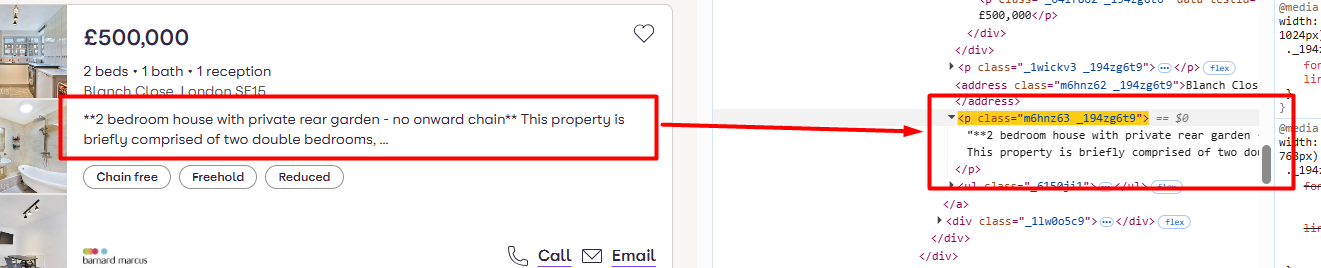

The description is stored inside the p tag with the class m6hnz63.

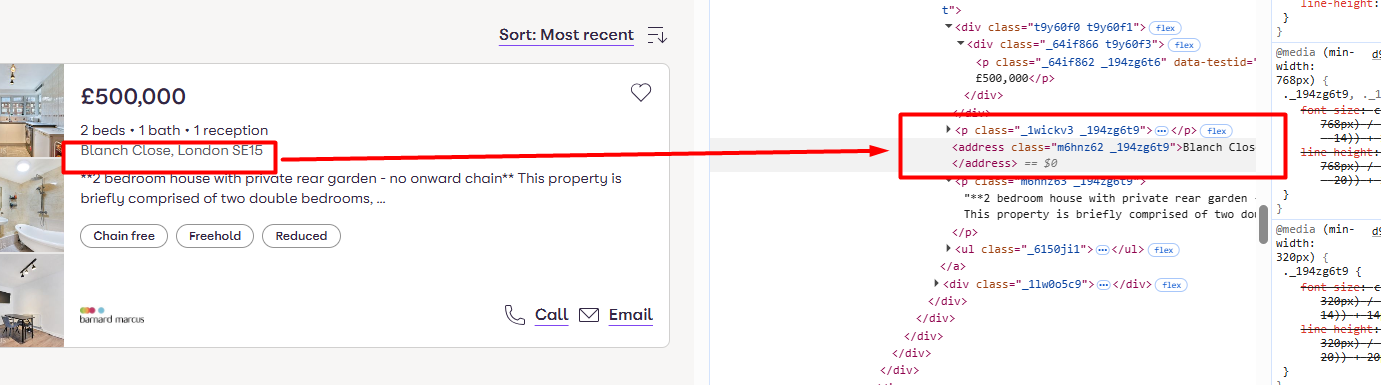

The address is located inside the address tag itself.

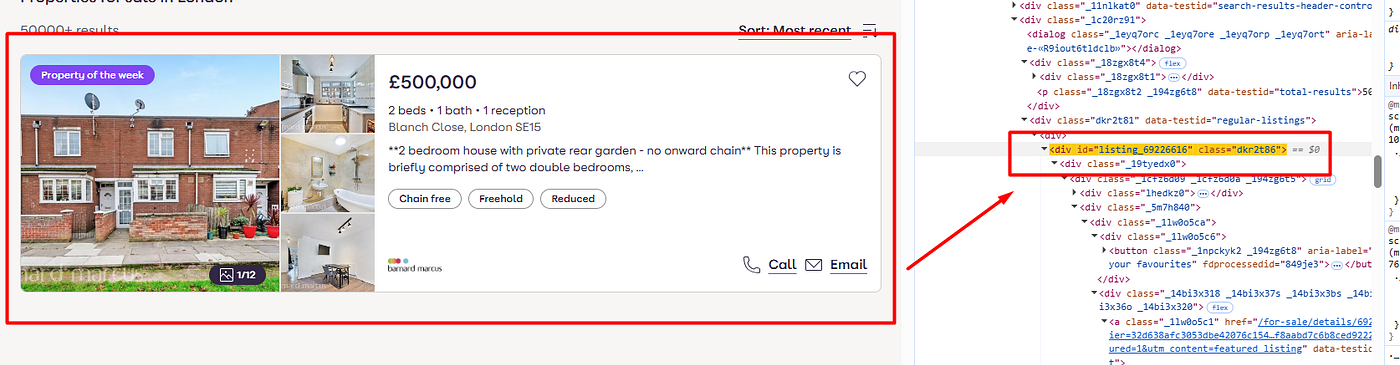

Each property is located inside a div tag with the class dkr2t86.
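Class names such as these look auto-generated, so they may change whenever the site is redeployed (an assumption based on their form, not something Zoopla documents). One option is to keep them in a single mapping so that a change only needs one edit. The sketch below is illustrative; `SELECTORS` and `selector_for` are hypothetical names, and the values are simply the tags and classes listed above.

```python
# Hypothetical central place for the tag/class pairs identified above.
# The address class (m6hnz62) is the one used in this article's code.
SELECTORS = {
    "card": ("div", "dkr2t86"),
    "price": ("p", "_64if862"),
    "address": ("address", "m6hnz62"),
    "description": ("p", "m6hnz63"),
}


def selector_for(field):
    """Return (tag, attrs) in the shape BeautifulSoup's find() accepts."""
    tag, css_class = SELECTORS[field]
    return tag, {"class": css_class}
```

With this in place, a lookup could be written as `data.find(*selector_for("price"))`.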

Let’s code now.

Downloading raw HTML

    import requests
    from bs4 import BeautifulSoup
    import pandas as pd

    l = []
    obj = {}

    params = {
        'api_key': 'your-api-key',
        'url': 'https://www.zoopla.co.uk/for-sale/property/london/?q=london&search_source=home&pn=2',
        'dynamic': 'false',
    }

    response = requests.get("https://api.scrapingdog.com/scrape", params=params)

    print(response.status_code)

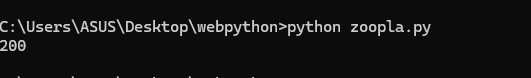

This is the basic Python code, where we make a GET request to the Scrapingdog API. Remember to use your own API key in the above code. Once you run the code, you should see a 200 status code, indicating a successful scrape.
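A request will not always come back with 200, so it can help to retry a few times before giving up. The following is a minimal sketch, not part of Scrapingdog's API: `fetch_with_retries` is a hypothetical helper, and the attempt count and delays are arbitrary assumptions. It takes any zero-argument callable that returns a `(status_code, body)` pair, which keeps the retry logic independent of the HTTP library.

```python
import time


def fetch_with_retries(fetch, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch() until it returns a 200 status or attempts run out.

    fetch is any zero-argument callable returning (status_code, body).
    Returns the body on success, or None if every attempt failed.
    """
    for attempt in range(1, max_attempts + 1):
        status_code, body = fetch()
        if status_code == 200:
            return body
        if attempt < max_attempts:
            # Exponential backoff: base_delay, 2 * base_delay, 4 * base_delay, ...
            sleep(base_delay * 2 ** (attempt - 1))
    return None
```

For the request above, `fetch` could be a small function that calls `requests.get(...)` with the same `params` and returns `(response.status_code, response.text)`.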

Parsing the data with BeautifulSoup

    import requests
    from bs4 import BeautifulSoup
    import pandas as pd

    l = []    # one dictionary per property
    obj = {}  # the record currently being built

    params = {
        'api_key': 'your-api-key',
        'url': 'https://www.zoopla.co.uk/for-sale/property/london/?q=london&search_source=home&pn=2',
        'dynamic': 'false',
    }

    response = requests.get("https://api.scrapingdog.com/scrape", params=params)
    soup = BeautifulSoup(response.text, 'html.parser')

    # Every property card sits inside a div with this class
    allData = soup.find_all("div", {"class": "dkr2t86"})

    for data in allData:
        # find() returns None when a tag is missing, so .text raises AttributeError
        try:
            obj["price"] = data.find("p", {"class": "_64if862"}).text
        except AttributeError:
            obj["price"] = None

        try:
            obj["address"] = data.find("address", {"class": "m6hnz62"}).text
        except AttributeError:
            obj["address"] = None

        try:
            obj["description"] = data.find("p", {"class": "m6hnz63"}).text
        except AttributeError:
            obj["description"] = None

        l.append(obj)
        obj = {}  # start a fresh record for the next property

    print(l)

The code is pretty simple, but let me explain to you the logic behind it.

- Finds all `<div>` elements with class `"dkr2t86"` and stores them in `allData`.

- Loops through each element in `allData`.

- Tries to extract the price from a `<p>` tag with class `"_64if862"`. Sets to `None` if not found.

- Tries to extract the address from an `<address>` tag with class `"m6hnz62"`. Sets to `None` if not found.

- Tries to extract the description from a `<p>` tag with class `"m6hnz63"`. Sets to `None` if not found.

- Stores all extracted data in a dictionary called `obj`.

- Appends `obj` to the list `l`.

- Resets `obj` to an empty dictionary for the next loop.

- Prints the final list `l` containing all extracted records.

Once you run this code, it prints a list of dictionaries, one per property, each with the keys price, address, and description.
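The scraped price is display text (values such as £425,000 appear later in this article), which is awkward for sorting or averaging. Here is a minimal sketch of converting it to an integer. `parse_price` is a hypothetical helper, and it assumes whole-pound amounts with comma thousands separators; abbreviated forms such as "£1.2m" are not handled.

```python
import re


def parse_price(text):
    """Return the first pound amount in text as an int, or None if there is none."""
    if not text:
        return None
    # A pound sign, optional whitespace, then digits with optional commas
    match = re.search(r"£\s*(\d[\d,]*)", text)
    if match is None:
        return None
    return int(match.group(1).replace(",", ""))
```

It could be applied while building each record, for example `obj["price_gbp"] = parse_price(obj["price"])`, where `price_gbp` is an illustrative extra column.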

Handling Pagination

When you click on page 2 in the pagination bar at the bottom of the results, the URL becomes https://www.zoopla.co.uk/for-sale/property/london/?q=london&search_source=home&pn=2. As you can see, a query parameter named pn appears in the URL, and it can be used to change the page number.

We have to run a for loop that can automatically change the page number within the URL and make a separate API call on every iteration.

    import requests
    from bs4 import BeautifulSoup
    import pandas as pd

    l = []
    obj = {}

    # Pages 1 to 10; each iteration makes a separate API call
    for i in range(1, 11):
        params = {
            'api_key': 'your-api-key',
            'url': 'https://www.zoopla.co.uk/for-sale/property/london/?q=london&search_source=home&pn={}'.format(i),
            'dynamic': 'false',
        }

        response = requests.get("https://api.scrapingdog.com/scrape", params=params)
        soup = BeautifulSoup(response.text, 'html.parser')

        allData = soup.find_all("div", {"class": "dkr2t86"})

        for data in allData:
            try:
                obj["price"] = data.find("p", {"class": "_64if862"}).text
            except AttributeError:
                obj["price"] = None

            try:
                obj["address"] = data.find("address", {"class": "m6hnz62"}).text
            except AttributeError:
                obj["address"] = None

            try:
                obj["description"] = data.find("p", {"class": "m6hnz63"}).text
            except AttributeError:
                obj["description"] = None

            l.append(obj)
            obj = {}

    # Print once, after all pages have been collected
    print(l)

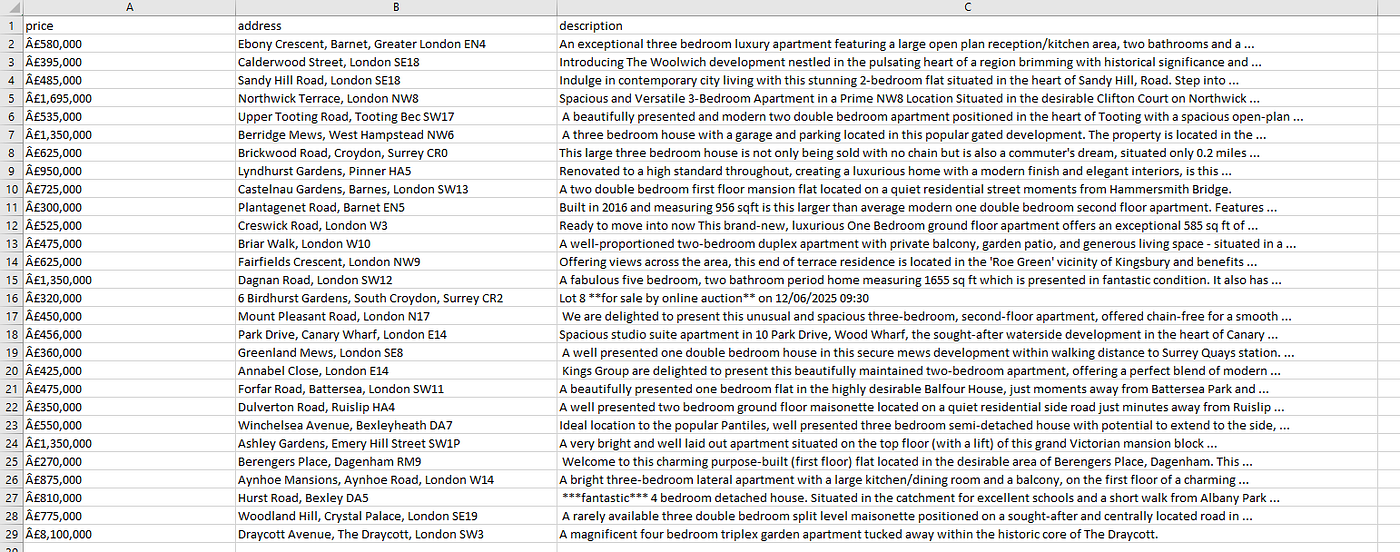
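The loop above always requests ten pages back to back. A variation, sketched below under stated assumptions, builds each page URL from its query parameters, pauses between calls, and stops early when a page yields no rows. `page_url` and `scrape_pages` are hypothetical helpers, the one-second delay is arbitrary, and `fetch_page` stands in for whatever function downloads and parses a single page into a list of dictionaries.

```python
import time
from urllib.parse import urlencode

BASE_URL = "https://www.zoopla.co.uk/for-sale/property/london/"


def page_url(page):
    """Build the search URL for a given page number via the pn parameter."""
    query = urlencode({"q": "london", "search_source": "home", "pn": page})
    return f"{BASE_URL}?{query}"


def scrape_pages(fetch_page, max_pages=10, delay=1.0, sleep=time.sleep):
    """Collect rows page by page, stopping at the first page with no rows.

    fetch_page(url) must return a list of record dictionaries.
    """
    rows = []
    for page in range(1, max_pages + 1):
        page_rows = fetch_page(page_url(page))
        if not page_rows:
            break  # nothing parsed, so assume the results have run out
        rows.extend(page_rows)
        sleep(delay)  # brief pause between API calls
    return rows
```

Stopping on an empty page is a heuristic: an empty result can also mean a blocked request or a changed class name, so it is worth checking the status code as well.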

Saving data to CSV

Using the pandas library, we can save this data to a CSV file. Let’s see how it can be done.

    df = pd.DataFrame(l)
    df.to_csv('zoopla.csv', index=False, encoding='utf-8')

- Creates a DataFrame `df` from the list `l`.

- Saves the DataFrame to a CSV file named `zoopla.csv`.

- Disables the index column in the CSV using `index=False`.

Once you run it, you will find a CSV file by the name zoopla.csv.
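If you would rather not depend on pandas for this one step, Python's built-in `csv` module can produce an equivalent file. This is a sketch of an alternative, not the approach used in this article; `rows_to_csv` and `FIELDS` are hypothetical names, and the column order is an assumption.

```python
import csv
import io

FIELDS = ["price", "address", "description"]


def rows_to_csv(rows):
    """Serialize a list of record dictionaries to CSV text with a header row."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)  # None values are written as empty cells
    return buffer.getvalue()
```

The text can then be saved with `open('zoopla.csv', 'w', newline='', encoding='utf-8')` and a single `write()` call.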

Complete Code

You can, of course, scrape other details as well, but for now the code looks like this. This version fetches a single results page; wrap the request in the for loop from the pagination section to collect more.

    import requests
    from bs4 import BeautifulSoup
    import pandas as pd

    l = []
    obj = {}

    params = {
        'api_key': 'your-api-key',
        'url': 'https://www.zoopla.co.uk/for-sale/property/london/?q=london&search_source=home',
        'dynamic': 'false',
    }

    response = requests.get("https://api.scrapingdog.com/scrape", params=params)
    soup = BeautifulSoup(response.text, 'html.parser')

    allData = soup.find_all("div", {"class": "dkr2t86"})

    for data in allData:
        try:
            obj["price"] = data.find("p", {"class": "_64if862"}).text
        except AttributeError:
            obj["price"] = None

        try:
            obj["address"] = data.find("address", {"class": "m6hnz62"}).text
        except AttributeError:
            obj["address"] = None

        try:
            obj["description"] = data.find("p", {"class": "m6hnz63"}).text
        except AttributeError:
            obj["description"] = None

        l.append(obj)
        obj = {}

    # Write all collected records to zoopla.csv without the index column
    df = pd.DataFrame(l)
    df.to_csv('zoopla.csv', index=False, encoding='utf-8')

Get Structured Data without Parsing using Scrapingdog AI Scraper

If you want structured data without writing parsing logic or using BeautifulSoup, you can use AI parsing instead. Just submit a simple query, and the data gets parsed for you, no custom code required. You can read more about this parameter over here.

    import requests

    response = requests.get("https://api.scrapingdog.com/scrape", params={
        'api_key': 'your-api-key',
        'url': 'https://www.zoopla.co.uk/for-sale/property/london/?q=london&search_source=home&pn=1',
        'dynamic': 'false',
        'ai_query': 'Give me the price of each property in json format',
        'ai_extract_rules': '{"price":"$xyz"}'
    })

    print(response.text)

This will generate a JSON response that looks like this.

{"price":"£425,000"},{"price":"£800,000"},{"price":"£575,000"},{"price":"£650,000"},{"price":"£1,100,000"},{"price":"£3,600,000"},{"price":"£450,000"},{"price":"£625,000"},{"price":"£1,350,000"},{"price":"£475,000"},{"price":"£950,000"},{"price":"£360,000"},{"price":"£685,000"},{"price":"£435,000"},{"price":"£1,695,000"},{"price":"£320,000"},{"price":"£450,000"},{"price":"£6
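The sample above is a run of comma-separated objects rather than a single JSON array (and it is cut off here), so passing the raw text straight to `json.loads` may fail. The following is a minimal sketch for turning such a body into a Python list. It assumes the response is either valid JSON or a comma-separated run of objects; the exact envelope Scrapingdog returns is not confirmed by this article, and `parse_ai_response` is a hypothetical helper.

```python
import json


def parse_ai_response(text):
    """Return the AI output as a list of dictionaries."""
    text = text.strip()
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        # Comma-separated objects become valid JSON once wrapped in brackets
        data = json.loads("[" + text + "]")
    # Normalize a single object to a one-item list
    return data if isinstance(data, list) else [data]
```

A complete response could then go straight into pandas with `pd.DataFrame(parse_ai_response(response.text))`. A truncated body still raises `json.JSONDecodeError`, which is a reasonable signal to retry the request.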

Advantages of this approach

- No code required — Easily extract structured data without writing any parsing logic.

- Faster implementation — Skip HTML structure analysis and get results instantly.

- Handles messy HTML — AI can intelligently extract data even from inconsistent or nested layouts.

- Reduces maintenance — No need to update scrapers when websites change minor HTML tags or classes.

- Ideal for non-devs — Makes web scraping accessible to users without programming experience.

- Cleaner output — Directly get structured JSON or CSV-ready data.

Key takeaways:

- Zoopla listings can be scraped to extract structured data like property prices, locations, descriptions, and key features for analysis.

- A Python setup using requests and parsing libraries allows you to collect and organize real-estate data efficiently.

- Search pages and pagination need to be handled properly to gather data across multiple result pages.

- Zoopla has anti-scraping protections, so headers, rate control, and smart request handling are important.

- Structured property data can be used for market research, price tracking, and real-estate intelligence.

Conclusion

Scraping property listings from Zoopla with Python and Scrapingdog opens up a world of possibilities for real estate analysis, price tracking, and market research. With libraries like requests and BeautifulSoup, or even automation tools like Puppeteer, you can extract valuable data at scale. Whether you’re building a real estate dashboard or feeding data into an ML model, Python makes the process efficient and flexible.