Google has removed the num=100 parameter. What does that mean for the industry that depends on it?

Rank tracking tools used this parameter to scrape all 100 results in one go. When Google removed it, the industry was caught off guard, and many businesses were shaken by the move.

According to Search Engine Land, 77% of sites lost visibility, which is a huge number considering Google is still the number one source of traffic for many brands.

It definitely affected the tools. Even giants of this domain, such as Ahrefs and SEMrush, panicked when this unofficial update from Google arrived.

What’s next? How do rank trackers adapt?

Before the fixes, a quick note on intent: why Google made this move and what it signals for SEO.

Was Google going after the billion-dollar SEO tools industry?

The answer is “NO”. Google wanted to stop AI bots, including its big rival ChatGPT, from crawling its results.

The change wasn’t aimed at rank trackers; they were collateral damage.

How Rank Trackers Are Going To Deal With This Change

Scraping rests on a simple principle: if the browser has all 100 results on one page, a tool can scrape them in one request.

With the num=100 parameter removed, each results page now shows only 10 results.

To get 100 results, you now need to scrape pages 1 through 10, one at a time.

This means the number of API calls increases tenfold, and so does the cost of your rank tracking tool.

We at Scrapingdog had the same issue wherein our rank tracking tool stopped working. Since a significant portion of our traffic comes from Google organic searches, we needed to find a solution.

Luckily, the main ingredient to build this recipe was a product that we already sell to our customers. Yes, I am referring to the Google Search API.

So, we thought, why not build a basic rank tracking tool for ourselves using some no-code tool, and this API?

Building A Rank Tracker using n8n & Google SERP API

You need access to n8n and to the Google SERP API from Scrapingdog, which scrapes the search engine. I will also link to the blueprint at the end of this tutorial, with instructions for personalizing it.

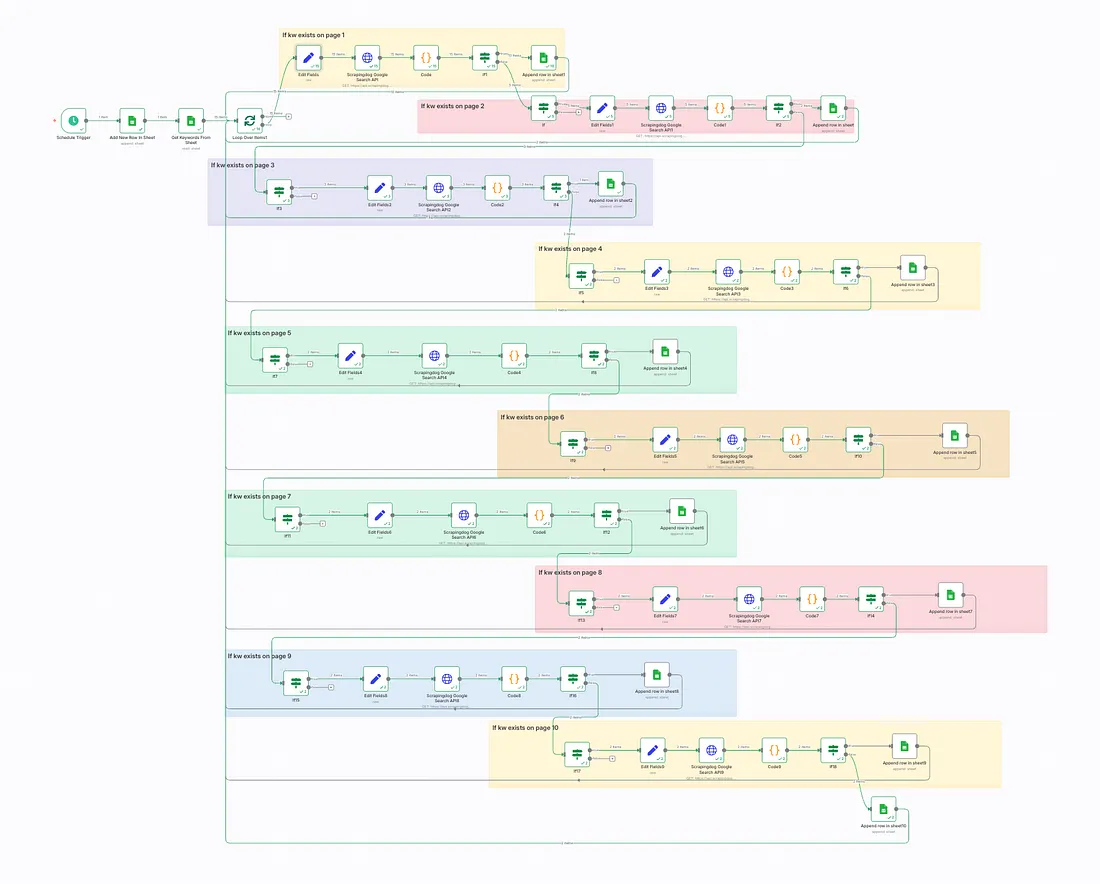

The idea was to create a basic rank tracker that is optimized for cost as well as efficiency. We do not scrape all 10 pages upfront: if the keyword is found on page 1, the workflow stops there and moves on to the next keyword.

This way, we save on API cost, because we don’t have to pay for all 10 pages of results.
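To make the saving concrete, here is a minimal sketch of the request arithmetic. It assumes one API request per results page of 10, and the rank positions are made-up example values; `requestsNeeded` is a hypothetical helper and not part of the n8n workflow itself.

```javascript
// Hypothetical helper: how many page requests are needed to locate a
// keyword when we stop at the first page that contains our domain.
// rank is the 1-based overall position, or null if not in the top 100.
function requestsNeeded(rank, maxPages = 10, perPage = 10) {
  if (rank === null || rank > maxPages * perPage) return maxPages; // every page was scanned
  return Math.ceil(rank / perPage); // the page on which the hit sits
}

// Made-up example ranks for five keywords (null = not in the top 100).
const exampleRanks = [3, 8, 14, 47, null];

const earlyStop = exampleRanks.reduce((sum, r) => sum + requestsNeeded(r), 0);
const fullScan = exampleRanks.length * 10;

console.log(`early stop: ${earlyStop} requests, full scan: ${fullScan} requests`);
```

The better your keywords rank, the bigger the gap: a keyword on page 1 costs a single request instead of ten.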

I made a video tutorial on this topic too.

If you prefer to read, follow along.

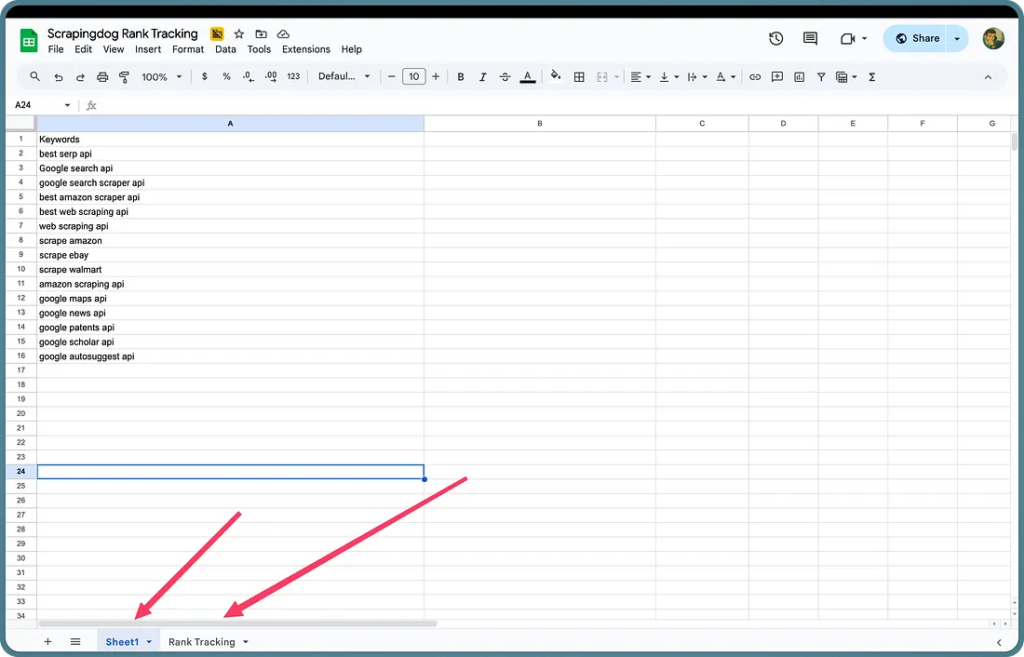

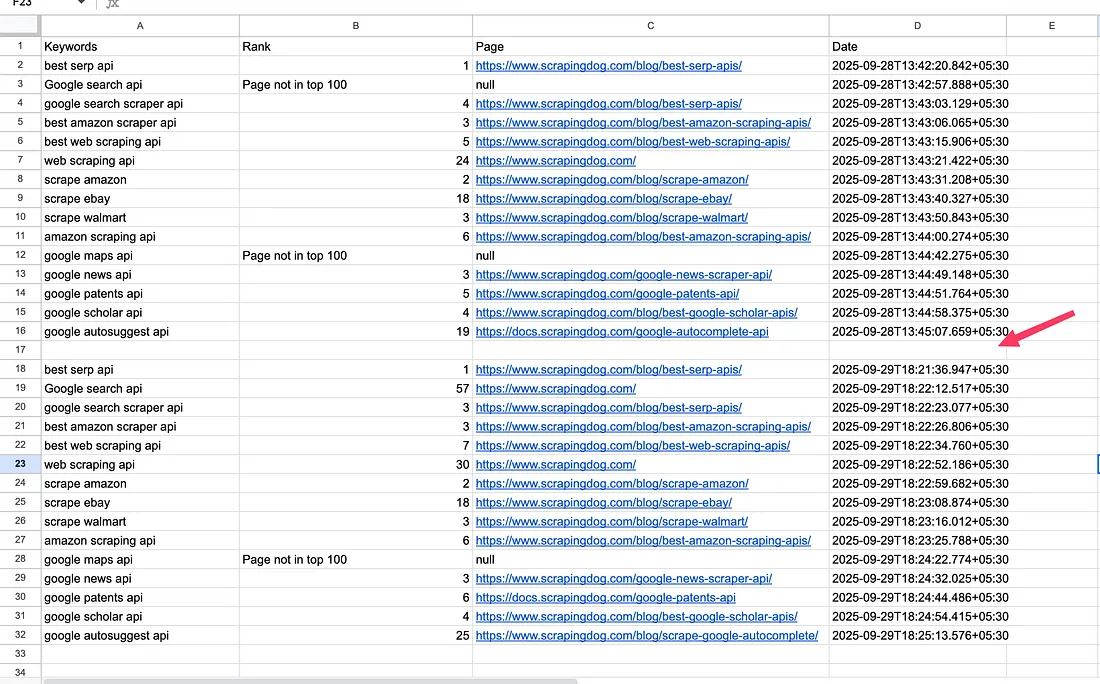

Here is the Google Sheet, where we keep the keywords in tab-1 and record the results in tab-2.

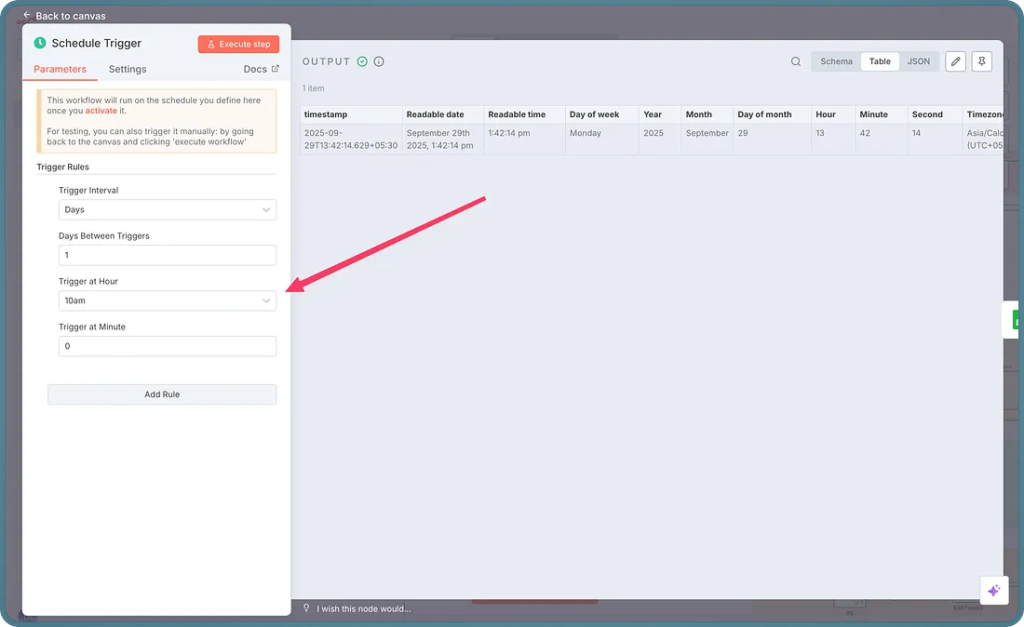

The whole workflow starts with a trigger, which is scheduled for every day.

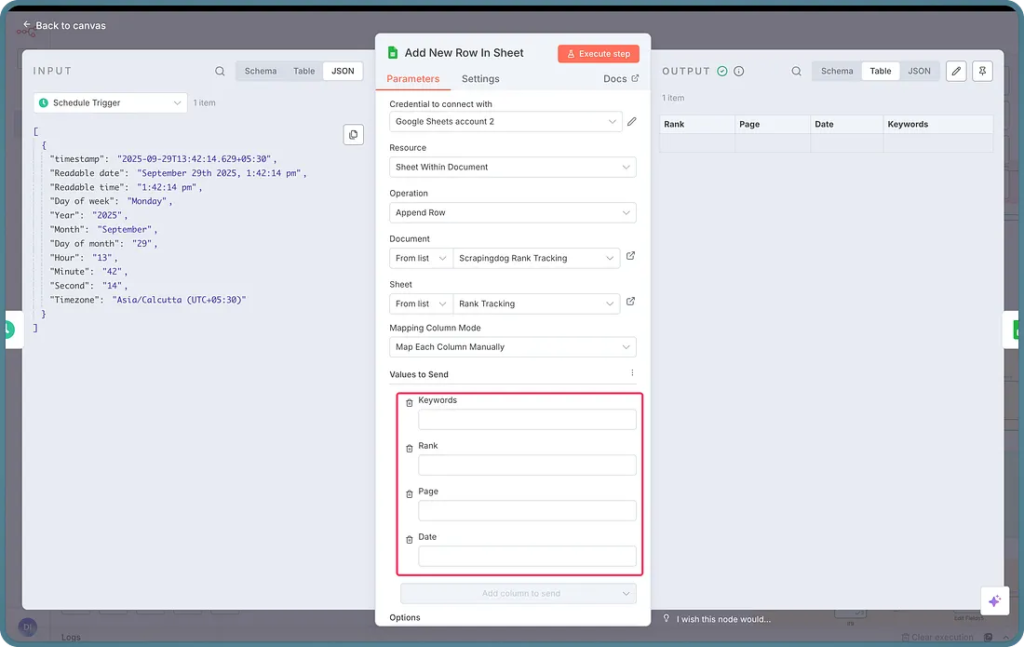

The next node in n8n adds a blank row so that we can tell the tracking days apart. Here is the configuration of that node.

This will add a blank row like this below ⬇️

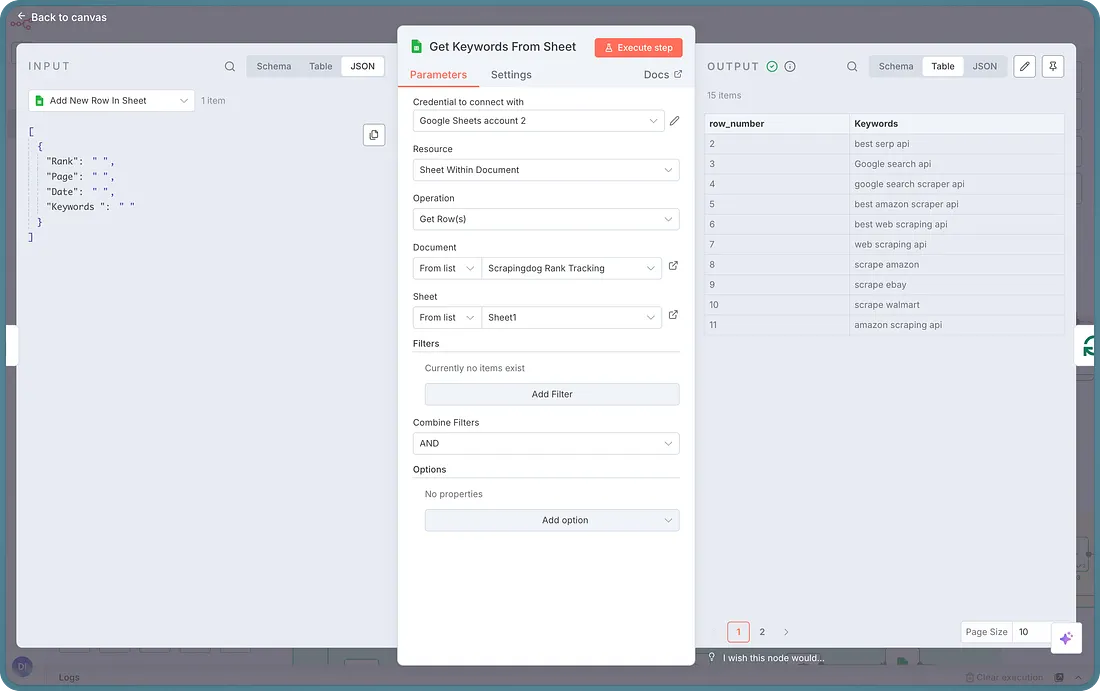

The next node in our workflow fetches all the keywords from tab-1 that we want to track.

As you can see on the right side, the node is fetching all the keywords in the output.

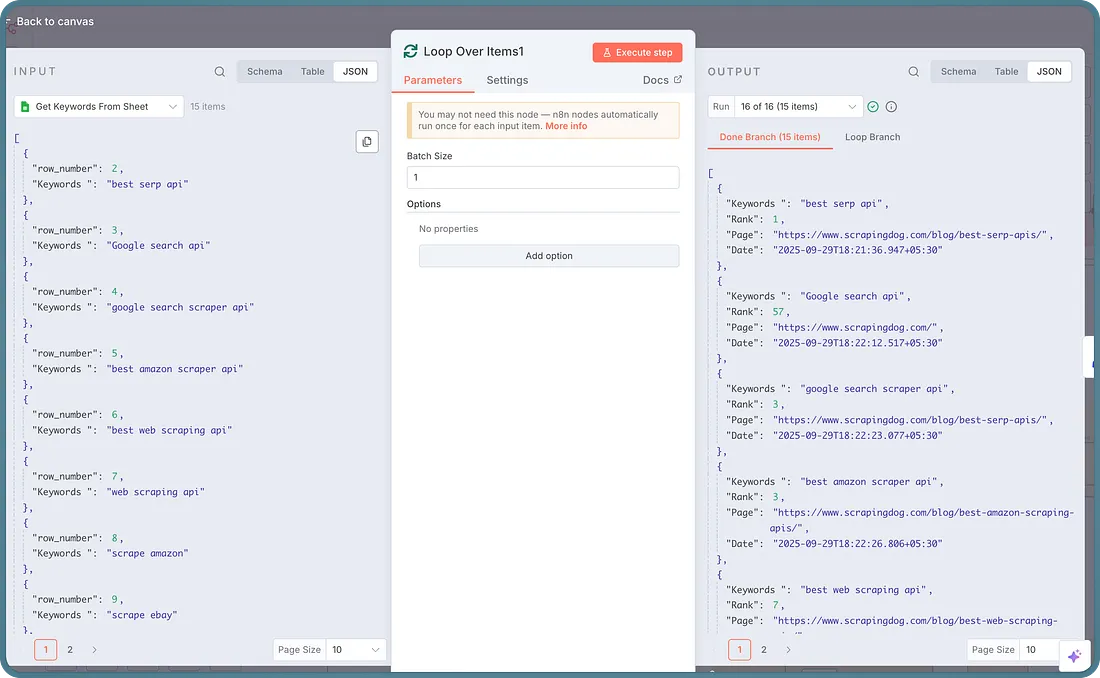

Now, logically, we want to get the data for each keyword one by one, so we loop through the keywords.

The next node is the Loop node, and here is the configuration ⬇️

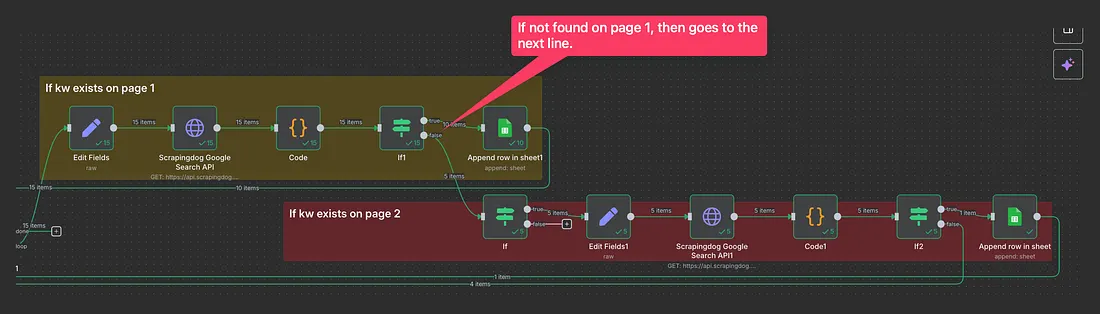

Essentially, the workflow takes one keyword, checks whether our domain ranks for it on page 1, and if not, moves on to page 2.

Therefore, a loop here becomes an essential component of our workflow.
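If it helps to see the same control flow outside n8n, here is a rough sketch in plain JavaScript. It is not the workflow itself: `trackKeyword` and `fakeFetch` are hypothetical names, the fetch is a synchronous stub standing in for the HTTP call, and the rank calculation assumes 10 results per page.

```javascript
// Sketch of the per-keyword loop. fetchPage is a caller-supplied stand-in
// for the HTTP Request node: it returns an array of result URLs for
// (keyword, page), where page is 0-based.
function trackKeyword(keyword, domain, maxPage, fetchPage) {
  for (let page = 0; page <= maxPage; page++) {
    const urls = fetchPage(keyword, page);
    const index = urls.findIndex((u) => {
      const host = new URL(u).hostname.replace(/^www\./, "");
      return host === domain || host.endsWith("." + domain);
    });
    if (index !== -1) {
      // Stop as soon as the domain appears; later pages are never requested.
      return { keyword, found: true, page, overallRank: page * 10 + index + 1 };
    }
  }
  return { keyword, found: false, page: null, overallRank: null };
}

// Stubbed data: the domain is the 2nd result on the second page (page index 1).
const calls = [];
const fakeFetch = (kw, page) => {
  calls.push(page);
  return page === 1
    ? ["https://a.example/x", "https://www.scrapingdog.com/blog/"]
    : ["https://a.example/x", "https://b.example/y"];
};

const result = trackKeyword("web scraping api", "scrapingdog.com", 9, fakeFetch);
console.log(result, "pages requested:", calls.length);
```

In the n8n version, the `for` loop is replaced by the Loop node for keywords plus an IF node that routes back to the HTTP request for the next page.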

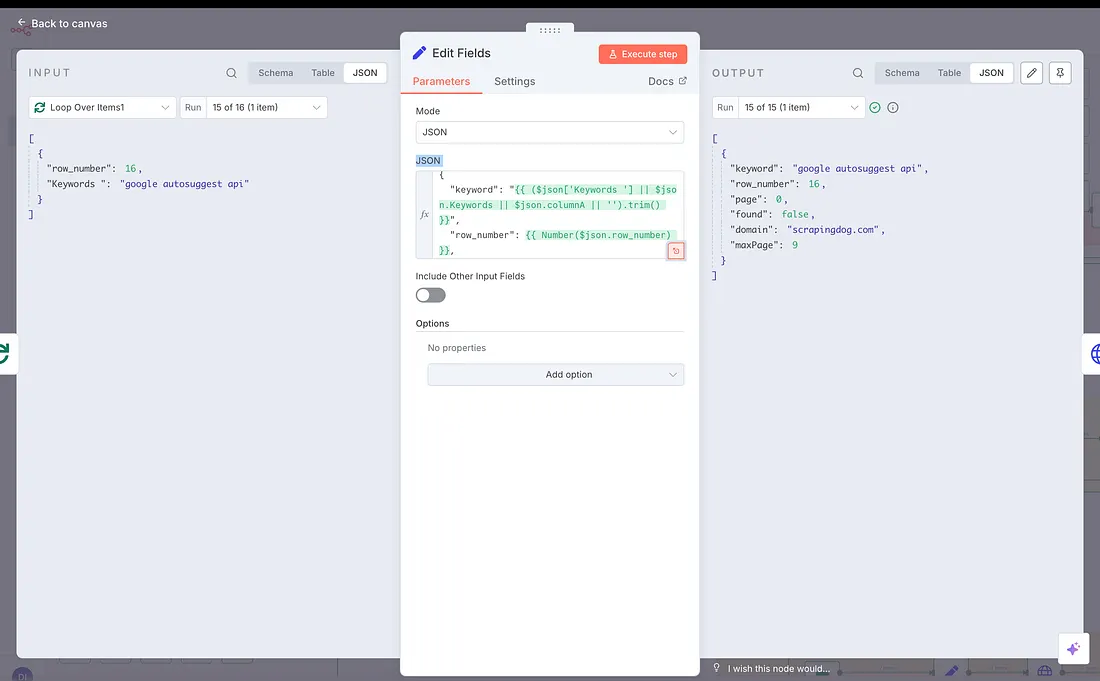

The next node is “Edit Fields”, where we set up the fields that the rest of the workflow relies on.

We set the following: the keyword (kw) and its row number; page=0 by default, to get the data from the first page; found=false by default, which we later use to record whether the keyword was found on a page; the domain we are tracking; and max page, the index of the last page to check, i.e. 9 for the 10th page (the first page has index 0).

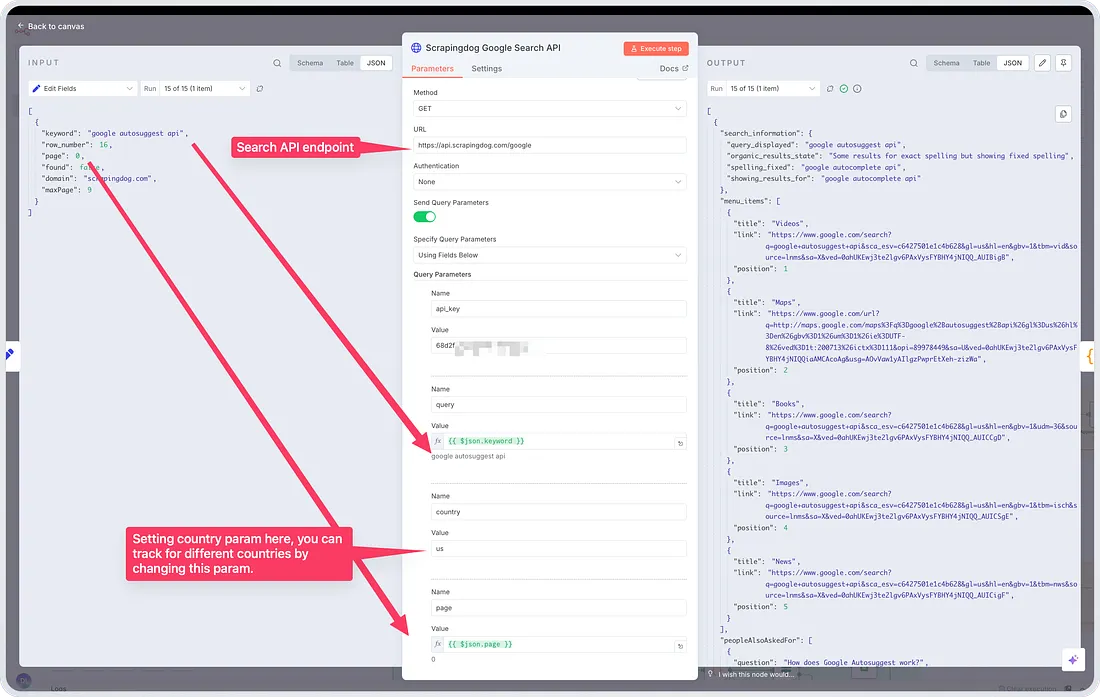
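Written out as code, the starting state for one keyword looks roughly like this. The field names follow the ones used later in the Code node; the shape of the input row (`keyword`, `row_number`) and the helper name `initialState` are assumptions for illustration.

```javascript
// Hypothetical equivalent of the "Edit Fields" node: build the starting
// state for one keyword row read from the sheet.
function initialState(row, domain) {
  return {
    keyword: String(row.keyword || "").trim(),
    row_number: Number(row.row_number),
    page: 0,       // 0-based: 0 is the first results page
    found: false,  // set to true by the Code node on a match
    domain: domain.toLowerCase(),
    maxPage: 9,    // 0-based index of the 10th page
  };
}

const state = initialState({ keyword: " serp api ", row_number: 2 }, "Scrapingdog.com");
console.log(state);
```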

We then pass some of this data to our next node, which is an HTTP Request node.

Here is the configuration of the HTTP request ⬇️

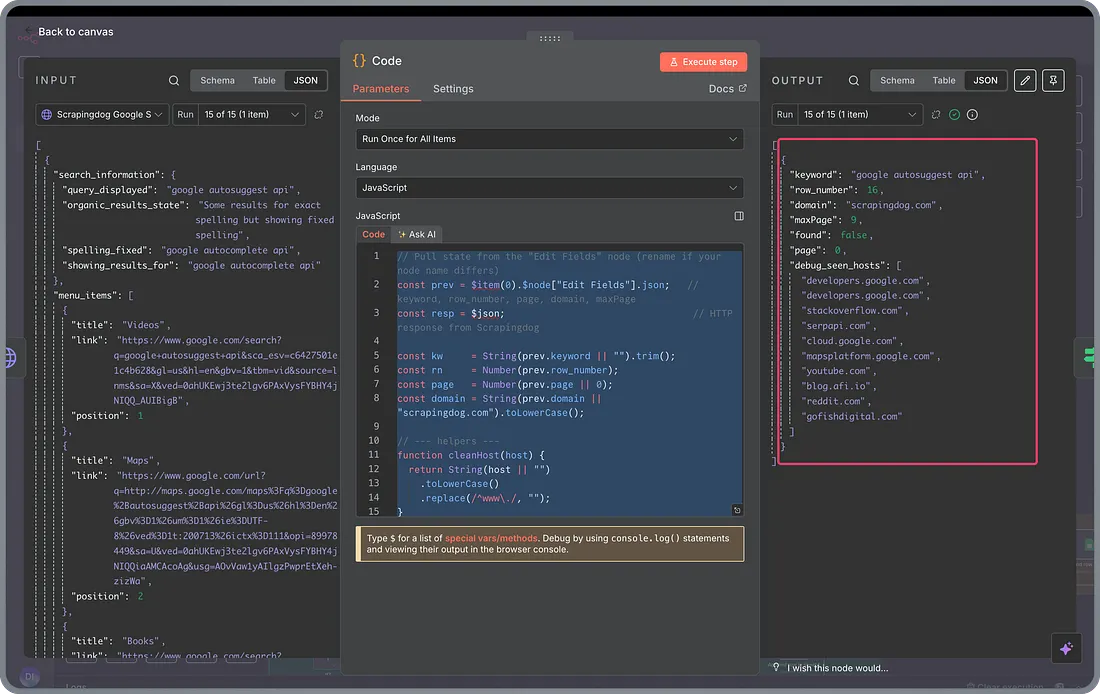
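For readers who want to reproduce the request outside n8n, the sketch below shows one way to build the URL for a given page. Treat everything specific in it as an assumption: the base URL is a placeholder, and the parameter names (`api_key`, `query`, `page`) are illustrative, so check them against the node configuration above and your API provider's documentation.

```javascript
// Build a request URL for one results page. The base URL and the
// parameter names (api_key, query, page) are assumptions for illustration;
// use the values from your provider's documentation.
function buildSearchUrl(baseUrl, apiKey, keyword, page) {
  const url = new URL(baseUrl);
  url.search = new URLSearchParams({
    api_key: apiKey,
    query: keyword,
    page: String(page), // 0-based page index, matching the workflow state
  }).toString();
  return url.toString();
}

const exampleUrl = buildSearchUrl("https://api.example.com/search", "YOUR_API_KEY", "rank tracker", 0);
console.log(exampleUrl);
```

Using `URLSearchParams` keeps keywords with spaces or special characters safely encoded, which matters once you loop over a whole keyword list.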

Now, after scraping the first page of results, we need to find out whether any link from our domain appears on it. For that, we use some JavaScript in the “Code” node.

Here is the JavaScript code that I have used:

```javascript
// Pull state from the "Edit Fields" node (rename if your node name differs)
const prev = $item(0).$node["Edit Fields"].json; // keyword, row_number, page, domain, maxPage
const resp = $json; // HTTP response from Scrapingdog

const kw = String(prev.keyword || "").trim();
const rn = Number(prev.row_number);
const page = Number(prev.page || 0);
const domain = String(prev.domain || "scrapingdog.com").toLowerCase();

// --- helpers ---
function cleanHost(host) {
  return String(host || "")
    .toLowerCase()
    .replace(/^www\./, "");
}

function hostFromDisplayedLink(s) {
  // displayed_link can be like "https://www.scrapingdog.com › blog › post"
  // Try to extract the host safely.
  if (!s) return "";
  try {
    // If it looks like a real URL, parse it.
    if (/^https?:\/\//i.test(s)) return new URL(s).hostname;
  } catch {}
  // Fallback: take first token up to slash/space/»/›
  const m = String(s).match(/[a-z0-9.-]+\.[a-z.]+/i);
  return m ? m[0] : "";
}

function isMatch(url, displayedLink, wanted) {
  // Prefer real URL host if present
  try {
    const host = cleanHost(new URL(url).hostname);
    if (host === wanted || host.endsWith("." + wanted)) return true;
  } catch {}
  // Fallback to displayed_link host
  const dispHost = cleanHost(hostFromDisplayedLink(displayedLink));
  if (dispHost && (dispHost === wanted || dispHost.endsWith("." + wanted))) return true;
  return false;
}

function normalizePerRank(o, index) {
  // Prefer explicit rank/position if present; convert 0-based to 1-based; else use index+1
  const raw = Number(o.rank ?? o.position ?? NaN);
  if (Number.isFinite(raw)) return raw >= 1 ? raw : raw + 1;
  return index + 1;
}

// --- pick organic results (Scrapingdog shape variants) ---
const organic =
  resp.organic_results ||
  resp.results ||
  resp.data?.organic_results ||
  resp.data?.results ||
  [];

let hit = null;
let perRank = null;

// scan results
for (let i = 0; i < organic.length; i++) {
  const o = organic[i];
  const url = o.link || o.url || "";
  const displayed = o.displayed_link || o.displayed_url || "";
  if (isMatch(url, displayed, domain)) {
    perRank = normalizePerRank(o, i); // 1..10
    hit = o;
    break;
  }
}

if (hit) {
  const overallRank = page * 10 + perRank;
  return [{
    keyword: kw,
    row_number: rn,
    domain,
    maxPage: Number(prev.maxPage ?? 9),
    found: true,
    page,
    perRank,
    overallRank,
    url: hit.link || hit.url || "",
    title: hit.title || "",
    snippet: hit.snippet || ""
  }];
} else {
  // helpful debug: what hosts did we see on this page?
  const seenHosts = organic.slice(0, 10).map(o => {
    const url = o.link || o.url || "";
    let host = "";
    try { host = new URL(url).hostname; } catch { /* ignore */ }
    if (!host && o.displayed_link) host = hostFromDisplayedLink(o.displayed_link);
    return cleanHost(host);
  });
  return [{
    keyword: kw,
    row_number: rn,
    domain,
    maxPage: Number(prev.maxPage ?? 9),
    found: false,
    page,
    debug_seen_hosts: seenHosts
  }];
}
```

Note: You don’t need to understand programming to use this. I got this code from GPT after explaining my workflow to it.

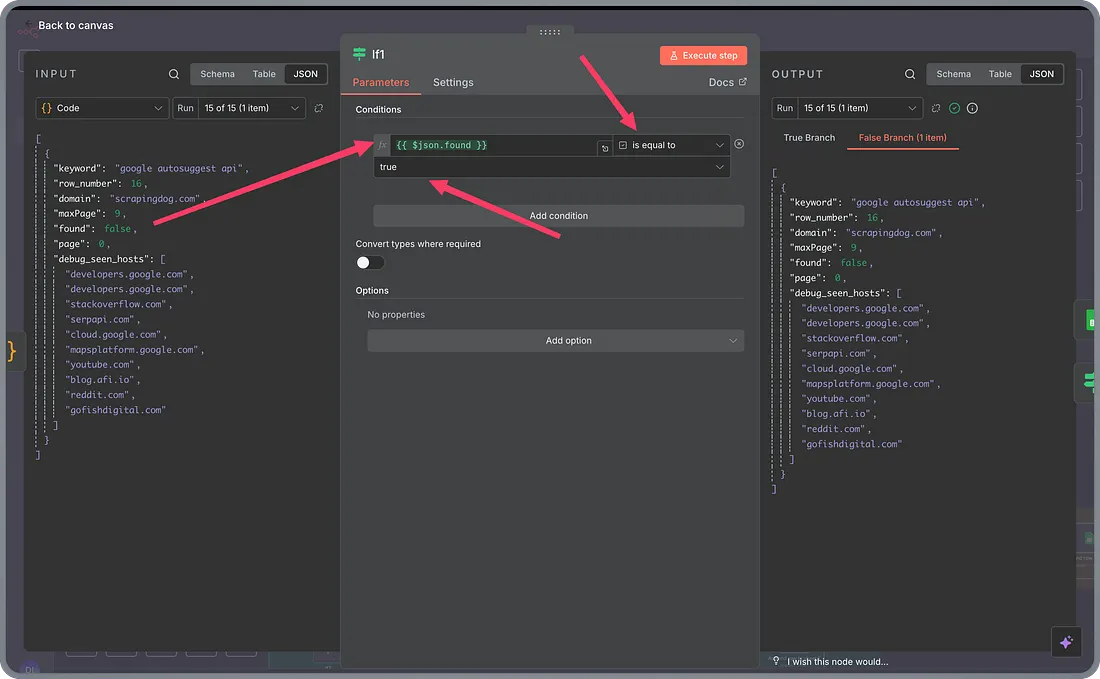

Here’s the output the node gives:

You can see the found data point. It becomes true when the link is found on a page, and the automation then loops back for the next keyword, if any.

So, in the next node, we check whether the “found” data point is true. If it is, we write the data to Google Sheets; if it is false, we get results for the next page and repeat the same process.

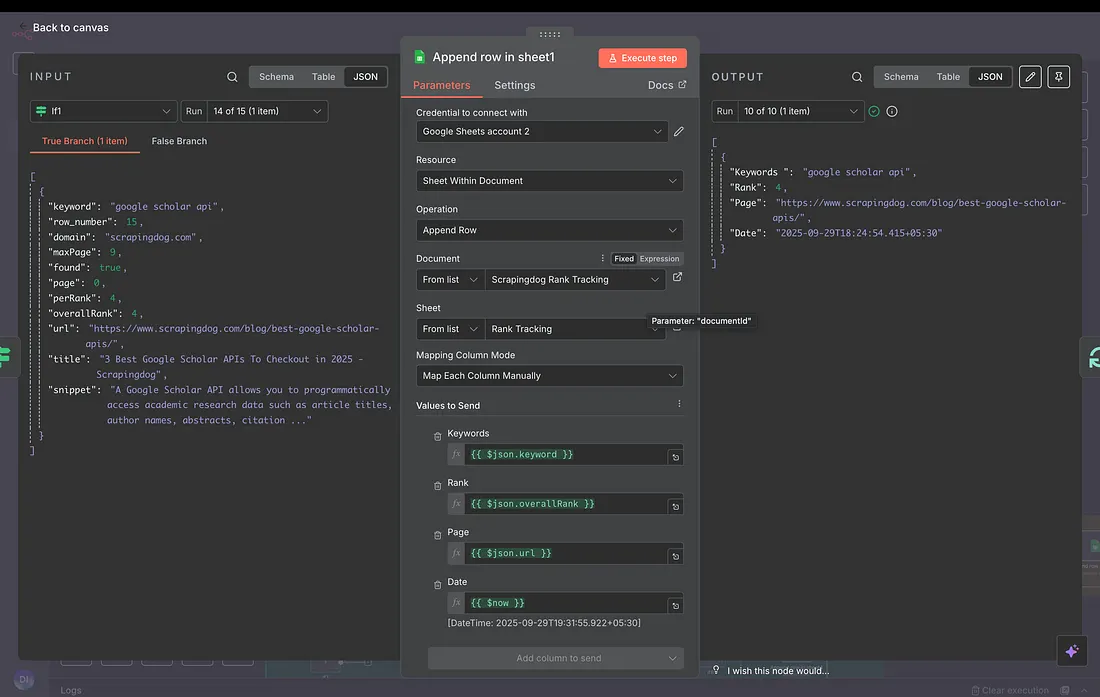

The final step is to append a row to our spreadsheet, assuming the keyword was found on page 1.

Here is the configuration for that node.

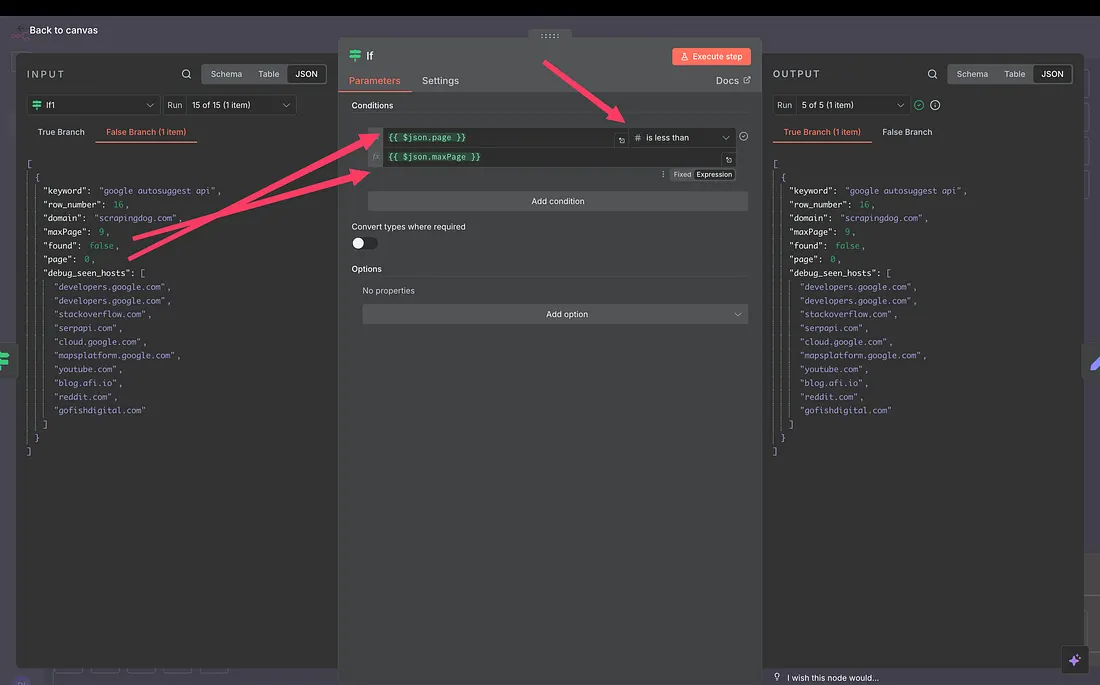

Let’s go back a little to the IF node, where we decide whether to append rows in Google Sheets. We talked about what happens when it is True.

Here’s what happens when it is False.

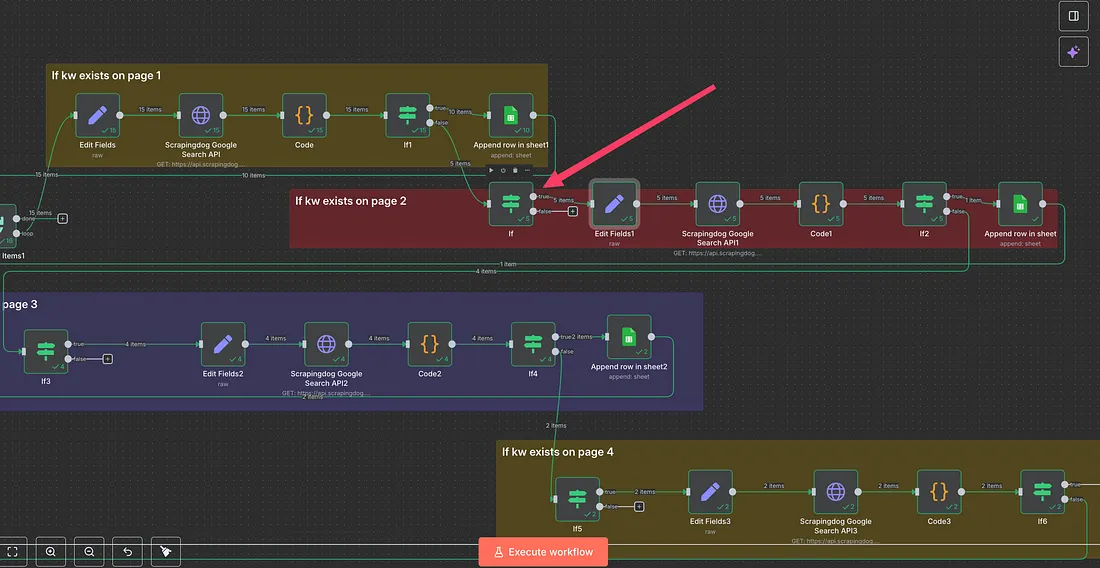

Let me explain what happens when the workflow looks for results on the next page.

The next IF node checks that the page we are currently on has not gone past the max page, i.e. 9.

In other words, we are just checking that the page we are about to request is not beyond the 10th page.

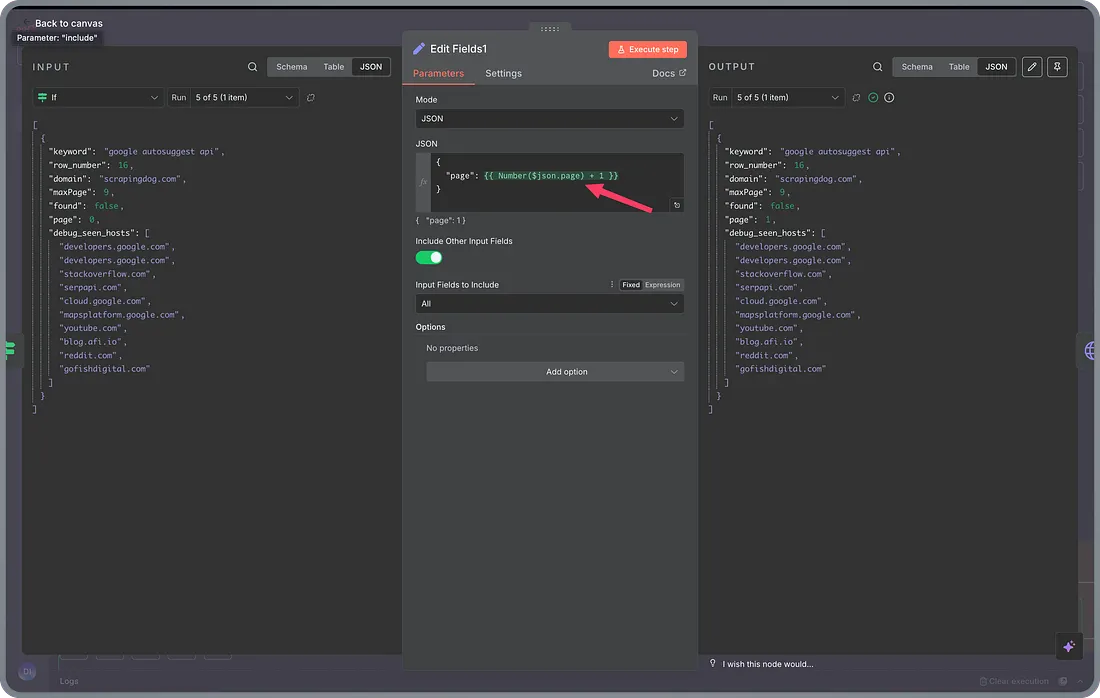

Once checked via this IF node, we increase the page number by 1 here.
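The check and the increment can be sketched as one small function. This is an illustration under an assumption: it compares with `<` so that the page index never goes past `maxPage`, whereas the exact comparison configured in the IF node may differ. `nextStep` is a hypothetical name.

```javascript
// Decide what happens after a page with no match. page and maxPage are
// 0-based, so maxPage = 9 is the 10th results page.
function nextStep(state) {
  if (state.page < state.maxPage) {
    // Still room to go deeper: request the following page.
    return { action: "fetch", state: { ...state, page: state.page + 1 } };
  }
  // Already on the last allowed page: stop and report "not in top 100".
  return { action: "giveUp", state };
}

console.log(nextStep({ keyword: "serp api", page: 0, maxPage: 9 }));
console.log(nextStep({ keyword: "serp api", page: 9, maxPage: 9 }));
```

Getting this boundary right matters for cost: an off-by-one here means paying for an 11th page on every keyword that does not rank.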

After increasing the page number, we pass it to Scrapingdog’s API and collect the next 10 results, then check whether our page is among them. If it is, we append a row to Google Sheets; if not, we call the next page.

This continues until page 10. If the result is not found there either, we append a row to Google Sheets with “Page not in top 100” in the rank column and “null” in the page column.
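As a rough sketch, the row written to the results tab could be built like this. The column names (`date`, `keyword`, `rank`, `page`, `url`) and the helper `toSheetRow` are hypothetical, so match them to the headers in your own sheet; the fallback values are the ones described above.

```javascript
// Turn a Code-node result into the row appended to the results tab.
// Column names here are hypothetical; match them to your own sheet.
function toSheetRow(result, date = new Date()) {
  return {
    date: date.toISOString().slice(0, 10), // YYYY-MM-DD
    keyword: result.keyword,
    rank: result.found ? result.overallRank : "Page not in top 100",
    page: result.found ? result.page : "null", // page index as stored in the workflow state
    url: result.found ? result.url : "",
  };
}

const day = new Date("2025-10-01T00:00:00Z");
console.log(toSheetRow({ keyword: "serp api", found: true, page: 1, overallRank: 12, url: "https://www.scrapingdog.com/" }, day));
console.log(toSheetRow({ keyword: "proxy list", found: false, page: 9 }, day));
```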

Finally, here is the blueprint for this automation that you can use for your domains.

Note: You need to build your own Google Sheet and connect it to the n8n workflow. For the domain you are tracking, change the domain name in the “Edit fields1” node. I explain how to set it up in the video mentioned above, or you can go to the video link here.

How To Further Optimize The Cost for Your Rank Tracker

Although the template is already designed to save you money, here are some more tips for optimizing your workflow.

- Track keywords only down to page 3–5 at most: this reduces your API cost further. If you are into SEO, you already know which keywords matter for your domain, so you can avoid the cost of tracking keywords beyond the 5th page.

- Select an economical API: this one is a choice, but since cost is one of the factors to consider, you should choose an API that is economical. We have made a list of the best search scraping APIs that you can refer to. The best part is that each of the APIs mentioned in the linked blog offers some free credits to test the API before committing to a paid plan.

- Track two or three times a week: daily tracking is an option, but to reduce the cost further, the workflow can be scheduled to run 2–3 times a week. This depends entirely on your needs.
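To see how the page cap and the tracking frequency combine, here is a back-of-the-envelope sketch. The keyword count and schedule are example values, the 4-week month is a simplification, and the result is a worst-case bound because the workflow stops early whenever a keyword is found. `monthlyRequests` is a hypothetical helper.

```javascript
// Rough upper bound on monthly API requests. All inputs are example values.
function monthlyRequests({ keywords, maxPages, runsPerWeek, weeksPerMonth = 4 }) {
  // Worst case: every keyword needs every page up to maxPages on every run.
  return keywords * maxPages * runsPerWeek * weeksPerMonth;
}

const daily10 = monthlyRequests({ keywords: 50, maxPages: 10, runsPerWeek: 7 });
const thrice5 = monthlyRequests({ keywords: 50, maxPages: 5, runsPerWeek: 3 });
console.log({ daily10, thrice5 });
```

Multiply the result by your API's per-request price to compare plans before you commit to one.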

Conclusion

If you need our help setting up the rank tracking workflow for your brand, let us know on the website chat, and we will be happy to help you out.

Once you have set it up, run the workflow and keep an eye on the results. If everything looks good, you can schedule the first node for your preferred daily time.