You build a link, it gets removed a few days later, and you don't find out until you recheck it manually.

Checking one link by hand is fine, but what about when you manage multiple websites and dozens of links?

Checking them all manually isn't realistic unless you are superhuman.

If you are not, which I suppose is true for most of us, you can hire someone to manage this, but that is also prone to error.

Or there is a better way around it: you can build a simple automation that checks your links periodically.

Yes, popular SEO tools offer this as one of their features, but they are expensive, especially if you are a freelancer, and the cost can be hard to justify.

Why We Felt the Need to Build This Workflow

I manage marketing at Scrapingdog, and yes, we build links through Slack communities, LinkedIn connections, and email outreach. Until now, we kept a database where we noted down each of these links along with its anchor text and target page.

I recently noticed some of our links being removed and felt an urgent need to track them. Fortunately, we have a web scraping API of our own that lets us build this workflow, and I will show you how to do the same for your own projects at a minimal cost.

Moreover, this automation can track links for multiple domains from a single spreadsheet, which makes it a flexible option.

Tools Used To Build This Workflow

- Scrapingdog (Web Scraper API): to extract the HTML of the page where the anchor text & link exist.

- n8n: to tie all the logic together.

- Google Sheets: to hold the database where we record everything that happens to our links.

Before we build the workflow, you need to prepare your own database in Google Sheets.

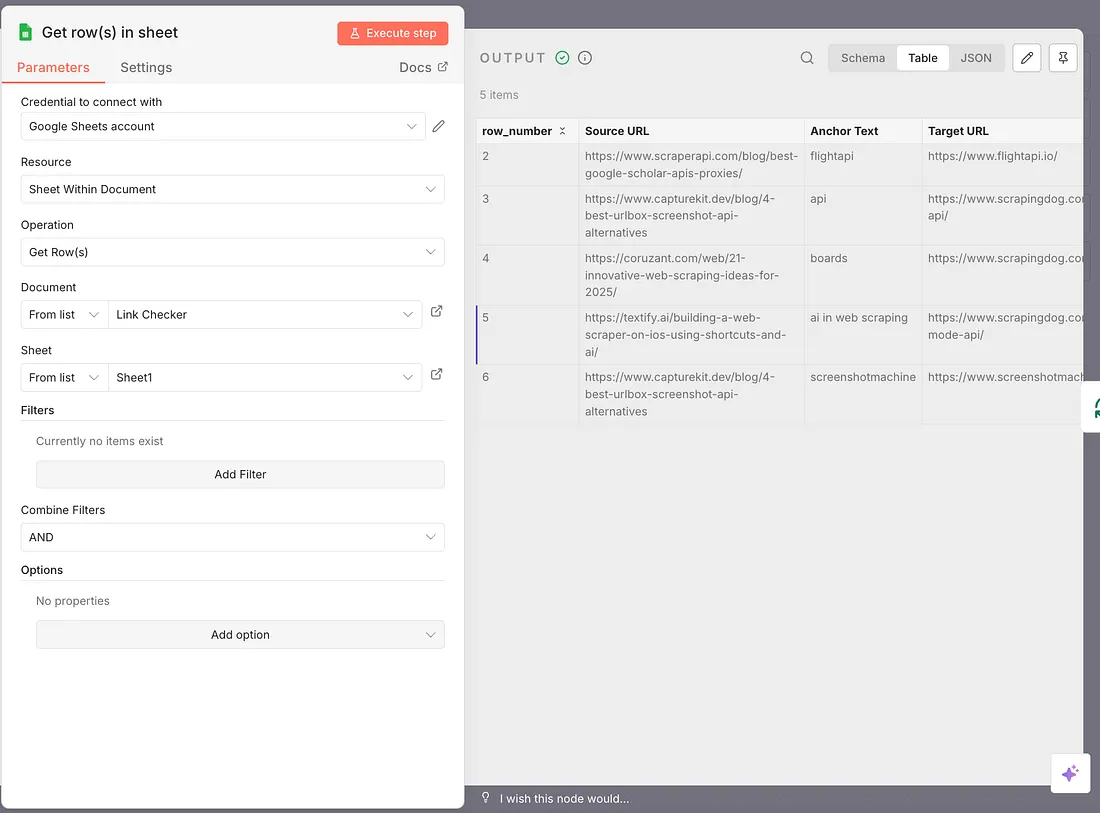

It should look something like this. This is a copy of my original sheet, & here is the link to it.

Let’s now start building our workflow!

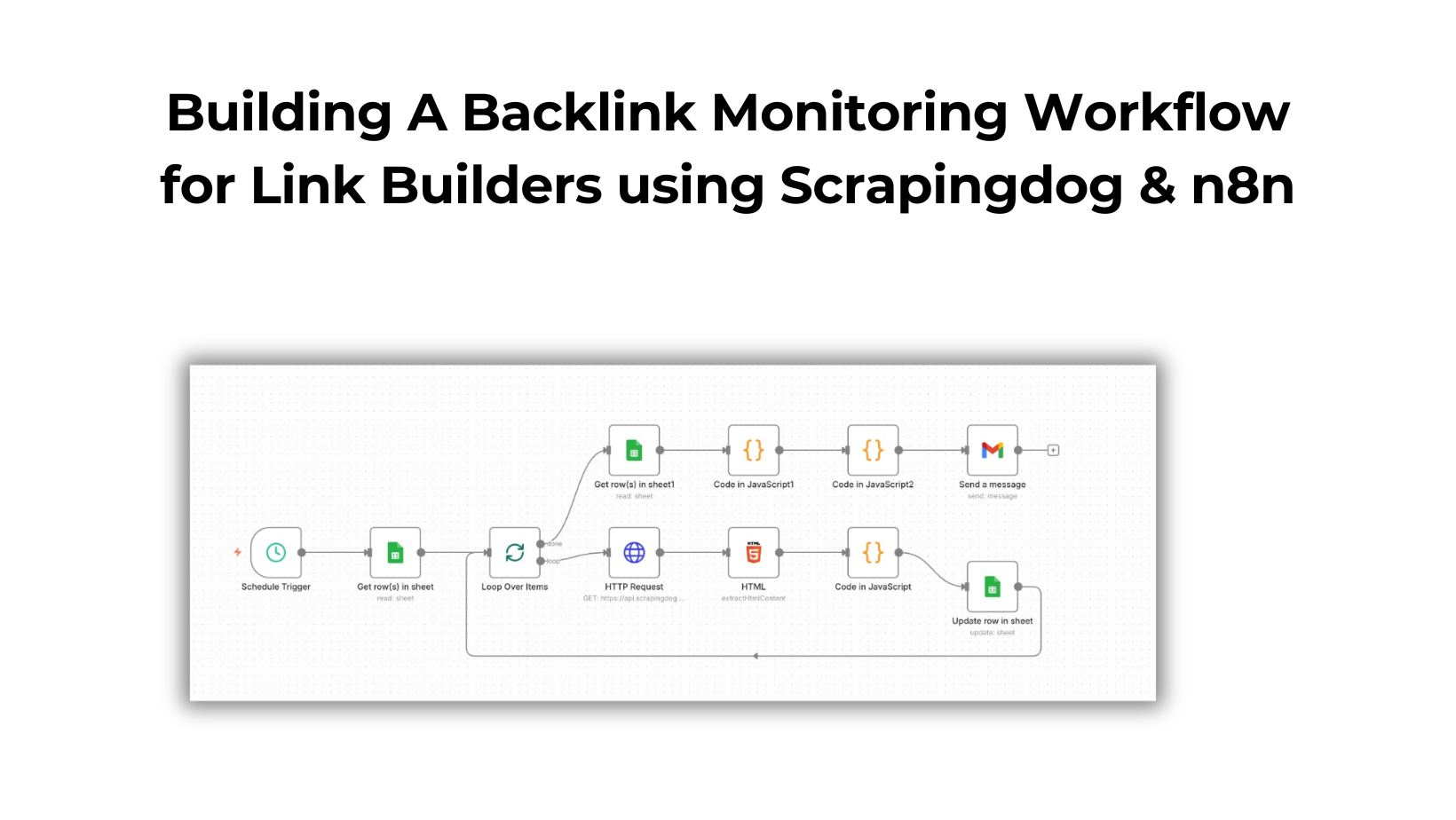

Building Our Workflow

Sign up for n8n if you don’t already have a self-hosted or paid instance. Once you do, you land on the n8n canvas, where you can create a new workflow.

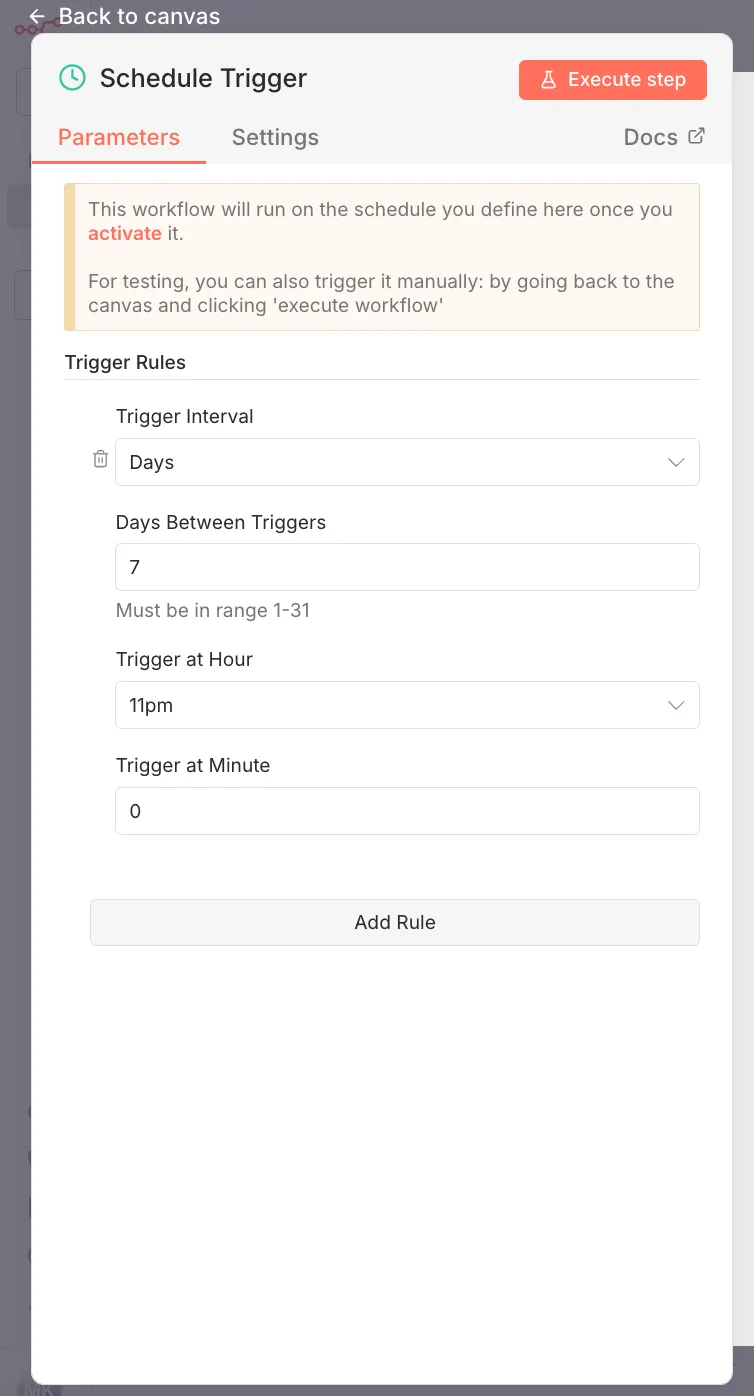

If you are somewhat familiar with how no-code tools work, you know that each workflow starts with a trigger.

We are using a “Schedule Trigger” set to run once per week. You can set the interval to whatever suits you.

The next node is a Google Sheets node. Every time you secure a link, you have to add a row to the sheet with 3 data points, i.e., “Source URL”, “Anchor Text”, and “Target URL”.

This node reads the data from those fields; the operation is ‘Get Rows’. Point it at the right spreadsheet and tab, and apply no filter, since we want to check all the links every time the workflow runs.

If you have already filled in some links, the output of this node will look like this.

Great, as you can see, we have all 3 data points in the output. We will now use Scrapingdog’s Web Scraping API to extract the HTML from the source URLs.
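Before scraping, it can help to confirm that every row really carries those three fields. Here is a minimal sketch, assuming the column headers are `Source URL`, `Anchor Text`, and `Target URL` (the names the email code later in this post reads). The `normalizeRow` helper is hypothetical and not part of the blueprint.

```javascript
// Hypothetical helper: tidies and validates one row from the Google Sheets node.
// Assumption: column headers are 'Source URL', 'Anchor Text', 'Target URL'.
function normalizeRow(row) {
  const sourceUrl = String(row['Source URL'] || '').trim();
  const anchorText = String(row['Anchor Text'] || '').trim();
  const targetUrl = String(row['Target URL'] || '').trim();

  // Collect human-readable problems instead of throwing, so one bad row
  // does not stop the whole run.
  const problems = [];
  if (!/^https?:\/\//i.test(sourceUrl)) problems.push('Source URL must start with http(s)://');
  if (!anchorText) problems.push('Anchor Text is empty');
  if (!/^https?:\/\//i.test(targetUrl)) problems.push('Target URL must start with http(s)://');

  return { sourceUrl, anchorText, targetUrl, valid: problems.length === 0, problems };
}
```

Rows where `valid` is false could be skipped or flagged in the sheet rather than sent to the scraper.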

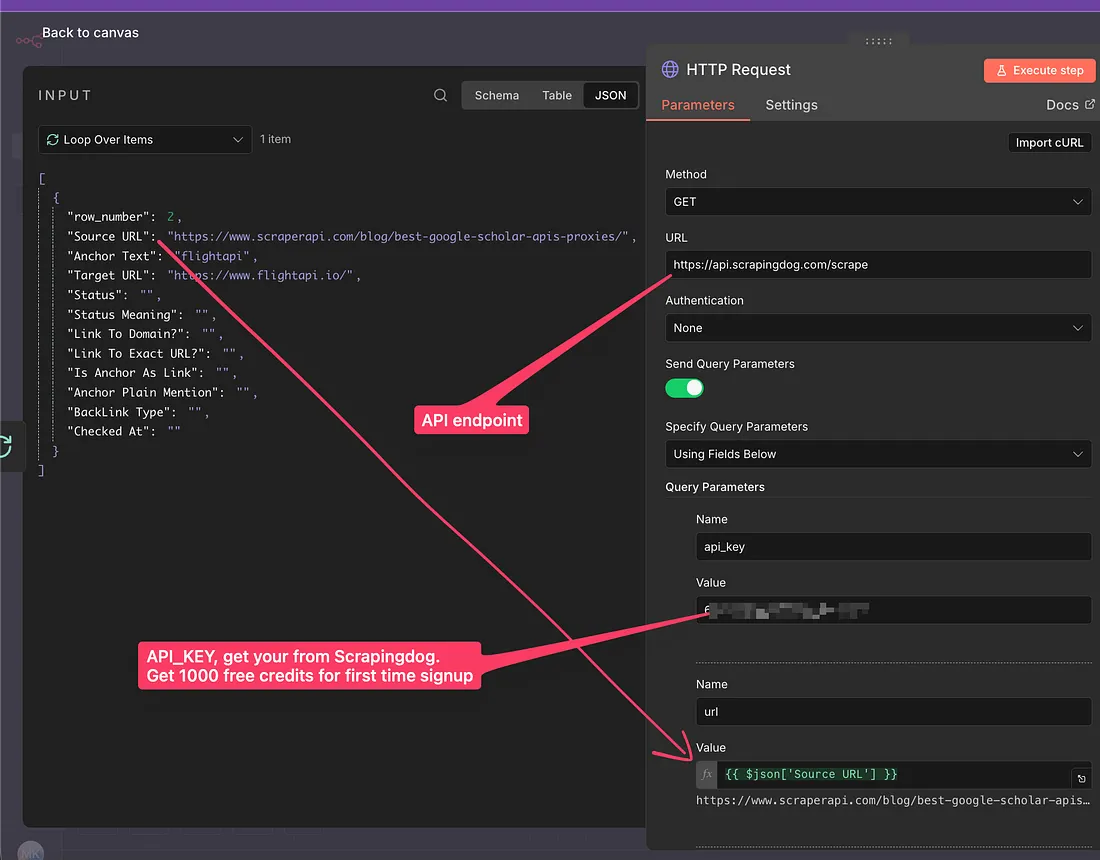

To call it, we use an HTTP Request node. You can read more about this API in the documentation here.

But before that, since we have multiple URLs, we need a loop node before the HTTP node so that we can scrape every link in the list.

The loop node’s configuration is simple. After that, we connect the HTTP node to the loop output.

This returns the full HTML of the source page.
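In the HTTP node, this boils down to a single GET request. Below is a minimal sketch of how that URL could be assembled, assuming the general scraping endpoint `https://api.scrapingdog.com/scrape` with `api_key`, `url`, and `dynamic` query parameters; please confirm the exact parameters against Scrapingdog's documentation. `buildScrapeUrl` is a hypothetical helper name.

```javascript
// Sketch: builds the request URL for one source page.
// Assumption: endpoint and parameter names as described in the lead-in.
function buildScrapeUrl(apiKey, sourceUrl) {
  const params = new URLSearchParams({
    api_key: apiKey,
    url: sourceUrl,   // URLSearchParams takes care of the encoding
    dynamic: 'false', // assumption: no JavaScript rendering needed
  });
  return `https://api.scrapingdog.com/scrape?${params.toString()}`;
}
```

Outside n8n you could pass the result to `fetch()` and read the response with `res.text()`. Inside n8n, the HTTP node lets you enter the same parameters as query fields.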

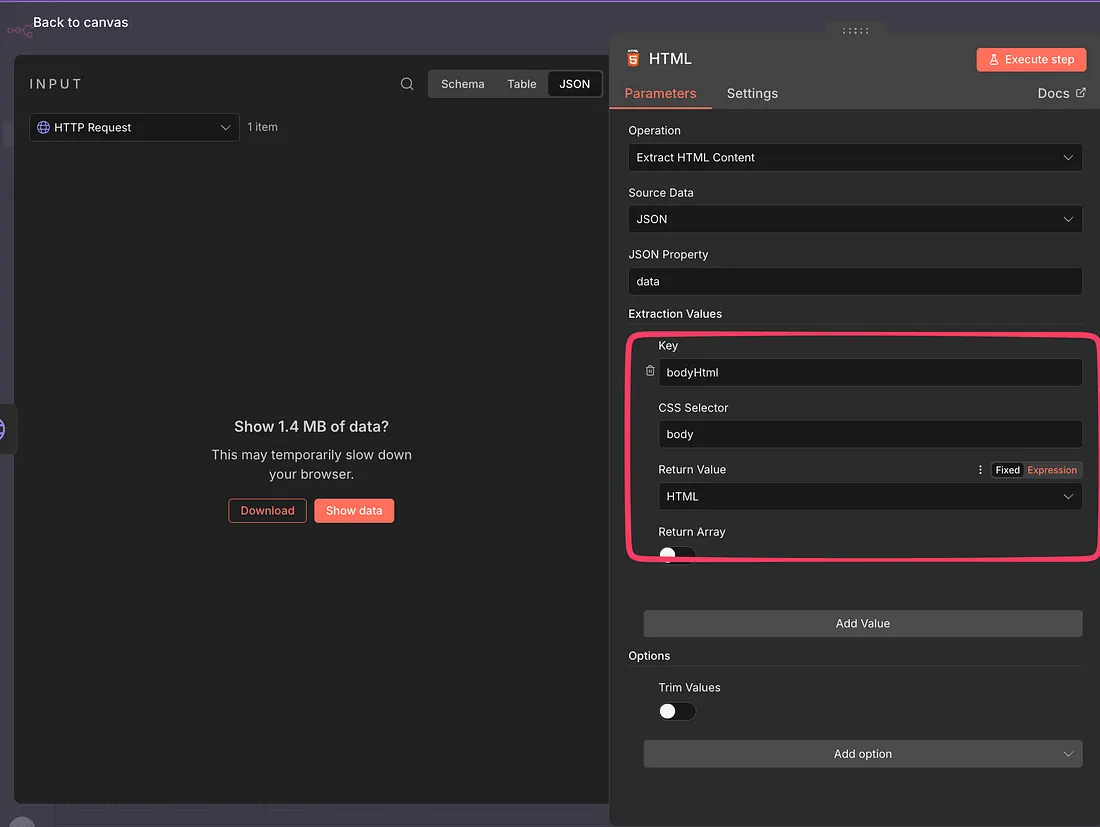

All the links you build will be in the body of the content, which makes sense, as these are the links that benefit you. Hence, we will not use the whole HTML, only its body, and that is what the next node does.

The next node is an HTML node, & here is its configuration. I won’t go into the nitty-gritty details.

This extracts the body from the whole HTML of the page.
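If you want to try the same step outside n8n, the idea can be approximated in plain JavaScript. This is a regex-based sketch, not a real HTML parser, and `extractBody` is a hypothetical helper. The HTML node itself is configured through the n8n UI.

```javascript
// Sketch: returns the inner HTML of <body>, or the input unchanged
// when no <body> element is found.
function extractBody(html) {
  const match = /<body\b[^>]*>([\s\S]*?)<\/body\s*>/i.exec(html);
  return match ? match[1] : html;
}
```

A regex is enough for a quick check, but pages with unusual markup are better handled by a proper parser.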

Next, I use a Code node with JavaScript that helps our workflow check a few points:

- Is there any link that points to my domain? (True/False)

- Is there any link that matches the target URL I provided? (True/False)

- Does the anchor I provided carry my target link? (True/False)

- Is the anchor text present but without a link? (True/False)

- Is the link dofollow, nofollow, sponsored, or UGC?
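The code actually used for these checks ships with the blueprint. The following is only a rough sketch of how the five checks could be expressed, under a few assumptions: links are found with a regex rather than a parser, URLs are compared without query strings or trailing slashes, and the target URL is a valid absolute URL. All function and field names here are hypothetical.

```javascript
// Collect every <a> tag with its href, rel, and visible text.
function extractLinks(bodyHtml) {
  const links = [];
  const re = /<a\b([^>]*)>([\s\S]*?)<\/a>/gi;
  let m;
  while ((m = re.exec(bodyHtml)) !== null) {
    const attrs = m[1];
    const href = (/(?:^|\s)href\s*=\s*["']([^"']*)["']/i.exec(attrs) || [])[1] || '';
    const rel = (/(?:^|\s)rel\s*=\s*["']([^"']*)["']/i.exec(attrs) || [])[1] || '';
    const text = m[2].replace(/<[^>]+>/g, '').replace(/\s+/g, ' ').trim();
    links.push({ href, rel: rel.toLowerCase(), text });
  }
  return links;
}

// Compare URLs by origin + path only; relative or invalid URLs become ''.
function normalizeUrl(url) {
  try {
    const u = new URL(url);
    return (u.origin + u.pathname).replace(/\/+$/, '').toLowerCase();
  } catch {
    return '';
  }
}

// Host without a leading "www."; '' for relative or invalid URLs.
function hostOf(url) {
  try {
    return new URL(url).hostname.replace(/^www\./, '');
  } catch {
    return '';
  }
}

// Map a rel attribute to one backlink type.
function relType(rel) {
  const tokens = rel.split(/\s+/);
  if (tokens.includes('sponsored')) return 'sponsored';
  if (tokens.includes('ugc')) return 'ugc';
  if (tokens.includes('nofollow')) return 'nofollow';
  return 'dofollow';
}

function analyzeLinks(bodyHtml, anchorText, targetUrl) {
  const links = extractLinks(bodyHtml);
  const target = normalizeUrl(targetUrl);
  const domain = hostOf(targetUrl);
  const wantedAnchor = anchorText.trim().toLowerCase();

  const domainLinks = links.filter((l) => domain !== '' && hostOf(l.href) === domain);
  const targetLinks = links.filter((l) => normalizeUrl(l.href) === target);
  const anchorLinks = links.filter((l) => l.text.toLowerCase() === wantedAnchor);
  const anchorWithTarget = anchorLinks.find((l) => normalizeUrl(l.href) === target);

  // Anchor text appears somewhere in the page text, but no <a> carries it.
  const plainText = bodyHtml.replace(/<[^>]+>/g, ' ').replace(/\s+/g, ' ').toLowerCase();
  const best = anchorWithTarget || targetLinks[0] || domainLinks[0];

  return {
    linksToDomain: domainLinks.length > 0,
    targetUrlFound: targetLinks.length > 0,
    anchorHasTarget: Boolean(anchorWithTarget),
    anchorPresentNoLink: plainText.includes(wantedAnchor) && anchorLinks.length === 0,
    relType: best ? relType(best.rel) : '', // empty when no link was found
  };
}
```

Matching on normalized URLs is a deliberate simplification. If your target pages depend on query parameters, compare the full URL instead.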

Based on these points, I build the report & finally update it in Google Sheets.

This is how the loop continues: once the data is written to the spreadsheet, we connect the Google Sheets node back to the loop node so that the next link gets processed.
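Conceptually, the loop handles one sheet row at a time and moves on to the next once the result is saved. A rough plain-JavaScript equivalent is sketched below. `fetchHtml` and `checkRow` are hypothetical callbacks standing in for the HTTP and Code nodes, and `Fetch failed` is an illustrative status label, not one taken from the blueprint.

```javascript
// Sketch: process rows sequentially so one failing page does not stop the run.
async function checkAllRows(rows, fetchHtml, checkRow) {
  const results = [];
  for (const row of rows) {
    try {
      const html = await fetchHtml(row['Source URL']);
      // Merge the check results into the original row.
      results.push({ ...row, ...checkRow(html, row) });
    } catch (err) {
      // Record the failure and keep going with the next row.
      results.push({ ...row, Status: 'Fetch failed', error: String(err.message || err) });
    }
  }
  return results;
}
```

Processing rows one by one is slower than running them in parallel, but it keeps the request rate low.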

Sending an Email Alert About the Reports

Until now, your Google Sheet has been updated periodically, depending on the trigger you have set.

We will take our workflow a step further, so that whenever all the link data has been updated, a notification is sent to us via email.

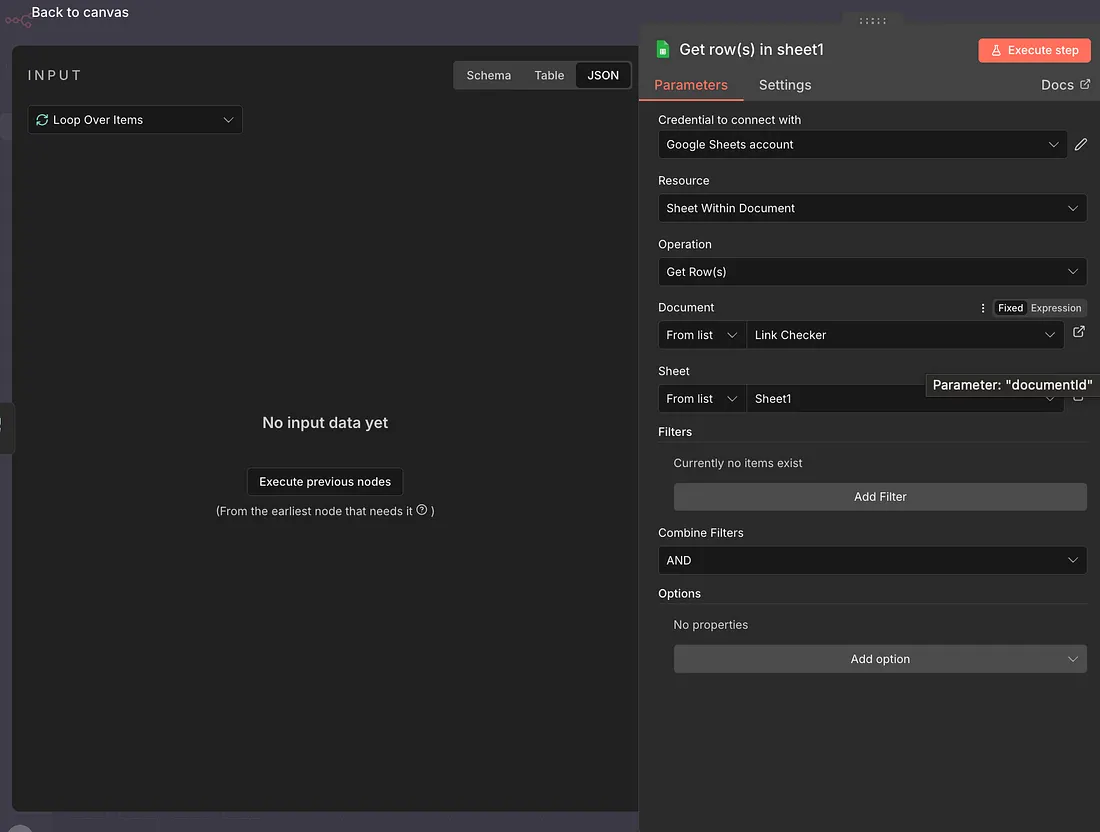

So from the loop node’s done output, we fetch all the rows from the spreadsheet again using Google Sheets → Get Rows.

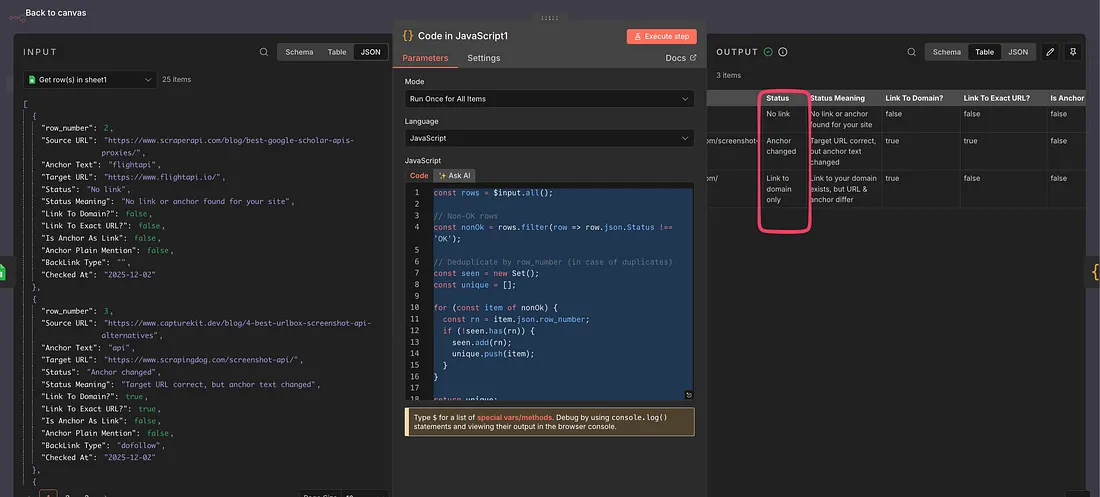

Next comes a Code node with JavaScript that keeps all the rows that have a status other than OK.

A status is OK when both your anchor and target URL match; if either of them doesn’t, the status is different.
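To make that rule concrete, here is one way the status could be derived from the individual checks. This is a sketch under assumptions: the checks are available as booleans named `anchorHasTarget`, `targetUrlFound`, `linksToDomain`, and `anchorPresentNoLink` (hypothetical names), and every label other than `OK` is a placeholder. The real labels come from the blueprint.

```javascript
// Sketch: collapse the individual checks into a single status label.
// Only 'OK' is confirmed by this post; the other labels are placeholders.
function deriveStatus(checks) {
  if (checks.anchorHasTarget) return 'OK';                 // anchor and target both match
  if (checks.targetUrlFound) return 'Anchor changed';      // right URL, different anchor
  if (checks.linksToDomain) return 'URL changed';          // links to us, different page
  if (checks.anchorPresentNoLink) return 'Link removed';   // text is there, link is gone
  return 'Not found';
}
```

The order of the checks matters: the most specific match is tested first, so a row only falls through to a weaker status when the stronger condition fails.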

Here are all the statuses:

Let’s connect that Code node now. Its JS keeps only the rows with Status ≠ OK and drops everything else.

Here is the JS code that we used; you will also get it with the blueprint I will share.

```javascript
const rows = $input.all();

// Non-OK rows
const nonOk = rows.filter(row => row.json.Status !== 'OK');

// Deduplicate by row_number (in case of duplicates)
const seen = new Set();
const unique = [];

for (const item of nonOk) {
  const rn = item.json.row_number;
  if (!seen.has(rn)) {
    seen.add(rn);
    unique.push(item);
  }
}

return unique;
```

The output from this node would look something like this

Now, since we have all the rows that have Status ≠ OK, we will draft an email using HTML.

The next node is another Code node, whose JavaScript drafts the email in HTML; we will use that HTML in the final node, which sends the email.

Here is the code for it:

```javascript
// Get all "bad" rows from previous node
const items = $input.all();
const rows = items.map(i => i.json);

// If there are no issues, still send a small “all good” report.
const today = new Date().toISOString().slice(0, 10);

// Build summary by Status (Anchor changed, URL changed, etc.)
const statusCounts = {};
for (const r of rows) {
  const s = r.Status || 'Unknown';
  statusCounts[s] = (statusCounts[s] || 0) + 1;
}

// Summary rows HTML
let summaryRowsHtml = '';
for (const [status, count] of Object.entries(statusCounts)) {
  summaryRowsHtml += `
    <tr>
      <td style="padding:8px 12px;border:1px solid #eee;">${status}</td>
      <td style="padding:8px 12px;border:1px solid #eee;text-align:right;">${count}</td>
    </tr>
  `;
}

if (!summaryRowsHtml) {
  summaryRowsHtml = `
    <tr>
      <td style="padding:8px 12px;border:1px solid #eee;">No issues 🎉</td>
      <td style="padding:8px 12px;border:1px solid #eee;text-align:right;">0</td>
    </tr>
  `;
}

// Detail rows HTML
let detailRowsHtml = '';
for (const r of rows) {
  const sourceUrl = r['Source URL'] || '';
  const anchor = r['Anchor Text'] || '';
  const targetUrl = r['Target URL'] || '';
  const status = r.Status || '';
  const meaning = r['Status Meaning'] || '';
  const type = r['BackLink Type'] || r.relType || '';
  const checked = r['Checked At'] || '';

  detailRowsHtml += `
    <tr>
      <td style="padding:8px 12px;border:1px solid #eee;word-break:break-all;">
        <a href="${sourceUrl}" style="color:#2563eb;text-decoration:none;">${sourceUrl}</a>
      </td>
      <td style="padding:8px 12px;border:1px solid #eee;">${anchor}</td>
      <td style="padding:8px 12px;border:1px solid #eee;word-break:break-all;">
        <a href="${targetUrl}" style="color:#2563eb;text-decoration:none;">${targetUrl}</a>
      </td>
      <td style="padding:8px 12px;border:1px solid #eee;">${status}</td>
      <td style="padding:8px 12px;border:1px solid #eee;">${meaning}</td>
      <td style="padding:8px 12px;border:1px solid #eee;">${type}</td>
      <td style="padding:8px 12px;border:1px solid #eee;">${checked}</td>
    </tr>
  `;
}

if (!detailRowsHtml) {
  detailRowsHtml = `
    <tr>
      <td colspan="7" style="padding:16px 12px;border:1px solid #eee;text-align:center;color:#6b7280;">
        No non-OK backlinks for this run. All monitored links are OK ✅
      </td>
    </tr>
  `;
}

const totalIssues = rows.length;

const html = `
<!doctype html>
<html>
  <head>
    <meta charset="utf-8" />
    <title>Backlink Issues Report</title>
  </head>
  <body style="margin:0;padding:24px;font-family:-apple-system,BlinkMacSystemFont,Segoe UI,Roboto,Helvetica,Arial,sans-serif;background:#f3f4f6;">
    <table role="presentation" style="max-width:900px;margin:0 auto;background:#ffffff;border-radius:8px;border:1px solid #e5e7eb;padding:24px;">
      <tr>
        <td>
          <h1 style="margin:0 0 4px;font-size:24px;color:#111827;">Backlink Issues Report</h1>
          <p style="margin:0 0 16px;color:#6b7280;font-size:14px;">
            Date: <strong>${today}</strong><br/>
            Non-OK links in this run: <strong>${totalIssues}</strong>
          </p>

          <h2 style="margin:24px 0 8px;font-size:18px;color:#111827;">Summary by Status</h2>
          <table cellpadding="0" cellspacing="0" style="width:100%;border-collapse:collapse;font-size:14px;">
            <thead>
              <tr style="background:#f9fafb;">
                <th align="left" style="padding:8px 12px;border:1px solid #eee;">Status</th>
                <th align="right" style="padding:8px 12px;border:1px solid #eee;">Count</th>
              </tr>
            </thead>
            <tbody>
              ${summaryRowsHtml}
            </tbody>
          </table>

          <h2 style="margin:32px 0 8px;font-size:18px;color:#111827;">Link Details</h2>
          <table cellpadding="0" cellspacing="0" style="width:100%;border-collapse:collapse;font-size:13px;">
            <thead>
              <tr style="background:#f9fafb;">
                <th style="padding:8px 12px;border:1px solid #eee;text-align:left;">Source URL</th>
                <th style="padding:8px 12px;border:1px solid #eee;text-align:left;">Anchor</th>
                <th style="padding:8px 12px;border:1px solid #eee;text-align:left;">Target URL</th>
                <th style="padding:8px 12px;border:1px solid #eee;text-align:left;">Status</th>
                <th style="padding:8px 12px;border:1px solid #eee;text-align:left;">Status Meaning</th>
                <th style="padding:8px 12px;border:1px solid #eee;text-align:left;">Backlink Type</th>
                <th style="padding:8px 12px;border:1px solid #eee;text-align:left;">Checked At</th>
              </tr>
            </thead>
            <tbody>
              ${detailRowsHtml}
            </tbody>
          </table>

          <p style="margin:24px 0 0;font-size:12px;color:#9ca3af;text-align:center;">
            Generated automatically by your backlink checker workflow.
          </p>
        </td>
      </tr>
    </table>
  </body>
</html>
`;

// Return a SINGLE item carrying the HTML + some meta
return {
  json: {
    html,
    date: today,
    totalIssues,
  },
};
```
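One thing worth considering: the values from the sheet are dropped into the HTML as they are, so an anchor text containing `<` or `&` could break the email layout. A small escaping helper is one possible safeguard. `escapeHtml` is a hypothetical addition and not part of the blueprint.

```javascript
// Sketch: escape characters that have special meaning in HTML.
function escapeHtml(value) {
  return String(value ?? '')
    .replace(/&/g, '&amp;') // must run first so later entities are not re-escaped
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#39;');
}
```

In the template you would then write `${escapeHtml(anchor)}` instead of `${anchor}`, and likewise for the other fields.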

And here is the blueprint for this automation that you can use as is in your workflow.

Download this blueprint to your machine and import it into your n8n canvas. You will also need Scrapingdog’s API_KEY, which you can find in your dashboard after signing up for free.

Here is a video tutorial of this workflow.

In case you need any help setting this up, you can reach out to us on chat & we are happy to help!