LinkedIn scrapers are in high demand in 2025. LinkedIn has the largest pool of corporate professionals available today.

So naturally, you can find many people on this platform who share your interests. But LinkedIn can also supply data that helps you sell goods and products.

LinkedIn has more than 800 million active users who share their work experience, skills, and achievements daily. If you scrape this data and use it wisely, you can generate a lot of leads for your business.

Of course, this data can also be used for other purposes, such as finding the right candidate for a job or enriching your own CRM.

In this article, we will talk about the best LinkedIn scrapers on the market. Using these scrapers, you can scrape LinkedIn at scale.

Advantages of using LinkedIn Scrapers Instead of Collecting Data Manually

- You will always stay anonymous. A new IP is used for each request, so your own IP stays hidden.

- The pricing is lower than that of the official API.

- You can get parsed JSON as the output.

- Third-party APIs can be customized to your requirements.

- 24/7 support is available with many of them.
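
To illustrate the parsed-JSON point, here is a minimal Python sketch of consuming such a response. The sample payload and its field names (`fullName`, `headline`, `experience`, `company`) are hypothetical placeholders, not the schema of any particular provider, so check your provider's documentation for the real fields.

```python
import json
from dataclasses import dataclass, field
from typing import List


@dataclass
class Profile:
    """Typed view over the few fields this sketch cares about."""
    full_name: str
    headline: str
    companies: List[str] = field(default_factory=list)


def parse_profile(raw: str) -> Profile:
    """Turn a JSON string (hypothetical schema) into a Profile."""
    data = json.loads(raw)
    # .get() with defaults keeps the parser tolerant of missing keys.
    return Profile(
        full_name=data.get("fullName", ""),
        headline=data.get("headline", ""),
        companies=[job.get("company", "") for job in data.get("experience", [])],
    )


# Made-up sample payload standing in for an API response.
SAMPLE = (
    '{"fullName": "Jane Doe", "headline": "Data Engineer", '
    '"experience": [{"company": "Acme"}, {"company": "Globex"}]}'
)

profile = parse_profile(SAMPLE)
print(profile.full_name, profile.companies)
```

Because the output is already structured, pushing it into a CRM or a spreadsheet is mostly a matter of mapping fields, with no HTML parsing involved.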

Some Challenges of Scraping LinkedIn at Scale

If you have researched enough, you must know by now that there are tools that can scrape a few hundred profiles or jobs without any problem.

These tools rely on either your LinkedIn profile or LinkedIn Sales Navigator, which limits how much data they can extract.

The main problems arise when you scrape LinkedIn at scale. Some of them are:

- Legal and Ethical Issues:

  - User Agreement Violation: LinkedIn’s terms of service prohibit the scraping of their website. Engaging in this activity can result in legal action against the perpetrators.

  - Privacy Concerns: Extracting user data without consent violates privacy norms and can lead to severe repercussions. I have discussed the legality of scraping LinkedIn in detail in this blog.

- Technical Challenges:

  - Rate Limits: LinkedIn monitors and restricts frequent and massive data requests. An IP address can be temporarily banned if it makes too many requests in a short period.

  - Dynamic Content Loading: LinkedIn uses AJAX and infinite scrolling to load content dynamically. Traditional scraping methods often fail to capture this kind of content.

  - Complex Website Structure: LinkedIn’s DOM structure is intricate, and elements might not have consistent class or ID names. This can make the scraping process unstable.

  - Captchas: LinkedIn employs captchas to deter automated bots, making scraping even more challenging.

  - Cookies and Sessions: Managing sessions and cookies is necessary to mimic a real user browsing pattern and avoid detection.

- Maintenance Issues:

  - Frequent Changes: LinkedIn, like other modern web platforms, frequently changes its user interface and underlying code. This means scrapers need constant updating to remain functional.

  - Data Quality: Ensuring the scraped data’s accuracy, relevancy, and completeness can be challenging, especially at scale.

- Infrastructure and Costs:

  - Large-scale Scraping: Scraping at scale requires a distributed system, proxy networks, and cloud infrastructure, increasing the complexity and costs.

  - Data Storage: Storing vast amounts of scraped data efficiently and securely is another challenge.

- Anti-Scraping Mechanisms:

  - Sophisticated Detection: LinkedIn employs sophisticated bot detection mechanisms. Mimicking human-like behavior becomes essential to avoid detection.

  - Continuous Monitoring: LinkedIn monitors for suspicious activities and can block accounts or IP addresses even if you successfully scrape data.
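
The rate-limit item in the list above is commonly handled on the client side by slowing down whenever a request is throttled. The sketch below shows one generic pattern, exponential backoff with jitter. The `fetch` callable and its `(status, body)` return shape are hypothetical, and treating HTTP 429 as the throttling signal is an assumption, not documented LinkedIn behavior.

```python
import random
import time
from typing import Callable, Optional, Tuple


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 60.0) -> float:
    """Exponential delay for a 0-based attempt number, capped at `cap` seconds."""
    return min(cap, base * (2 ** attempt))


def fetch_with_backoff(
    fetch: Callable[[], Tuple[int, str]],
    max_attempts: int = 5,
    sleep: Callable[[float], None] = time.sleep,
    jitter: Callable[[float], float] = lambda d: random.uniform(0, d),
) -> Optional[str]:
    """Call `fetch` until it succeeds, fails hard, or attempts run out.

    `fetch` is a hypothetical zero-argument callable returning (status, body).
    """
    for attempt in range(max_attempts):
        status, body = fetch()
        if status == 200:
            return body
        if status != 429:
            # Not a throttling response, so retrying is unlikely to help.
            return None
        if attempt < max_attempts - 1:
            # Jitter: wait a random time up to the exponential delay.
            sleep(jitter(backoff_delay(attempt)))
    return None


# Demo with a fake fetch that is throttled twice before succeeding.
# The sleep and jitter hooks are replaced so the demo runs instantly.
responses = iter([(429, ""), (429, ""), (200, "profile-json")])
waits = []
result = fetch_with_backoff(
    lambda: next(responses), sleep=waits.append, jitter=lambda d: d
)
print(result, waits)
```

Injecting `sleep` and `jitter` keeps the retry logic testable without real waiting. At scale this logic has to run per IP and per session, which is part of why the infrastructure cost mentioned above grows so quickly.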

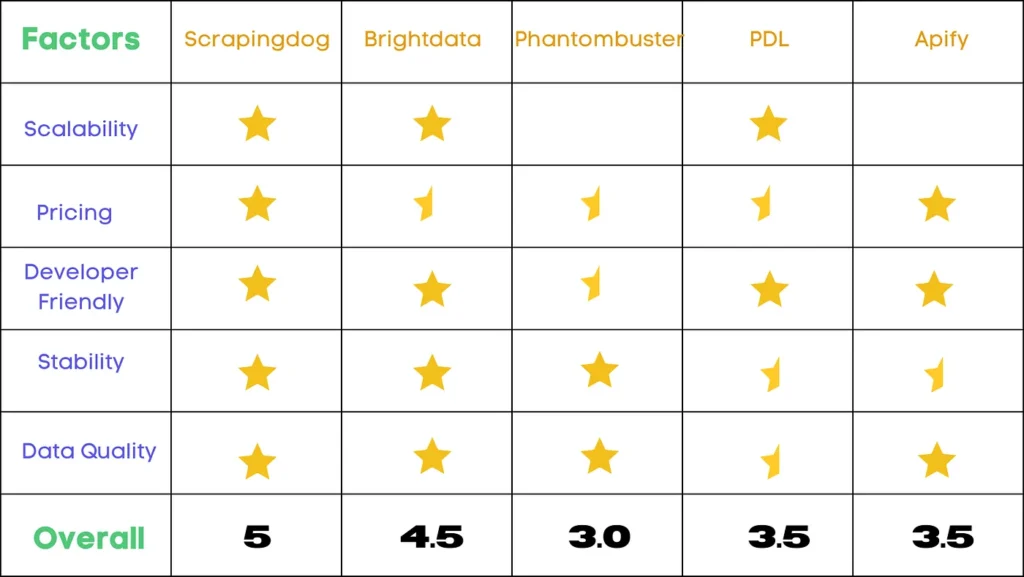

Best LinkedIn Scrapers [In 2025]

We will judge these LinkedIn scraper APIs on five attributes.

- Scalability means how many pages you can scrape in a day.

- Pricing refers to the cost of one API call.

- Developer-friendly refers to the ease with which a software engineer can use the service.

- Stability refers to how much load a service can handle and how long the service has been on the market.

- Data Quality refers to how fresh the data is.

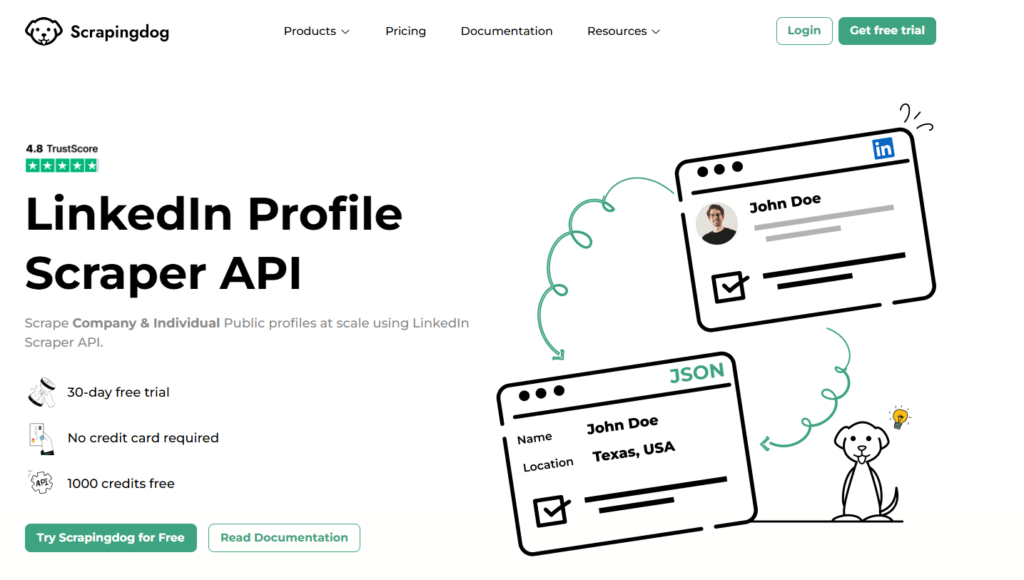

Scrapingdog’s LinkedIn Scraper API

Scrapingdog offers a simple and easy-to-use LinkedIn Scraper API.

This API can be used to scrape either a person’s profile or a company profile. In addition, Scrapingdog offers a LinkedIn Jobs scraper to scrape job data from this platform.

Scalability

You can scrape around 1 million profiles with Scrapingdog’s LinkedIn Scraper API, be it job data or profile data.

Pricing

The enterprise pack costs $1k per month and lets you scrape 110k profiles, so each profile costs about $0.009.
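
The per-profile figure is simply the plan price divided by the profile allowance. A quick check in Python, using only the two numbers quoted above:

```python
plan_price_usd = 1_000       # enterprise pack price per month, from the text
profiles_included = 110_000  # profiles covered by that pack, from the text

cost_per_profile = plan_price_usd / profiles_included
print(f"${cost_per_profile:.4f} per profile")  # prints "$0.0091 per profile"
```

Rounded to three decimals, that is the $0.009 quoted above.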

Developer Friendly

The documentation is self-explanatory, and you can test the API directly from the dashboard without setting up a coding environment.

Stability

Scrapingdog has been on the market for 5 years now and has more than 200 users, which speaks to its stability in scraping LinkedIn at scale.

Data Quality

We always scrape fresh data.

Here’s a video that demonstrates how you can use the API to scrape data at scale. ⬇️

Brightdata LinkedIn Scraper

Brightdata, along with its large proxy network, also provides LinkedIn scrapers.

Scalability

Their scalability is great, but the error rate might go up due to disturbances in their proxy pool. Brightdata goes down frequently.

Pricing

Their solution is relatively expensive. The per-profile cost is around $0.05.

Developer Friendly

The documentation is easy to read, and there are request builders that help you get started quickly.

Stability

No doubt Brightdata has the biggest proxy pool in the market, but the service does go down at times. Overall, though, their infrastructure is quite solid.

Data Quality

Like Scrapingdog, they scrape fresh data.

Phantombuster

Phantombuster is another LinkedIn scraper provider. Recently, they changed their messaging from web scraping services to lead generation services.

Scalability

Using Phantombuster, you can scrape only 80 profiles a day, and only with your own credentials and cookies. This may get your account blocked, so it cannot be used for mass scraping.

Pricing

The pricing for this service is not clear on their website.

Developer Friendly

No documentation is available on the website.

Stability

They simply use your cookies to scrape LinkedIn profiles. This service is not at all stable because, after 50 profiles, LinkedIn will block your account and the cookies will no longer be valid.

Data Quality

You will get fresh data.

People Data Labs

PDL provides data enrichment technology. You can use their APIs to find valuable insights for any prospect.

Scalability

PDL is designed for small projects. You cannot scrape thousands of profiles with it in a short time frame. So, the scalability is not great.

Pricing

It will cost you $0.28 per profile, which makes them very costly.
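
To put the per-profile prices quoted in this article side by side, the sketch below projects the cost of one batch. The batch size of 10,000 profiles is an arbitrary assumption for illustration, and only the three providers with a per-profile price in this article are included.

```python
from typing import Dict

# Per-profile prices in USD as quoted in this article.
PRICE_PER_PROFILE: Dict[str, float] = {
    "Scrapingdog": 0.009,
    "Brightdata": 0.05,
    "People Data Labs": 0.28,
}


def batch_cost(profiles: int) -> Dict[str, float]:
    """Projected cost in USD of scraping `profiles` profiles with each provider."""
    return {
        name: round(price * profiles, 2)
        for name, price in PRICE_PER_PROFILE.items()
    }


BATCH = 10_000  # hypothetical batch size, not a figure from the article
costs = batch_cost(BATCH)

# Print cheapest first.
for name, cost in sorted(costs.items(), key=lambda kv: kv[1]):
    print(f"{name:<18} ${cost:>9,.2f}")
```

At these list prices, PDL works out to roughly 31 times the Scrapingdog per-profile cost and more than 5 times the Brightdata one. Actual bills depend on plan tiers and volume discounts that this sketch ignores.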

Developer Friendly

The documentation is good, which makes them developer-friendly.

Stability

If you are OK with old data, then it is stable.

Data Quality

The data you get comes from an existing database because they don’t scrape it fresh. Their data sources are refreshed at regular intervals.

Apify

Apify is another great LinkedIn scraper. You can scrape a person’s profile as well as a company profile.

Scalability

Their API works only if you pass cookies from your logged-in LinkedIn account. With cookies from a normal account, you can scrape a maximum of 50 profiles; with cookies from a premium account, a maximum of 500 profiles. Once you reach these limits, LinkedIn will ban your account and log you out.

So, Apify is for those who just want to scrape a very small number of profiles.

Pricing

They provide a 3-day free trial, after which they charge $25 per month.

Developer Friendly

Documentation is very clear and whether you are a developer or a non-developer you will be able to scrape profiles using Apify. It provides a hook through which you can scrape profiles using make.com.

Stability

The service is completely stable, and you can scrape a small number of profiles very easily without getting blocked.

Data Quality

You will always get fresh data from their API. Since you are passing cookies, you will also get data like skills, history, etc., which are absent in almost all the other scrapers.

Final Verdict

We have compiled a report considering all the factors above. This report is the result of a comprehensive analysis of each API.

Although they may appear similar at first glance, further testing reveals that only a small number of APIs (one or two) are stable and suitable for production purposes.

Therefore, it is essential to evaluate the options based on your specific requirements and choose the most appropriate one from the given list.

I hope this list and my testing will help you pick one of them. If you liked the article, do consider sharing it.