When it comes to collecting location-based information, scraping Google Maps is one of the best options. Each listing offers phone numbers, reviews, ratings, operating hours, current open status, and more.

However, collecting this data manually is time-consuming, error-prone, and inefficient.

In this article, we’ll explore how to automate the process using Scrapingdog’s Google Maps API and Python.

Whether you’re looking to extract reviews, phone numbers, or other key details, this guide will show you how to scrape Google Maps data efficiently for local or B2B lead generation, depending on the business you do.

Where Can You Use this Scraped Data from Google Maps?

- You can use this data to generate leads. With every listing, you will get a phone number to reach out to.

- Reviews can be used for sentiment analysis of any place.

- Posts shared by the company can be used for real-estate mapping.

- Competitor analysis by scraping data like reviews and ratings of your competitor.

- Enhancing existing databases with real-time information from Google Maps.

Preparing the Food

I am assuming you have already installed Python on your machine. If not, install it from python.org first. Now, create a folder where you will keep your Python script. I am naming the folder maps.

mkdir maps

Next, install the two libraries we will use.

pip install requests

pip install pandas

requests will be used for making HTTP requests to the data source, and pandas will be used for storing the extracted data in a CSV file.

Once this is done, sign up for Scrapingdog’s trial pack.

The trial pack comes with 1000 free credits, which is enough for testing the API. We now have all the ingredients to prepare the Google Maps scraper.

Let’s dive in!!

Making the First Request

Let’s say I am a manufacturer of hardware items like taps, showers, etc., and I am looking for leads near my New York-based factory.

Now, I can use data from Google Maps to find hardware shops near me.

With the Google Maps API, I can extract their phone numbers and then reach out.

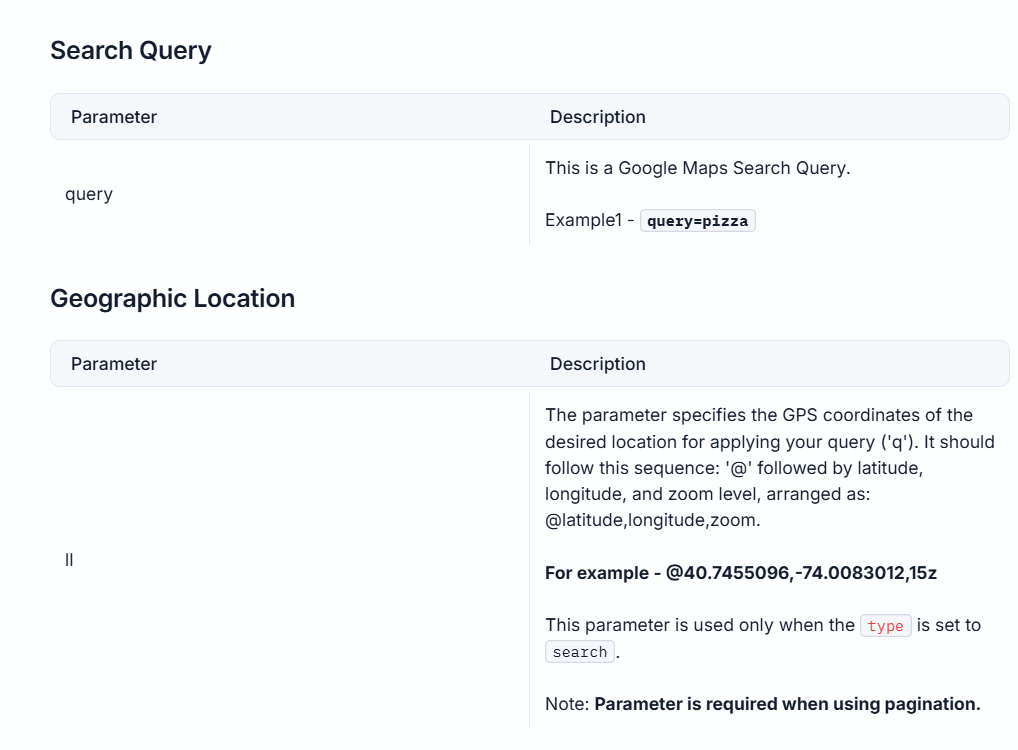

If you look at the Google Maps API documentation, you’ll notice that it requires two mandatory arguments in addition to your secret API key: coordinates and a query (see the image below).

The coordinates represent New York’s latitude and longitude, which you can easily extract from the Google Maps URL for the location. The query will be “hardware shops near me.”
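If you would rather not copy the coordinates by hand, here is a minimal sketch that pulls the `@lat,lng,zoom` segment out of a Google Maps URL with a regular expression. The helper name `extract_ll` and the example URL are made up for illustration, and the sketch assumes the URL carries the zoom level with a trailing `z`, as in the value used in this article.

```python
import re

# Matches the "@lat,lng,<zoom>z" segment found in Google Maps URLs.
LL_PATTERN = re.compile(r"@(-?\d+(?:\.\d+)?),(-?\d+(?:\.\d+)?),(\d+(?:\.\d+)?)z")


def extract_ll(maps_url):
    """Return the '@lat,lng,<zoom>z' string from a Google Maps URL, or None."""
    match = LL_PATTERN.search(maps_url)
    return match.group(0) if match else None


# Hypothetical URL, shaped like what the browser shows after searching for New York.
example_url = "https://www.google.com/maps/place/New+York/@40.6974881,-73.979681,10z"
print(extract_ll(example_url))  # @40.6974881,-73.979681,10z
```

The returned string can be passed as the coordinates argument without further changes.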

Before writing a single line of code, you can try this directly from your dashboard. Just enter the coordinates and query, and hit enter.

As you can see in the above GIF, you got a beautiful JSON response that shows every little detail, like operating hours, title, phone number, etc.

Also, you can watch the video below, in which I have quickly explained how you can use Scrapingdog’s Google Maps API.

We can code this in Python and save the data directly to a CSV file. For this, we will use the pandas library.

By the way, a Python code snippet is ready for you on the right-hand side of the dashboard.

Just copy that and paste it into a Python file inside your maps folder. I am naming this file as leads.py.

import requests
import pandas as pd

api_key = "your-api-key"
url = "https://api.scrapingdog.com/google_maps"

l = []  # one dictionary per listing

params = {
    "api_key": api_key,
    "query": "hardware shops near me",
    "ll": "@40.6974881,-73.979681,10z",
    "page": 0
}

response = requests.get(url, params=params)

if response.status_code == 200:
    data = response.json()
    for result in data['search_results']:
        # .get() returns None instead of raising KeyError when a listing lacks a field
        obj = {}
        obj['Title'] = result.get('title')
        obj['Address'] = result.get('address')
        obj['DataId'] = result.get('data_id')
        obj['PhoneNumber'] = result.get('phone')
        l.append(obj)
    # Convert the collected rows to a DataFrame and write them to disk
    df = pd.DataFrame(l)
    df.to_csv('leads.csv', index=False, encoding='utf-8')
else:
    print(f"Request failed with status code: {response.status_code}")

Once we get the results, we iterate over the search_results array in the JSON response. For each result, the loop extracts the title, address, data ID, and phone number and stores them in a dictionary obj, which is then appended to the list l.

After the loop finishes, the list l, which now contains all the extracted data, is converted into a Pandas DataFrame.

Finally, the DataFrame is saved to a CSV file named leads.csv. Passing index=False keeps row numbers out of the file, and encoding='utf-8' writes it in UTF-8.
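The loop described above can also be isolated into a small function, which makes it easy to check against sample data without spending API credits. This is only a sketch: the function name `results_to_rows` and the sample listings are made up, and it assumes the response keeps the `search_results`, `title`, `address`, `data_id`, and `phone` keys used in this article.

```python
def results_to_rows(data):
    """Flatten the API response into a list of CSV-ready dictionaries."""
    rows = []
    for place in data.get("search_results", []):
        rows.append({
            "Title": place.get("title", ""),
            "Address": place.get("address", ""),
            "DataId": place.get("data_id", ""),
            # Some listings may not publish a phone number, so default to "".
            "PhoneNumber": place.get("phone", ""),
        })
    return rows


# Made-up sample shaped like the response used in this article.
sample = {
    "search_results": [
        {"title": "Shop A", "address": "1 Example St", "data_id": "0x1:0x2", "phone": "+1 555-0100"},
        {"title": "Shop B", "address": "2 Example St", "data_id": "0x3:0x4"},
    ]
}
rows = results_to_rows(sample)
print(len(rows), repr(rows[1]["PhoneNumber"]))
```

The returned list can be handed to pd.DataFrame exactly like the list l.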

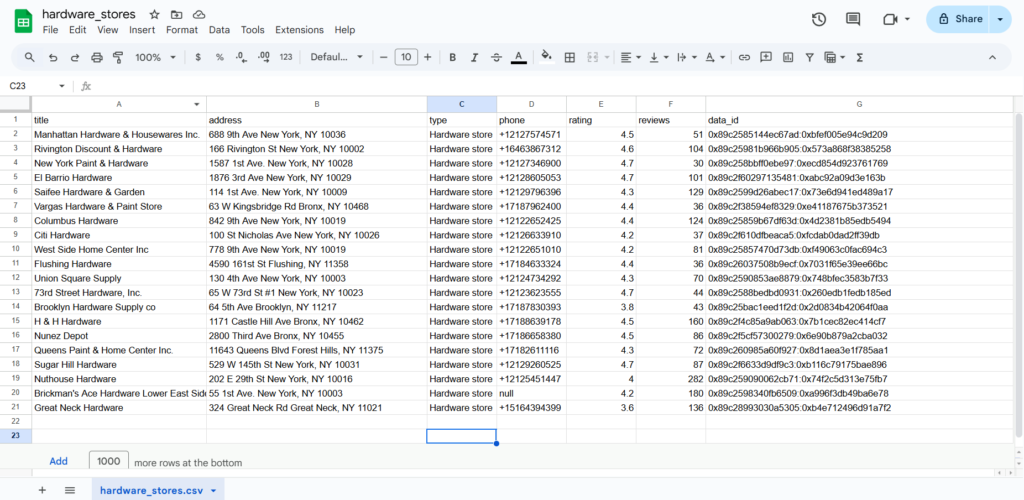

Once you run this code, a leads.csv file will be created inside the folder maps.

This file now contains all the phone numbers and the addresses of the hardware shops. Now, I can call them and convert them into clients.

If you are not a developer and want to learn how to pull this data into Google Sheets, I have built a guide on scraping websites using Google Sheets. Using this guide, you will understand how to pull data from any API into a Google Sheet.

Scraping Reviews from Google Maps

So, after getting the list of nearby hardware shops, what if you want to know what their customers are saying about them? This is one of the best ways to gauge whether a business is genuinely thriving.

For this task, we will use the Google Reviews API to find out when a customer reviewed a particular shop and what rating they gave.

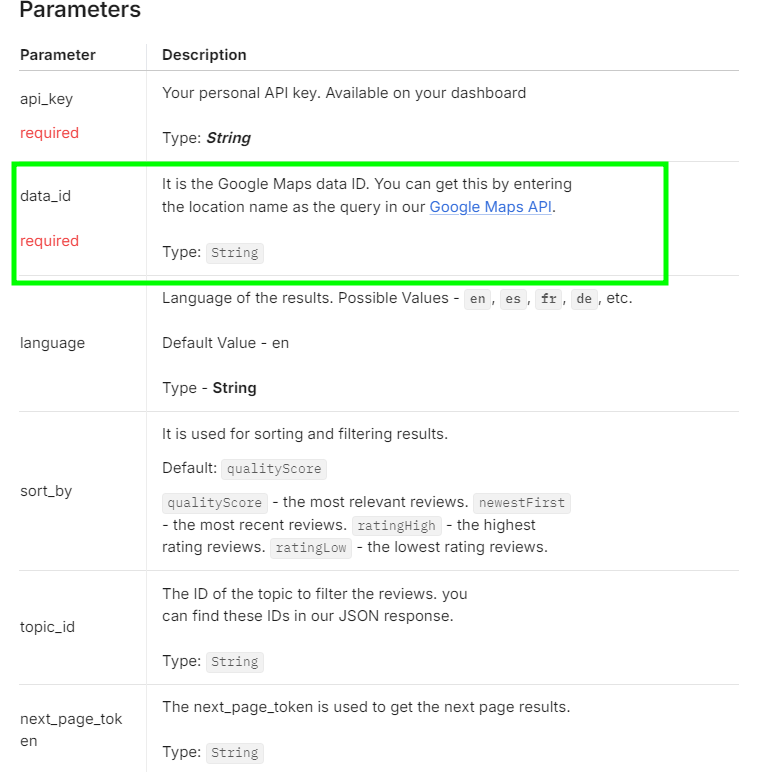

Extracting reviews with this API is straightforward. Before proceeding, be sure to refer to the API documentation.

While reading the docs, you will see that an argument named data_id is required. (see below⬇️)

This is a unique ID assigned to each place on Google Maps. Since we have already extracted the Data ID from our previous code while gathering listings, there is no need to manually search for the place on Google Maps to obtain its ID.
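Since the Data IDs already sit in leads.csv, the request parameters for the reviews endpoint can be prepared straight from that file. Here is a minimal sketch using only the standard library. The function name `review_params_from_csv` and the in-memory CSV are hypothetical; the column name `DataId` matches the one written by the script earlier in this article.

```python
import csv
import io


def review_params_from_csv(csv_file, api_key):
    """Yield one params dict per unique, non-empty DataId in a leads CSV."""
    seen = set()
    for row in csv.DictReader(csv_file):
        data_id = (row.get("DataId") or "").strip()
        if data_id and data_id not in seen:
            seen.add(data_id)
            yield {"api_key": api_key, "data_id": data_id}


# In-memory stand-in for leads.csv so the sketch runs without the real file.
fake_csv = io.StringIO(
    "Title,Address,DataId,PhoneNumber\n"
    "Shop A,1 Example St,0x1:0x2,+1 555-0100\n"
    "Shop A (duplicate),1 Example St,0x1:0x2,+1 555-0100\n"
    "Shop B,2 Example St,,\n"
)
params_list = list(review_params_from_csv(fake_csv, "your-api-key"))
print(params_list)
```

With the real file, you would open leads.csv instead of the in-memory string and pass each dictionary as params to requests.get for the reviews endpoint.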

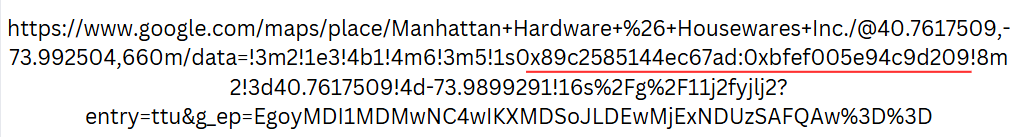

I am going to use this Data ID: 0x89c2585144ec67ad:0xbfef005e94c9d209 for a business named “Maps” located at 688 9th Ave, New York, NY 10036.

However, if you want to extract the Data ID manually, you can do so by searching for the business on Google Maps. After searching, you will find the Data ID in the URL, as shown here:

In this URL, you will see a string that starts with 0x89 and runs up to the next ‘!’. In the above URL, it is 0x89c2585144ec67ad:0xbfef005e94c9d209. Take everything before the ‘!’.

Marked in the image below ⬇️ is what I am referring to.
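If you need to do this for more than a handful of places, the same lookup can be sketched with a regular expression. The helper name `extract_data_id` and the example URL are made up for illustration, and the pattern assumes the Data ID always appears as two hexadecimal numbers joined by a colon, as in the ID above.

```python
import re

# Two hex numbers joined by a colon, e.g. 0x89c2585144ec67ad:0xbfef005e94c9d209
DATA_ID_PATTERN = re.compile(r"0x[0-9a-fA-F]+:0x[0-9a-fA-F]+")


def extract_data_id(maps_url):
    """Return the first Data ID found in a Google Maps URL, or None."""
    match = DATA_ID_PATTERN.search(maps_url)
    return match.group(0) if match else None


# Hypothetical URL containing the Data ID used in this article.
example_url = (
    "https://www.google.com/maps/place/Example/"
    "data=!4m6!3m5!1s0x89c2585144ec67ad:0xbfef005e94c9d209!8m2"
)
print(extract_data_id(example_url))  # 0x89c2585144ec67ad:0xbfef005e94c9d209
```

The match stops at the ‘!’ because that character is not a hexadecimal digit.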

Let’s code this in Python and extract the reviews.

import requests

api_key = "your-api-key"
url = "https://api.scrapingdog.com/google_maps/reviews"

params = {
    "api_key": api_key,
    "data_id": "0x89c2585144ec67ad:0xbfef005e94c9d209"
}

response = requests.get(url, params=params)

if response.status_code == 200:
    # Parse the JSON body and print the list of reviews
    data = response.json()
    print(data)
else:
    print(f"Request failed with status code: {response.status_code}")

Once you run this code, you will get this beautiful JSON response.

[
  {
    "link": "https://www.google.com/maps/reviews/data=!4m8!14m7!1m6!2m5!1sChdDSUhNMG9nS0VJQ0FnSURyLU9QSXlnRRAB!2m1!1s0x0:0xbfef005e94c9d209!3m1!1s2@1:CIHM0ogKEICAgIDr-OPIygE|CgwIhv3PtAYQsPaQxAM|?hl=en-US",
    "rating": 5,
    "date": "7 months ago",
    "source": "Google",
    "review_id": "ChdDSUhNMG9nS0VJQ0FnSURyLU9QSXlnRRAB",
    "user": {
      "name": "Tony Westbrook",
      "link": "https://www.google.com/maps/contrib/111872779354186950334?hl=en-US&ved=1t:31294&ictx=111",
      "contributor_id": 111872779354186960000,
      "thumbnail": "https://lh3.googleusercontent.com/a-/ALV-UjWfJlalEYAG74ndEEFN_o17j8S1H1toiSIe7HJtvky9yQoI1lHU0w=s40-c-rp-mo-br100",
      "local_guide": false,
      "reviews": 33,
      "photos": 28
    },
    "snippet": "I have been looking all over for a flyswatter. I walked right in and there was one and it was even blue my favorite color. They also had quite a selection. I also needed some fly ribbon. They had a nice selection. Great prices. Very helpful. Well stocked! Friendly.",
    "details": {},
    "likes": 0,
    "response": {
      "date": "7 months ago",
      "snippet": ""
    }
  },
  {
    "link": "https://www.google.com/maps/reviews/data=!4m8!14m7!1m6!2m5!1sChZDSUhNMG9nS0VJQ0FnSURRNlpiTWR3EAE!2m1!1s0x0:0xbfef005e94c9d209!3m1!1s2@1:CIHM0ogKEICAgIDQ6ZbMdw|CgsIttrSswUQgNjxFg|?hl=en-US",
    "rating": 3,
    "date": "9 years ago",
    "source": "Google",
    "review_id": "ChZDSUhNMG9nS0VJQ0FnSURRNlpiTWR3EAE",
    "user": {
      "name": "Ben Strothmann",
      "link": "https://www.google.com/maps/contrib/114870865461021130065?hl=en-US&ved=1t:31294&ictx=111",
      "contributor_id": 114870865461021130000,
      "thumbnail": "https://lh3.googleusercontent.com/a-/ALV-UjUCNRewMdLRFVRehId-kl6Sng0n-LACg75xzr2XmsfbougA6047=s40-c-rp-mo-br100",
      "local_guide": false,
      "reviews": 13,
      "photos": null
    },
    "details": {},
    "likes": 2,
    "response": {
      "date": "",
      "snippet": ""
    }
  },
  .....

As you can see, you got the rating, the review text, the name of the person who posted the review, etc. In this sample, the ratings lean positive for this business. Rather than judging manually, you can also run sentiment analysis on the review text to gauge the customers’ mood more accurately.
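Before reaching for a full sentiment model, a quick summary of the star ratings already tells you a lot. The sketch below is not real sentiment analysis; it only averages the `rating` field and groups ratings into coarse labels. The function names and the thresholds (4 and above is positive, 2 and below is negative) are assumptions chosen for illustration.

```python
from collections import Counter


def rating_bucket(rating):
    """Map a star rating to a coarse label (thresholds are an assumption)."""
    if rating >= 4:
        return "positive"
    if rating <= 2:
        return "negative"
    return "neutral"


def summarize_reviews(reviews):
    """Return the count, average rating, and label counts for a list of reviews."""
    ratings = [r["rating"] for r in reviews if isinstance(r.get("rating"), (int, float))]
    if not ratings:
        return {"count": 0, "average": None, "buckets": {}}
    return {
        "count": len(ratings),
        "average": sum(ratings) / len(ratings),
        "buckets": dict(Counter(rating_bucket(x) for x in ratings)),
    }


# Trimmed-down copies of the two reviews shown above (only the rating is kept).
sample_reviews = [{"rating": 5}, {"rating": 3}]
summary = summarize_reviews(sample_reviews)
print(summary)
```

In practice, you would pass the list returned by response.json() instead of the two-item sample.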

Conclusion

Using Python is a good way to start scraping Google Maps. However, if you want to scale this process, using an API is the best choice.

Scrapingdog offers a Google Maps API for building a hassle-free solution.

If you like this article, do share it on social media, and if you have any questions or suggestions do reach out to me on live chat here🙂

Frequently Asked Questions

Is it legal to scrape data from Google Maps?

Yes, it is legal to scrape data from Google Maps.

Different platforms have different stances on scraping. For example, we recently wrote an article on whether scraping LinkedIn is legal or not. As a general rule of thumb, though, if the data is publicly available, it is scrapable.

Additional Resources

- Building Make.com Automation to Generate Local Leads from Google Maps

- Web Scraping Google Search Results using Python

- Automating Competitor Sentiment Analysis For Local Businesses using Scrapingdog’s Google Maps & Reviews API

- How to Scrape Google Local Results using Scrapingdog’s API

- Best Google Maps Scraper APIs: Tested & Ranked

- Extracting Emails by Scraping Google

- Web Scraping Google Scholar using Python

- Web Scraping Google Shopping using Python

- Web Scraping Google News using Python

- Web Scraping Google Finance using Python

- Web Scraping Google Images using Python

- Analyzing Footfall Near British Museum by Scraping Popular Times Data from Google Maps