Web scraping is the automated extraction of data from websites so that it can be stored and used later for analysis or other end-use cases.

For a small dataset — say, ten data points — it’s perfectly manageable to copy and paste everything into a spreadsheet or database by hand. The real challenge arises when the volume of data becomes significantly larger.

Manual methods are not only tedious but also prone to errors, which is why businesses often turn to automated “web harvesting” techniques. These solutions streamline the process of gathering large quantities of online data and help maintain accuracy at scale.

In this article, we will explore how businesses can make use of web data extraction, the ethical considerations involved, and some of the most effective tools for getting started.

How Can Businesses Use Web Scraping?

Web scraping has a lot of potential when viewed from a broader perspective. Beyond collecting data once, it can help you track specific data points repeatedly, for example, monitoring prices over time.

Here are some of the use cases where businesses can benefit most from extracting web data.

Using Web Scraping for Machine Learning Applications

Machine learning models thrive on data, and there are few better sources of up-to-date information than the web. Businesses can harvest publicly available data to feed and train their machine learning algorithms. By using data from online reviews, competitors’ sites, social media, and more, businesses can train models that drive smarter decisions and automation.

Here are key areas where companies are using data extracted from the web to power their ML applications.

- Customer Sentiment Analysis

- Price Optimization with Dynamic Pricing

- Competitor Monitoring and Market Intelligence

- Predictive Analytics and Trend Forecasting

- Enabling Personalized Recommendation Systems
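For instance, for customer sentiment analysis, scraped reviews usually need some cleanup before they can train a model. The minimal Python sketch below assumes each review was scraped into a dict with hypothetical `text` and `rating` keys. It normalizes whitespace, drops duplicates, and derives a coarse label from the star rating (4 to 5 stars positive, 1 to 2 negative, 3 skipped as ambiguous). Those thresholds are an illustrative assumption, not a standard.

```python
import re


def build_sentiment_dataset(reviews):
    """Turn scraped reviews into (text, label) pairs for training.

    `reviews` is a list of dicts with hypothetical keys "text" and "rating"
    (1-5 stars). Ratings of 4-5 become "positive", 1-2 become "negative",
    and 3-star reviews are skipped as ambiguous.
    """
    seen = set()
    dataset = []
    for review in reviews:
        # Collapse runs of whitespace left over from HTML extraction.
        text = re.sub(r"\s+", " ", review.get("text", "")).strip()
        rating = review.get("rating")
        if not text or rating is None:
            continue  # skip incomplete records
        key = text.lower()
        if key in seen:
            continue  # skip duplicates (e.g. the same review scraped twice)
        seen.add(key)
        if rating >= 4:
            dataset.append((text, "positive"))
        elif rating <= 2:
            dataset.append((text, "negative"))
    return dataset


scraped = [
    {"text": "  Great   product,\nfast shipping ", "rating": 5},
    {"text": "Great product, fast shipping", "rating": 5},
    {"text": "It's okay.", "rating": 3},
    {"text": "Broke after two days.", "rating": 1},
]
print(build_sentiment_dataset(scraped))
```

The second review is dropped as a duplicate of the first once whitespace is normalized, and the 3-star review is skipped, leaving one positive and one negative example.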

How to Use Web Scraping for Lead Generation

Web scraping can be a powerful tool for businesses to generate leads. Instead of manually searching and copying details, companies can quickly gather large volumes of public data about potential customers.

This helps build up-to-date prospect databases fast, so sales teams spend less time researching and more time selling. Web scraping allows businesses to pull in contact details, company info, and social data from the web in minutes, enabling them to build robust lead lists with minimal effort.

Many organizations (from startups to Fortune 500 firms) already leverage these techniques to enhance their outreach and lead generation strategies.
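To make this concrete, here is a minimal Python sketch that pulls email addresses out of a page’s HTML with a regular expression. The pattern is a pragmatic assumption that covers common address formats rather than the full RFC 5322 grammar, and the sample HTML is made up; in practice you would run something like this only on pages where contact details are published publicly.

```python
import re

# A pragmatic pattern for common email formats; it does not cover every
# address that RFC 5322 technically allows.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def extract_emails(html):
    """Return unique, lowercased email addresses in order of first appearance."""
    # dict.fromkeys keeps insertion order while removing duplicates.
    return list(dict.fromkeys(m.lower() for m in EMAIL_RE.findall(html)))


page = """
<div class="contact">
  <p>Sales: <a href="mailto:sales@example.com">sales@example.com</a></p>
  <p>Support: Support@Example.com</p>
</div>
"""
print(extract_emails(page))
```

The same address appears twice in the sales link (once in the `mailto:` attribute, once as link text), so deduplication matters even on a single page.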

Using Web Scraping to Improve SEO Performance

If organic traffic is one of your main sources of leads or revenue, monitoring your search performance is essential. Web scraping can play a big role here by collecting detailed data directly from Google’s search engine results pages (SERPs).

You can extract information such as titles, meta descriptions, “people also ask” queries, and related questions — insights that help you identify what’s working and what could be improved in your SEO strategy. Additionally, scraping allows you to track keyword rankings for your domain as well as for competitors, giving you a clearer picture of where you stand and where opportunities exist to outrank rivals.

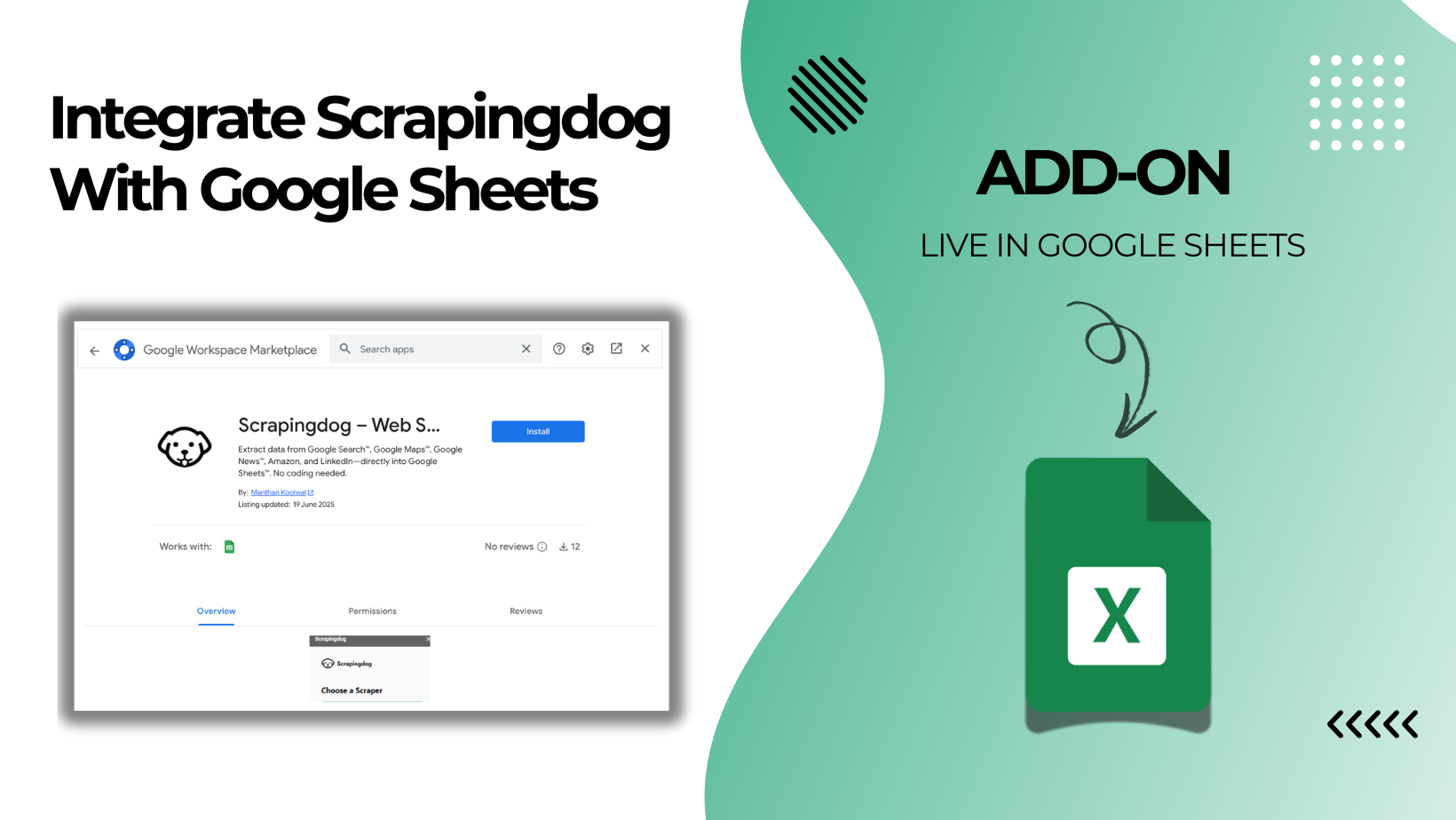
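As a small illustration of the kind of fields involved, the sketch below pulls the `<title>` and meta description out of a page’s HTML using only Python’s standard-library `html.parser`. It assumes you already have the HTML as a string (for example, a page that ranks for one of your keywords); parsing Google’s results pages themselves is not covered here.

```python
from html.parser import HTMLParser


class MetaExtractor(HTMLParser):
    """Collect the <title> text and the meta description from an HTML page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names for us.
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract_meta(html_text):
    parser = MetaExtractor()
    parser.feed(html_text)
    parser.close()
    return {"title": parser.title.strip(), "description": parser.description}


sample_html = """<html><head>
<title> Best Running Shoes 2025 </title>
<meta name="description" content="Compare top running shoes by price and fit.">
</head><body></body></html>"""
print(extract_meta(sample_html))
```

A page without a meta description returns `None` for that field, which is itself a useful SEO signal to flag.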

Many paid SEO tools rely on Google SERP APIs to gather these data points. However, if you want more control (and potentially lower costs), you can build your own system using an API that scrapes search results. That is beyond the scope of this blog, but we have written articles in which we built a rank tracker using Google Sheets and Scrapingdog’s Google Search API.
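At its core, rank tracking means finding where a domain appears in an ordered list of result URLs. The sketch below assumes you already have those URLs (for example, from a SERP API response) as a plain Python list; the `rank_of` name and the choice to count subdomains and ignore `www.` are illustrative assumptions, not part of any particular API. It needs Python 3.9+ for `str.removeprefix`.

```python
from urllib.parse import urlparse


def rank_of(domain, result_urls):
    """Return the 1-based position of the first result on `domain`, or None.

    Subdomains count as matches (blog.example.com matches example.com), and
    a leading "www." is ignored on both sides.
    """
    domain = domain.lower().removeprefix("www.")
    for position, url in enumerate(result_urls, start=1):
        # urlparse(...).hostname is already lowercased.
        host = (urlparse(url).hostname or "").removeprefix("www.")
        if host == domain or host.endswith("." + domain):
            return position
    return None


results = [
    "https://www.competitor.com/shoes",
    "https://blog.example.com/best-running-shoes",
    "https://example.com/shop",
]
print(rank_of("example.com", results))     # 2 (first match is the blog subdomain)
print(rank_of("competitor.com", results))  # 1
print(rank_of("missing.org", results))     # None
```

Running the same lookup for your domain and each competitor on every tracked keyword, once per day or week, gives you the ranking history described above.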

Read More: What is Search Engine Scraping?

If you are looking to generate leads from scraped data, our recent blog on using web scraping for lead generation can be a helpful resource.

Depending on the industry you are targeting, you can scrape sources such as LinkedIn, Google Maps, Yelp, and Yellow Pages to generate leads.

How to Use Web Scraping for Price Tracking and Monitoring

One important use case for web scraping is tracking competitor prices. That way, businesses can optimize their pricing strategy and maintain their profit margins.

To do this, they scrape competitors’ prices at regular intervals and compare each new snapshot with the previous one to spot any changes.
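The compare-each-snapshot step can be sketched as a diff between two dictionaries that map a product identifier to its price. The SKUs and price strings below are made up, and `parse_price` assumes US-style formatting (comma thousands separator, dot decimal point); in a real tracker each snapshot would come from one scheduled scraping run.

```python
import re
from decimal import Decimal


def parse_price(text):
    """Convert a scraped price string such as "$1,299.00" to a Decimal.

    Assumes US-style formatting: commas separate thousands, a dot marks decimals.
    """
    return Decimal(re.sub(r"[^\d.]", "", text))


def diff_prices(previous, current):
    """Compare two price snapshots of the form {product_id: Decimal price}.

    Returns products whose price changed, plus products that appeared or
    disappeared between the two scraping runs.
    """
    changed = {
        pid: (previous[pid], price)
        for pid, price in current.items()
        if pid in previous and previous[pid] != price
    }
    added = sorted(current.keys() - previous.keys())
    removed = sorted(previous.keys() - current.keys())
    return {"changed": changed, "added": added, "removed": removed}


monday = {pid: parse_price(p) for pid, p in
          {"sku-1": "$19.99", "sku-2": "$5.49", "sku-3": "$12.00"}.items()}
tuesday = {pid: parse_price(p) for pid, p in
           {"sku-1": "$17.99", "sku-2": "$5.49", "sku-4": "$8.25"}.items()}

report = diff_prices(monday, tuesday)
for pid, (old, new) in report["changed"].items():
    print(f"{pid}: {old} -> {new}")     # sku-1: 19.99 -> 17.99
print("new listings:", report["added"])  # ['sku-4']
print("gone:", report["removed"])        # ['sku-3']
```

Prices are kept as `Decimal` rather than `float` so that equal prices always compare as equal and no rounding noise shows up as a false "change".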

E-commerce brands in particular need to monitor their competitors across marketplaces such as Amazon and eBay.

There are many more use cases for web scraping; these few are just meant to give you a sense of what is possible.

Let’s now look at how businesses can actually extract data through scraping.

Best Web Scraping Tools and Methods to Scrape the Web

Custom Scripts Using Programming Languages

If you are somewhat tech-savvy, you can build your own web scrapers in the programming language of your choice. We have created numerous tutorials explaining how to build your own web scrapers.
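To give a flavor of what such a script looks like, here is a minimal Python sketch that uses only the standard library: `urllib.request` to download a page and `html.parser` to collect every link on it, resolved to absolute URLs. The User-Agent string `my-scraper/0.1` is a placeholder, and a production scraper would also need error handling and rate limiting.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, resolved against a base URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:  # skip anchors without an href
                self.links.append(urljoin(self.base_url, href))


def fetch_html(url, timeout=10):
    """Download a page and decode it using the charset the server reports."""
    # Placeholder User-Agent; some sites reject requests that lack one.
    req = Request(url, headers={"User-Agent": "my-scraper/0.1"})
    with urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def extract_links(html_text, base_url):
    parser = LinkCollector(base_url)
    parser.feed(html_text)
    parser.close()
    return parser.links


# Offline demo on a static snippet; relative links are made absolute.
sample = '<a href="/about">About</a> <a href="https://other.org/x">X</a> <a>no href</a>'
print(extract_links(sample, "https://example.com/blog/"))
```

For a live page you would call `extract_links(fetch_html(url), url)`; keeping fetching and parsing in separate functions makes the parsing logic easy to test without network access.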

Here are a few of them:

Using No-Code Tools

If you are not very tech-savvy, there are ready-made tools you can use. These tools handle basic web scraping for you and deliver the output in spreadsheet format.

Both of these methods work well for small datasets. However, they are hard to scale; if you need to scale up the process, you will need to use an API instead.

The no-code tools described above typically perform screen scraping, which is often referred to as web scraping but isn’t quite the same thing.

Screen scraping extracts data directly from the visual layout (DOM) of web pages, essentially replicating manual copying at scale. This method quickly runs into scalability issues: websites frequently change their visual structure, which can break your scraper and require constant manual adjustments.

Moreover, screen scraping is slow, as it mimics human browsing behavior.

Why APIs Are the Best Way to Scale Web Scraping

Using an API built specifically for web scraping can be the best approach if you want to scale the data extraction process.

With an API, you can:

- Extract large volumes of data quickly and efficiently.

- Avoid frequent maintenance since the data format remains consistent.

- Scale your scraping tasks effortlessly without constant adjustments.
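One reason API-based scraping scales well is that each request is independent, so many can run in parallel. The sketch below fans a list of URLs out over a thread pool with `concurrent.futures`. Here `fetch` is a stand-in for whatever function calls your scraping API (hypothetical in this example), and `max_workers` should stay within any concurrency limit your provider sets.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def scrape_all(urls, fetch, max_workers=5):
    """Call `fetch(url)` for every URL concurrently.

    Returns (results, errors): dicts mapping each URL to its fetched content
    or to the exception it raised, so one failure doesn't stop the batch.
    """
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # record the failure and keep going
                errors[url] = exc
    return results, errors


# Demo with a fake fetch function standing in for a real API call.
def fake_fetch(url):
    if "bad" in url:
        raise ValueError("simulated failure")
    return f"<html>{url}</html>"


ok, failed = scrape_all(["https://a.test", "https://bad.test", "https://c.test"], fake_fetch)
print(sorted(ok))    # ['https://a.test', 'https://c.test']
print(list(failed))  # ['https://bad.test']
```

Threads are a reasonable fit here because the work is dominated by waiting on network responses rather than by CPU.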

Scrapingdog offers a web scraping API that you can use to extract data.
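As a rough sketch of what a call to such an API can look like, the Python below builds a GET request URL that carries an API key and the target page as query parameters. The endpoint `https://api.scrapingdog.com/scrape` and the `api_key`/`url` parameter names are assumptions for illustration; confirm them, and any extra options, in Scrapingdog’s documentation.

```python
from urllib.parse import urlencode

# ASSUMPTION: endpoint and parameter names are illustrative; check them
# against the API documentation before use.
API_ENDPOINT = "https://api.scrapingdog.com/scrape"


def build_scrape_url(api_key, target_url, **options):
    """Build a GET request URL asking the scraping API to fetch `target_url`.

    Extra keyword arguments are passed through as additional query parameters.
    """
    params = {"api_key": api_key, "url": target_url, **options}
    # urlencode percent-encodes the target URL, so its own "?" and "&"
    # are not mistaken for parameters of the API request.
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_scrape_url("YOUR_API_KEY", "https://example.com/products?page=2")
print(request_url)
```

You would then send a GET request to `request_url` with any HTTP client. Encoding the target URL is the important detail: without it, the `page=2` parameter would be read as a parameter of the API call rather than of the page being scraped.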

Using our data extraction API, you can extract the HTML of any page. Here’s a small video tutorial that explains how Scrapingdog can help you with scraping ⬇️

We also provide dedicated APIs for many platforms that return the output data in JSON format. Here are some of them:

And many more! You can explore all of our dedicated APIs on our website.

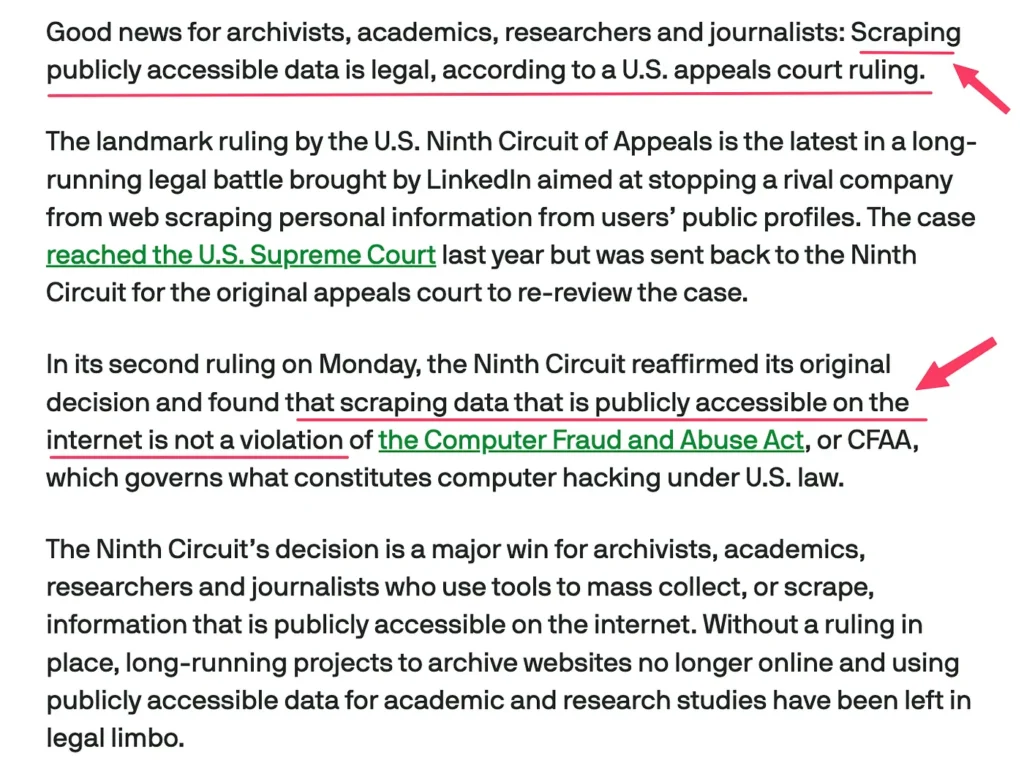
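Because dedicated APIs return JSON, turning a response into a spreadsheet-friendly file takes only a few lines. The field names in this sketch (`title`, `price`, `link`) and the top-level array shape are hypothetical; check the actual response format of the API you use and adjust the column list accordingly.

```python
import csv
import io
import json


def json_to_csv(payload, fields):
    """Convert a JSON array of objects into CSV text with the given columns.

    Missing keys become empty cells; keys not listed in `fields` are ignored.
    """
    records = json.loads(payload)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields, extrasaction="ignore")
    writer.writeheader()
    for record in records:
        writer.writerow(record)
    return buffer.getvalue()


sample_response = """[
  {"title": "Trail Shoe", "price": "89.99", "link": "https://example.com/a", "rating": 4.5},
  {"title": "Road Shoe", "price": "74.50", "link": "https://example.com/b"}
]"""
print(json_to_csv(sample_response, ["title", "price", "link"]))
```

The `rating` field is dropped because it is not in the column list; to save the result, write the returned string to a file opened with `newline=""` so the CSV line endings are preserved as written.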

Is Web Scraping Legal in 2025? Here’s What You Should Know

Web scraping is legal as long as you do it ethically and extract only publicly available data.

So, if the data you are extracting is not behind an authentication wall, you are not doing anything wrong.

Read More: Is Scraping LinkedIn Legal?

Conclusion

Web scraping can be a powerful asset for businesses when used wisely. As you begin leveraging web scraping, ensure you clearly define your goals and have the right resources in place to manage the data effectively.

Need help getting started? Just reach out to us on chat and say, “Hi!” We’d love to assist you with your data extraction needs.

If using an API isn’t your preference, no worries! We can handle the scraping process and deliver the data directly to you through webhooks or any other method you prefer.

Happy Scraping 🎉