The internet is full of data. Web scraping is the process of extracting data from websites. It can be used for a variety of purposes, including market research.

There are several ways to scrape data from websites. The most common is to use a web scraping tool, which is designed to extract data from a single page or from many pages at once.

Web scraping can be time-consuming. However, having the data at hand is a valuable asset for any market researcher.

This guide will walk you through the basics of web scraping for market research: choosing the right data, scraping it effectively, and using it to your advantage. With this comprehensive guide, you will be able to quickly gather the data you need to make informed decisions about your business.

The Benefits of Web Scraping for Market Research

Web scraping has become a popular technique for extracting data from websites. It can be used to collect social media feeds for marketing research, build a database of leads, and much more.

Web scraping can be done manually, but it is usually more efficient to use a web scraping tool. Many web scraping tools are available, but not all of them are created equal.

The best web scraping tools will have the following features:

Easy to Use: A user-friendly interface makes a tool quicker to learn and easier to work with, which gives it an edge over its competitors.

Fast: A good tool has short response times and extracts data quickly, even across many pages.

Accurate: Accuracy is another important aspect of a scraping tool. A good tool extracts exactly the data you asked for, without errors or omissions.

Reliable: The best web scraping tools extract data consistently and recover gracefully from problems such as a website being temporarily unavailable.

These are just a few of the features you should look for in a web scraping tool. There are many web scraping tools available, so take your time and find the one that best fits your needs.

The Best Practices for Web Scraping

Always check the website’s terms and conditions before scraping.

When scraping, there are certain things we need to be aware of. This includes making sure that the guidelines of the site you are scraping are strictly followed.

You need to treat the website owners with due respect.

Make sure that you thoroughly read the Terms and Conditions before you start scraping the site. Familiarize yourself with the legal requirements that you need to abide by.

Do not use scraped data for commercial activity unless the site's terms permit it.

Also, make sure that you are not spamming the site with too many requests.
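A simple way to avoid flooding a site is to enforce a minimum delay between your requests. The sketch below shows one way to do that in Python, under stated assumptions: the `RateLimiter` class, the `polite_get` helper, the one-second default, and the `User-Agent` string are illustrative choices, not values from any particular tool or site. Pick a delay that fits the site's terms and any crawl delay it publishes.

```python
import time
import urllib.request


class RateLimiter:
    """Enforce a minimum interval (in seconds) between consecutive requests."""

    def __init__(self, min_interval: float = 1.0) -> None:
        self.min_interval = min_interval
        self._last_request = None  # monotonic timestamp of the previous request

    def wait(self) -> None:
        """Sleep just long enough to respect min_interval, then record the time."""
        now = time.monotonic()
        if self._last_request is not None:
            remaining = self.min_interval - (now - self._last_request)
            if remaining > 0:
                time.sleep(remaining)
        self._last_request = time.monotonic()


def polite_get(url: str, limiter: RateLimiter) -> bytes:
    """Hypothetical helper: fetch a URL only after the limiter allows it."""
    limiter.wait()
    # Placeholder User-Agent; identify your bot honestly in real use.
    req = urllib.request.Request(url, headers={"User-Agent": "market-research-bot/0.1"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.read()
```

Because the limiter measures time with `time.monotonic()`, changes to the system clock do not shorten or lengthen the delay.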

Consider using a web scraping tool or service instead of writing your own code.

Building your own scraper is a real commitment: you become responsible for keeping it working as target sites change. That is why it often makes sense to use a proper web scraping tool instead of writing your own code. Here are some of the benefits of using a web scraping tool:

- Speed

- More thorough data collection at scale

- Cost-effectiveness

- Flexibility and a systematic approach

- Automatic, structured data collection

- Reliable and robust performance

- Relatively low maintenance costs

You can use Scrapingdog for all your marketing-related web scraping needs.

Be cautious when scraping sensitive or private data.

When scraping a site, you collect whatever data it exposes, and ethical dilemmas can come up along the way. For example, you may come across data that is sensitive or personal.

The ethical thing to do is to handle this kind of data safely and responsibly, and that responsibility lies with whoever runs the scraper.
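One practical way to handle personal data responsibly is to redact it before you store scraped text. The sketch below masks email addresses and phone-number-like strings with Python's `re` module. The patterns and the `redact_pii` name are simplified assumptions: they will miss some formats and may flag things like dates as phone numbers, so treat this as a starting point rather than a compliance tool.

```python
import re

# Simplified patterns (assumptions): real-world emails and phone numbers vary widely.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)  # emails first, so their digits are gone
    text = PHONE_RE.sub("[PHONE]", text)
    return text


sample = "Contact Jane at jane.doe@example.com or +1 (555) 123-4567."
print(redact_pii(sample))
```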

Review your code regularly to ensure that it is scraping data correctly.

Scraping depends heavily on a site's user interface and structure. You may already be wondering: what happens when the target website goes through a redesign?

A common result is that the scraper breaks.

This is the main reason why maintaining scrapers is much more difficult than writing them.

There are tools available that will let you know if your targeted website has gone through some adjustments.
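If you prefer to build a basic check yourself, one approach is to confirm, before trusting a scrape, that the page still contains the markup your scraper depends on. This sketch uses Python's built-in `html.parser` to collect CSS class names and report any expected ones that have disappeared. The class names `product-title` and `product-price` and both sample pages are hypothetical; in practice they would come from the site you scrape.

```python
from html.parser import HTMLParser


class ClassCollector(HTMLParser):
    """Collect every CSS class name that appears in the document."""

    def __init__(self):
        super().__init__()
        self.classes = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "class" and value:
                self.classes.update(value.split())


def missing_classes(html, expected):
    """Return the expected class names that no longer appear in the page."""
    collector = ClassCollector()
    collector.feed(html)
    collector.close()
    return expected - collector.classes


# Hypothetical class names the scraper relies on.
EXPECTED = {"product-title", "product-price"}

old_page = '<div class="product-title">Widget</div><span class="product-price">$9.99</span>'
new_page = '<div class="item-name">Widget</div><span class="product-price">$9.99</span>'

print(missing_classes(old_page, EXPECTED))  # set()
print(missing_classes(new_page, EXPECTED))  # {'product-title'}
```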

Keep an eye on your web scraping activities to make sure they are not impacting the website’s performance.

Finally, make sure that your scraping activities are not hurting the site's performance. The goal is to collect data, not to burden the site.

Your scraper should never slow the site down or affect it in any way that normal visitors would not.

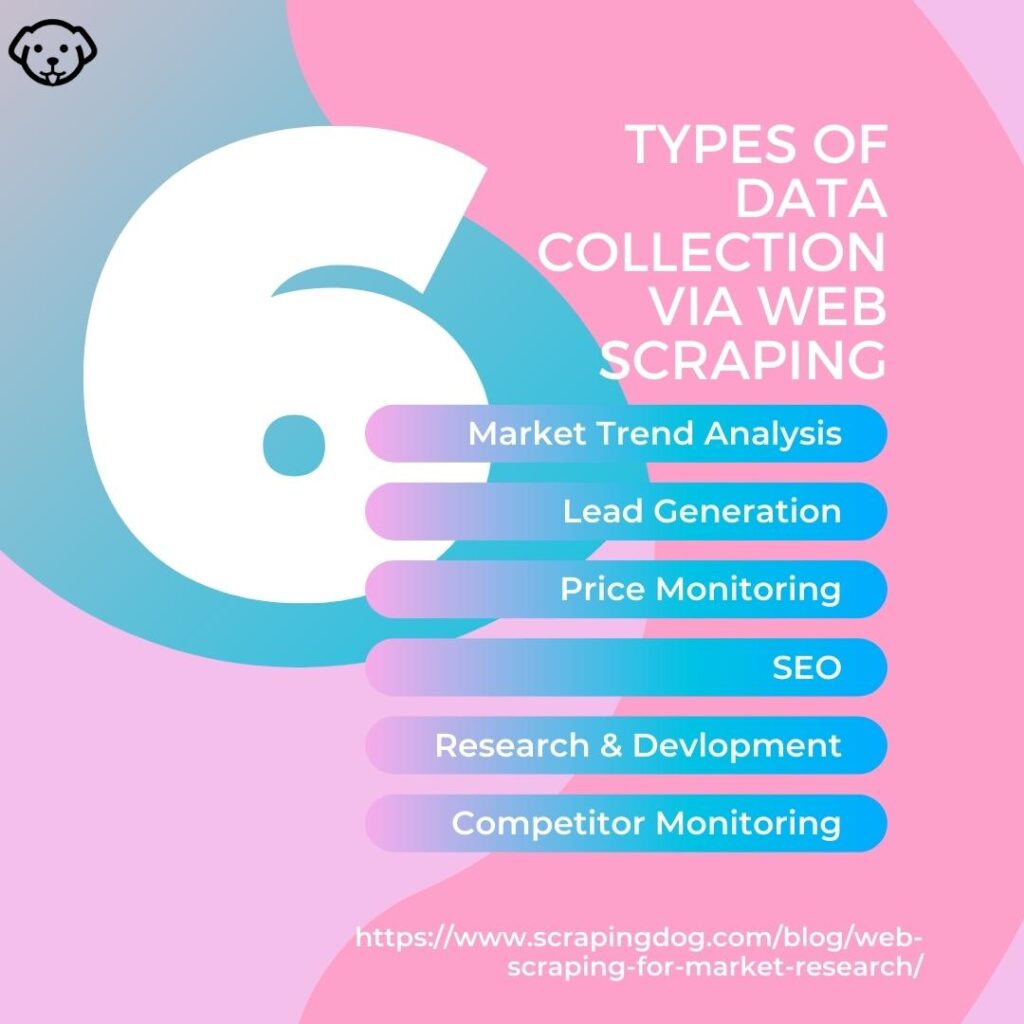

The Different Types of Data That Can Be Collected Through Web Scraping

Market trend analysis

Superior-quality data increases your chances of success. Proper web scraping is what enables analytics and research firms to conduct sound market trend analysis, and combining web scraping with data mining is an effective way to do it.

Good web scraping tools let you monitor market and industry trends by crawling sources in near real time.
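At its simplest, trend analysis on scraped data means smoothing a noisy series so its direction becomes visible. The sketch below computes a simple moving average over daily counts, for example the number of scraped posts mentioning a product each day. The counts are made up for illustration, and the three-day window is an arbitrary assumption.

```python
def moving_average(values, window):
    """Return the simple moving average of `values` over a sliding window."""
    if window <= 0:
        raise ValueError("window must be positive")
    averages = []
    running = 0.0
    for i, value in enumerate(values):
        running += value
        if i >= window:
            running -= values[i - window]  # drop the value that left the window
        if i >= window - 1:
            averages.append(running / window)
    return averages


# Illustrative daily mention counts (not real data).
daily_mentions = [12, 15, 11, 18, 22, 25, 30]
print(moving_average(daily_mentions, window=3))
```

A rising moving average suggests growing interest. A larger window smooths out more noise but reacts more slowly to real changes.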

Lead Generation

For any business looking to expand its offerings or enter new markets, data gathered through web scraping can be invaluable. Lead generation with web scraping gives you access to data and insights that your competitors may not have.

This can give you a tremendous advantage over your competitors.

With the right kinds of web data, you will also be in a much better position to understand your leads. On top of that, you will gain a better understanding of competitive pricing, distribution networks, product placement, influencers, and customer sentiment. Whether you’re growing a large enterprise or exploring online business ideas, this data can be a game-changer.

Price monitoring

Scraped price data can act as both a catalyst and a metrics engine for managing profitability. With the right scraping methods, you can gauge the impact your pricing decisions will have on your business.

You can then calculate how profitable you are at specific price points and optimize your pricing strategy accordingly. This is much easier with a dedicated price monitoring solution.
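To make the price-point idea concrete, the sketch below parses scraped price strings, finds the lowest competitor price, and computes your gross margin at a few candidate prices around it. The listings, the unit cost, and the helper names are hypothetical. `Decimal` is used instead of `float` so currency arithmetic does not pick up binary rounding errors.

```python
import re
from decimal import Decimal

# Matches numbers like 22.50, 1,299.99 or 120 inside a scraped price string.
PRICE_RE = re.compile(r"\d+(?:,\d{3})*(?:\.\d+)?")


def parse_price(text):
    """Extract the first numeric price from a string such as '$1,299.99'."""
    match = PRICE_RE.search(text)
    if match is None:
        raise ValueError(f"no price found in {text!r}")
    return Decimal(match.group().replace(",", ""))


def margin_at(price, unit_cost):
    """Gross margin as a fraction of the selling price."""
    return (price - unit_cost) / price


competitor_listings = ["$24.99", "USD 22.50", "$26.00"]  # hypothetical scraped strings
unit_cost = Decimal("15.00")  # assumed cost per unit

lowest = min(parse_price(s) for s in competitor_listings)
for candidate in (lowest - Decimal("1.00"), lowest, lowest + Decimal("1.00")):
    print(candidate, round(margin_at(candidate, unit_cost), 3))
```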

Search Engine Optimization

No SEO expert will ever tell you that they follow a fixed and straightforward method to do their job. Search engines are constantly updating their algorithms, and this presents new challenges for companies looking to rank high.

This is why data is so important to sustain proper growth.

To succeed, many SEO companies combine multiple strategies, including quality content, high-intent keywords, good link building, high-performing websites, and more. Web scraping lets you gather the data behind these strategies in much larger volumes than you could manually.

Most businesses will engage in these practices when they are keen on understanding what is missing in their strategies and what is working for their competitors.
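For example, a competitor audit might start by pulling each page's title, meta description, and `<h1>` headings. The sketch below does that with Python's standard-library `html.parser`. The `SEOExtractor` class and the HTML are made-up illustrations; a real audit would fetch pages politely and cover more signals.

```python
from html.parser import HTMLParser


class SEOExtractor(HTMLParser):
    """Collect the <title>, meta description and <h1> headings of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self.h1 = []
        self._current = None  # tag whose text is currently being captured

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""
        elif tag in ("title", "h1"):
            self._current = tag
            if tag == "h1":
                self.h1.append("")

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        # Text inside nested tags (e.g. <em> within <h1>) is still captured.
        if self._current == "title":
            self.title += data
        elif self._current == "h1":
            self.h1[-1] += data


page = """<html><head><title>Acme Widgets</title>
<meta name="description" content="Affordable widgets for every need.">
</head><body><h1>Best <em>Widgets</em> of 2024</h1></body></html>"""

seo = SEOExtractor()
seo.feed(page)
seo.close()
print(seo.title, "|", seo.description, "|", seo.h1)
```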

Research & Development

When you have a large amount of scraped data at your disposal, you will be in a much better position to understand the market, your competitors, your customers, and more. All of this data can also be put to work in research and development.

A lot of businesses simply do not pay enough attention to this part of their business strategy and they fail to realize the importance of proper research and development.

Competitor social media research is another important source of data to analyze. It also helps to know which tools your competitors use, such as sales tools, CMS tools, and video editing software.

Staying ahead of the game and being updated with the current trends in the market is vital for any business to survive and thrive.

Whether you are developing a SaaS product or a mobile app, research is the first step toward a solid market analysis.


Competitor monitoring

Web scraping can give you valuable insights backed by current, accurate, and complete information, which is essential for outperforming your competition. It helps firms conduct market research by collecting important data about their competitors, and it lets you monitor your competitive landscape on an ongoing basis.
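A basic building block of competitor monitoring is comparing two snapshots of the same pages scraped at different times. The sketch below diffs two hypothetical `{product: price}` dictionaries and reports new products, removed products, and price changes. The product names, prices, and the `diff_snapshots` name are invented for illustration.

```python
def diff_snapshots(old, new):
    """Compare two {product: price} snapshots scraped at different times."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    changed = {
        name: (old[name], new[name])
        for name in sorted(old.keys() & new.keys())
        if old[name] != new[name]
    }
    return {"added": added, "removed": removed, "changed": changed}


monday = {"Widget A": 19.99, "Widget B": 24.99, "Widget C": 9.99}
friday = {"Widget A": 17.99, "Widget B": 24.99, "Widget D": 12.49}
print(diff_snapshots(monday, friday))
```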

The Tools and Techniques Used for Web Scraping

Many web scraping tools and techniques revolve around an automated web browser, such as Selenium WebDriver. The driver can be controlled programmatically to visit web pages and interact with them much as a real user would, which lets it extract data that would otherwise be difficult or impossible to get, such as content rendered by JavaScript.

Other tools and techniques include web crawlers: programs that automatically traverse the web, much like a search engine does.

These crawlers can be configured to scrape specific data types from web pages, such as contact information or product pricing.

What Are Web Scraping Tools?

Selenium

Essentially, Selenium is a software testing framework for the web that automates browsers. Several tools fall under the Selenium umbrella, all of which support test automation: Selenium IDE, Selenium Grid, Selenium Remote Control (RC), and Selenium WebDriver, which was introduced with Selenium 2.0.

Familiarizing yourself with the many types of tools Selenium provides will help you approach different types of automation problems.

Selenium Grid

Selenium Grid is used to run Selenium scripts in parallel. For example, if one machine can connect to multiple other machines running different operating systems, you can run your test cases on all of them in parallel instead of one after another. This can drastically reduce the total run time.

Selenium IDE

Selenium IDE is a record-and-playback tool that works mainly in Firefox and Chrome. Its reporting and logging are limited, and it is not suited to large test suites: if you have around 5,000 test cases, the IDE will not be practical.

Selenium RC(Remote Control)

Selenium RC lets you write dynamic scripts that work across multiple browsers. Note that you need to write those scripts in a supported programming language such as Java or C#. Selenium RC has since been deprecated in favor of WebDriver.

Selenium WebDrivers

WebDriver provides a programming interface for controlling a browser directly, which makes its features straightforward to explore and use.

WebDriver is not tied to any particular framework, so it integrates easily with testing frameworks such as TestNG and JUnit.

Web crawlers

These crawlers, or bots, download and index content from across the internet. Their main purpose is to learn what each web page is about, so that the information can be retrieved whenever it is needed.

These bots are most often operated by search engines. By applying search algorithms to the data the crawlers collect, search engines can answer search queries and provide relevant links to users.
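The core of a crawler is a queue of URLs to visit and a set of URLs already seen. The sketch below is a minimal breadth-first crawler restricted to one domain, written with Python's standard library. It is an illustration under assumptions: the `crawl` and `LinkExtractor` names are our own, and the `fetch` callable is injected (here a dictionary of fake pages under the reserved example.com domain) so the example runs offline. A real crawler would also need to respect robots.txt, rate limits, and network errors.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl of a single domain.

    `fetch` takes a URL and returns HTML, or None if the page is unavailable.
    """
    domain = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments before deduplicating.
            absolute = urljoin(url, href).split("#")[0]
            if urlparse(absolute).netloc == domain and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


site = {
    "https://example.com/": '<a href="/a">A</a> <a href="https://other.org/">X</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/">Home</a>',
    "https://example.com/b": '<a href="/a#top">A again</a>',
}
print(crawl("https://example.com/", site.get))
```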

Regular expressions

In Python, regular expressions are provided by the built-in re module. Regular expressions let you match specific text patterns and extract exactly the data you want from a page, far more efficiently and easily than doing it by hand.
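For example, the sketch below uses named groups to pull product names and prices out of scraped text. The `LISTING_RE` pattern and the sample text are hypothetical and assume listings written as "Name - $price"; real pages need patterns tailored to their actual format.

```python
import re

# Hypothetical pattern for listings such as "Widget A - $19.99".
LISTING_RE = re.compile(
    r"(?P<name>[A-Za-z][\w ]*?)\s*-\s*\$(?P<price>\d+(?:\.\d{2})?)"
)

text = "Today's deals: Widget A - $19.99; Gadget B - $5.00; Gizmo - $120"

for match in LISTING_RE.finditer(text):
    # Each match exposes the named groups captured by the pattern.
    print(match.group("name"), match.group("price"))
```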

Web Scraping Theory and Techniques

Method 1: Data extraction

Web scraping is sometimes also known as data extraction. It is used for price monitoring, pricing intelligence, news monitoring, lead generation, and market research, among many other uses.

Method 2: Data transformation

We live in a world driven by data. The amount of data that most organizations and businesses regularly handle can best be described as “big data.” The problem is that much of the data you can acquire is unstructured and not in a very usable form, which makes it difficult to put to good use.

This is exactly where data transformation comes in. In this process, data sets with different structures are converted into a common structure, so that two or more data sets can be combined and analyzed further.
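As an illustration, suppose two sites publish the same kind of product data with different field names, price formats, and date formats. The sketch below maps both into one common schema so the records can be combined and sorted. Both source formats and the `from_source_a` / `from_source_b` functions are hypothetical.

```python
from datetime import datetime


def from_source_a(record):
    """Source A (hypothetical): {'product': str, 'price_usd': '19.99', 'date': '2024-03-01'}"""
    return {
        "name": record["product"].strip(),
        "price": float(record["price_usd"]),
        "date": datetime.strptime(record["date"], "%Y-%m-%d").date(),
    }


def from_source_b(record):
    """Source B (hypothetical): {'title': str, 'cost': '$21.50', 'scraped': '03/02/2024'}"""
    return {
        "name": record["title"].strip(),
        "price": float(record["cost"].lstrip("$").replace(",", "")),
        "date": datetime.strptime(record["scraped"], "%m/%d/%Y").date(),
    }


source_a = [{"product": "Widget A ", "price_usd": "19.99", "date": "2024-03-01"}]
source_b = [{"title": "Widget A", "cost": "$21.50", "scraped": "03/02/2024"}]

# Both sources now share the keys name, price and date, so they can be merged.
combined = [from_source_a(r) for r in source_a] + [from_source_b(r) for r in source_b]
combined.sort(key=lambda row: row["date"])
for row in combined:
    print(row)
```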

Method 3: Data mining

Data mining is a lot like mining for actual gold. To find gold, you have to dig through a lot of rock to get to the treasure; in data mining, you sort through large data sets to find the particular information you want. It is an important part of data science in general and of the analytics process in particular.
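A very small example of the idea is counting which terms appear most often across scraped customer reviews. The sketch below uses `collections.Counter`. The reviews, the `top_terms` name, and the tiny stopword list are made up; real text mining would use proper tokenization and a fuller stopword list.

```python
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption, not a standard list).
STOPWORDS = {"the", "a", "and", "is", "it", "to", "of", "but", "too", "for", "was"}


def top_terms(documents, n=3):
    """Count the most frequent non-stopword terms across scraped documents."""
    counts = Counter()
    for doc in documents:
        words = re.findall(r"[a-z']+", doc.lower())
        counts.update(w for w in words if w not in STOPWORDS)
    return counts.most_common(n)


reviews = [
    "Battery life is great, but the price is too high.",
    "Great screen and great battery.",
    "The price was fair for the battery life.",
]
print(top_terms(reviews))
```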

The Ethical Considerations of Web Scraping

This should not come as a surprise, but when you are designing a program that downloads data from websites, you should ask the site owner whether you are allowed to do so.

Ideally, get that permission in writing. Some sites do allow others to collect their data, and in these cases they often provide a web-based API. This makes collecting the data much easier than retrieving it from HTML pages.

Just to give you an example, consider the case of PaperBackSwap.com. This site allows users to exchange books online. They have a type of search system that will give you the ability to search for books using titles, names of authors, or even an ISBN.

In this case, you could write a web scraper just to check whether the book you are looking for is available. Note, however, that you would have to parse the HTML pages returned by the site to pinpoint what you are looking for.

One of the biggest advantages of using an API is that it is an officially sanctioned way of gathering data. Beyond the legal clarity that comes with that, an API also lets website owners know who is gathering their information.

If for some reason you cannot use an API and cannot get explicit permission from the site owner, check the site's robots.txt file to see which parts of the site crawlers are allowed to access. Also check the site's terms and conditions to make sure they do not explicitly prohibit web scraping.
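Python's standard library can read robots.txt rules for you through `urllib.robotparser`. The sketch below parses a hypothetical robots.txt from a string so it runs offline; against a live site you would instead call `set_url()` with the site's robots.txt URL and then `read()`. The user-agent name and URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, parsed from a string for an offline example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

agent = "market-research-bot"  # placeholder user-agent name
for url in ("https://example.com/products/widget", "https://example.com/checkout/cart"):
    print(url, rp.can_fetch(agent, url))
print("crawl delay:", rp.crawl_delay(agent))
```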

The Future of Web Scraping for Market Research

Going forward, marketing is bound to become even more competitive. The future of marketing, if not its present, will be driven by data. To arrive at a marketing strategy that gets the results they want, businesses will need access to data that accurately portrays the market and industry they operate in.

This is why you can see web scraping being an integral part of marketing as we go forward.

The analysis of data that businesses collect from sources like media sites, web traffic, and social media will be closely linked to their business strategies. Suppose, for the sake of argument, that you have developed a new medical product. To market it, traditional marketing would rely on advertising to generate leads. EMR software can also benefit from data-driven strategies.

Web scraping can accelerate this lead generation process by collecting relevant information about doctors from many different sources.

You can then organize this data to match your particular marketing needs. In the same way, you can use web scraping for both market research and SEO.

Frequently Asked Questions

What is web scraping?

Web scraping is the process of extracting data from web pages. This data can then be used to analyze and plan your next marketing campaign.

Is web scraping legal?

Scraping publicly available data is generally legal. However, scraping personal profiles or any confidential data can be illegal.

What is web scraping in marketing?

Web scraping in marketing is the act of extracting useful, publicly available data. This data is then used to understand market behavior and trends.

Final Word

Web scraping can be a powerful tool for market research. By automating the process of extracting data from websites, businesses can save time and resources while gathering valuable insights into their domain.

When used correctly, web scraping can provide valuable data that can help businesses make better decisions, gain a competitive edge, and improve their bottom line.

While web scraping can be a helpful tool, businesses should be aware of the potential risks and legal implications. Scraping data from websites without the permission of the site owner can result in legal action.

Furthermore, businesses should be careful to avoid scraping sensitive data, such as personal information, that could be used to commit identity theft or other crimes.

Happy scraping for market research!